I’m Stephanie Chasteen, an external evaluator on NSF-funded projects aiming to improve STEM education at the university level. This is part 1 of a 2-part series on evaluative rubrics. In today’s post I’ll talk about how we developed the rubric. Tomorrow I’ll share how we created visuals from the rubric data to inform action steps.

This rubric was developed for the Physics Teacher Education Coalition (PhysTEC), a project addressing the shortage of well-prepared future physics teachers in the K-12 sector. To give guidance to physics departments, we developed an analytic rubric assessing different activities a physics department could undertake.

The rubric items and their levels were based on what happens at institutions that graduate large numbers of physics teachers. We conducted 1-2 day site visits (virtual and in-person) with a wide variety of stakeholders at 8 such “thriving” institutions. At each visit we rated the program on the current pilot rubric, then modified the rubric to better reflect our observations. This was a consensus process among the researcher-evaluators and project staff. The revised draft was then used at the next site visit. The process was labor-intensive, but it has resulted in an instrument with relatively good validity and buy-in from the community and project staff.

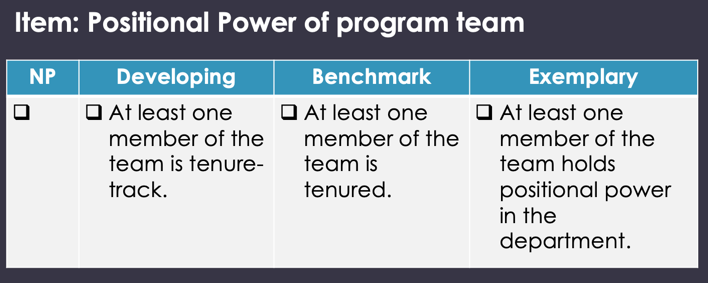

The final rubric (the Physics Teacher Education Program Analysis Rubric) contains about 90 items, each rated at one of four levels: Not Present, Developing, Benchmark, and Exemplary, with Benchmark being the recommended level. We attempted to make items unidimensional for clarity. An example item is shown below.

The rubric is intended primarily for self-assessment, helping physics faculty leaders identify key gaps and make improvements. It is, of course, also a valuable evaluation tool for systematically collecting information on what physics teacher education programs do, including those funded by the client.

Lesson Learned:

A rubric doesn’t have to be perfect to be useful. There are certainly improvements to be made, but many users have told me that they find the rubric items themselves helpful, because the rubric provides a catalog of the things that are involved in physics teacher preparation.

Cool Trick:

I found an Excel ninja who was able to program a wonderful version of the rubric in Excel, with radio buttons to select the desired level. This allowed easy aggregation of the data for several purposes. In summary tabs, items that are incomplete or flagged for “more information” are displayed for the user to review, and the user is given visualizations of the results to aid interpretation. The Excel version is also awesome for me, as I can grab the entire results of the rubric in one copy/paste to analyze data for a single program, or aggregate across programs.
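For readers who would rather script this kind of summary than build it in Excel, here is a minimal Python sketch of the same idea. It is not the logic of our actual Excel tool. The names `ItemRating`, `summarize`, and `aggregate`, the item labels, and the "below Benchmark" list are all assumptions made up for illustration. Only the four level names come from the rubric itself.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# The four rubric levels, ordered from lowest to highest.
LEVELS = ["Not Present", "Developing", "Benchmark", "Exemplary"]


@dataclass
class ItemRating:
    item_id: str                  # hypothetical item label, e.g. "A1"
    level: Optional[str] = None   # None means the item has not been rated yet
    needs_info: bool = False      # flagged for "more information"


def summarize(ratings):
    """Summarize one program's ratings (an assumed structure, not the Excel tool's)."""
    for r in ratings:
        if r.level is not None and r.level not in LEVELS:
            raise ValueError(f"Unknown level for {r.item_id}: {r.level!r}")

    # Items the user should revisit: unrated, or flagged for more information.
    to_review = [r.item_id for r in ratings if r.level is None or r.needs_info]

    # How many rated items landed at each level.
    counts = Counter(r.level for r in ratings if r.level is not None)
    level_counts = {level: counts.get(level, 0) for level in LEVELS}

    # Assumption: items rated below the recommended Benchmark level are possible gaps.
    benchmark_rank = LEVELS.index("Benchmark")
    below_benchmark = [
        r.item_id
        for r in ratings
        if r.level is not None and LEVELS.index(r.level) < benchmark_rank
    ]
    return {
        "to_review": to_review,
        "level_counts": level_counts,
        "below_benchmark": below_benchmark,
    }


def aggregate(programs):
    """Add up level counts across programs, given {program name: list of ratings}."""
    total = Counter()
    for ratings in programs.values():
        total.update(summarize(ratings)["level_counts"])
    return {level: total[level] for level in LEVELS}


if __name__ == "__main__":
    example = [
        ItemRating("A1", "Benchmark"),
        ItemRating("A2", "Developing", needs_info=True),
        ItemRating("A3"),  # not yet rated
    ]
    print(summarize(example))
```

Keeping the levels in an ordered list makes "below Benchmark" a simple index comparison, and the same per-program summary feeds the cross-program totals.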

Rad Resources:

The project website, http://phystec.org/thriving, has our Excel rubric, user’s guides, and other supports.

Acknowledgements: We acknowledge funding from NSF-0808790 for development of the rubric and visuals.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.