Welcome to Day 5 of Inclusive Evaluation Design hosted by the Disabilities and Underrepresented Populations TIG. We are June Gothberg and Caitlyn Bukaty, the chairs of the TIG. Over the past two years, the chairs and other invited members of the DUP TIG have been collaborating with the United Nations Evaluation Group (UNEG) Gender, Disability & Human Rights Working Group to help provide guidance on inclusive evaluation approaches. Much of this work has taken place during the COVID-19 global pandemic, which has raised considerations for conducting evaluations virtually or at a distance. The Secretary-General shared the following sentiments in the 2021 report:

- “The success of our recovery from the COVID-19 pandemic will be measured by the extent to which people, particularly those who are most excluded, are placed at the centre of recovery efforts”.

- “The crisis should be transformed into an opportunity to build a world that is more equal and inclusive and to ensure that our societies are more resilient and agile”.

- “I am therefore more resolved than ever to ensure that persons with disabilities are included in the COVID-19 recovery process and encourage all entities and country teams to redouble their efforts to mainstream disability inclusion throughout the United Nations system”.

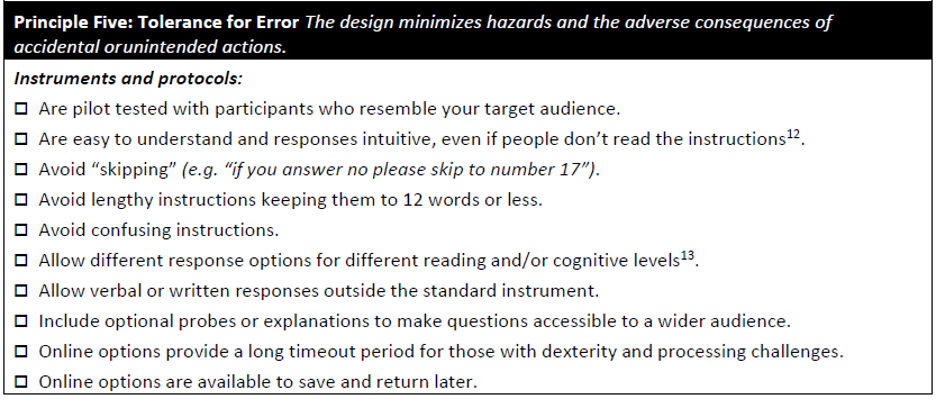

Alongside these sentiments, the report makes a call to “improve inclusive evaluation efforts”. As a group, the UNEG has committed to embracing Universal Design in its push for more inclusive evaluation designs. Through this collaboration, we have drafted the Universal Design for Evaluation Checklist (5th ed.; Gothberg, Bukaty, & Sullivan Sulewski, 2021). This week we have been highlighting this new edition, and today we focus on Principle 5: Tolerance for Error.

In their guidance on improving program evaluation in developing countries, Stecklov & Weinreb observed that while the science of program evaluation has come a long way in the past couple of decades, errors remain a serious concern and their implications are often poorly understood. They offer a few tips to help you reduce error in your evaluations.

- Pilot testing can save you a lot of time fixing issues, help avoid participant errors, and improve your data findings.

- Selecting the appropriate people to administer your survey or other evaluation tools can help increase participant trust and improve response rates.

- It’s important to evaluate whether there are systematic response or nonresponse patterns that might affect interpretation of findings.

- Ask yourself, do your findings make sense? If you aren’t sure, it’s important to compare your results with those that might be obtained from other evaluations or other data sources.
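If you keep a simple log of who was invited and who responded, the check for systematic nonresponse can be sketched in a few lines of Python. This is only a rough illustration, not a method from Stecklov & Weinreb or the checklist: the function names, the group labels, the sample counts, and the 15-point gap threshold are all assumptions made up for the example.

```python
from collections import defaultdict


def response_rates_by_group(records):
    """Return {group: response rate} from (group, responded) pairs."""
    invited = defaultdict(int)
    responded = defaultdict(int)
    for group, did_respond in records:
        invited[group] += 1
        if did_respond:
            responded[group] += 1
    return {group: responded[group] / invited[group] for group in invited}


def flag_low_response(records, gap=0.15):
    """List groups whose response rate trails the overall rate by more than `gap`.

    The default gap of 0.15 is an arbitrary, illustrative threshold.
    """
    if not records:
        return []
    overall = sum(1 for _, did_respond in records if did_respond) / len(records)
    rates = response_rates_by_group(records)
    return sorted(group for group, rate in rates.items() if overall - rate > gap)


# Hypothetical invitation log: (group label, whether the person responded).
sample = (
    [("screen reader users", True)] * 4
    + [("screen reader users", False)] * 6
    + [("other participants", True)] * 16
    + [("other participants", False)] * 4
)

print(response_rates_by_group(sample))
print(flag_low_response(sample))
```

A flagged group is a prompt to look closer, for example at whether the survey format was accessible to that group. It is not proof of bias on its own.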

Hot Tips

- The design should minimize hazards and the adverse consequences of accidental or unintended actions.

- Arrange elements to minimize hazards and errors: most used elements, most accessible; hazardous elements eliminated, isolated, or shielded.

- Provide warnings of hazards and errors.

- Provide fail-safe features.

- Discourage unconscious action in tasks that require vigilance.

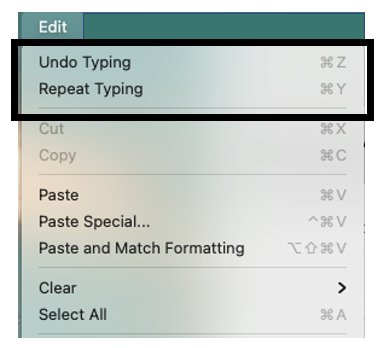

- A good example of minimizing hazards is the undo, redo, and repeat features of most word processors.

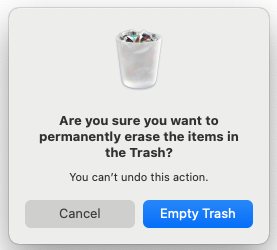

- Another good example is the warning message that appears when you try to empty the Trash on a Mac.

Rad Resource

- Universal Design for Evaluation Checklist (5th ed.), Principle 5: Tolerance for Error – this research-based checklist was developed by the DUP TIG at AEA specifically to help evaluators design inclusive evaluations.

The American Evaluation Association is hosting the Disabilities & Underrepresented Populations TIG (DUP) Week. The contributions all week come from DUP members. Do you have questions, concerns, kudos, or content to extend this AEA365 contribution? Please add them in the comments section for this post on the AEA365 webpage so that we may enrich our community of practice. Would you like to submit an AEA365 Tip? Please send a note of interest to AEA365@eval.org. AEA365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.