Hello, AEA365 community! Liz DiLuzio here, Lead Curator of the blog. This week is Individuals Week, which means we take a break from our themed weeks and spotlight the Hot Tips, Cool Tricks, Rad Resources and Lessons Learned from any evaluator interested in sharing. Would you like to contribute to future individuals weeks? Email me at AEA365@eval.org with an idea or a draft and we will make it happen.

Hello. From 2016 to 2023, Sharon Twitty, Natalie Lenhart, and I (Paul St Roseman) implemented an evaluation design that incorporates empowerment evaluation, developmental evaluation, and evaluation capacity building. This blog series, which we call Walking Our Talk, presents the process in four parts:

- Laying the Foundation – The Rise of The Logic Model

- Using the Data – Emerging a Data Informed Evaluation Design through Peer Editing

- New Insights – Emerging A Collaborative Diagnostic Tool and Data Visualization

- Charting the Course of Data Use – Collaborative Data Analysis

Today’s post is Part 2 in the series.

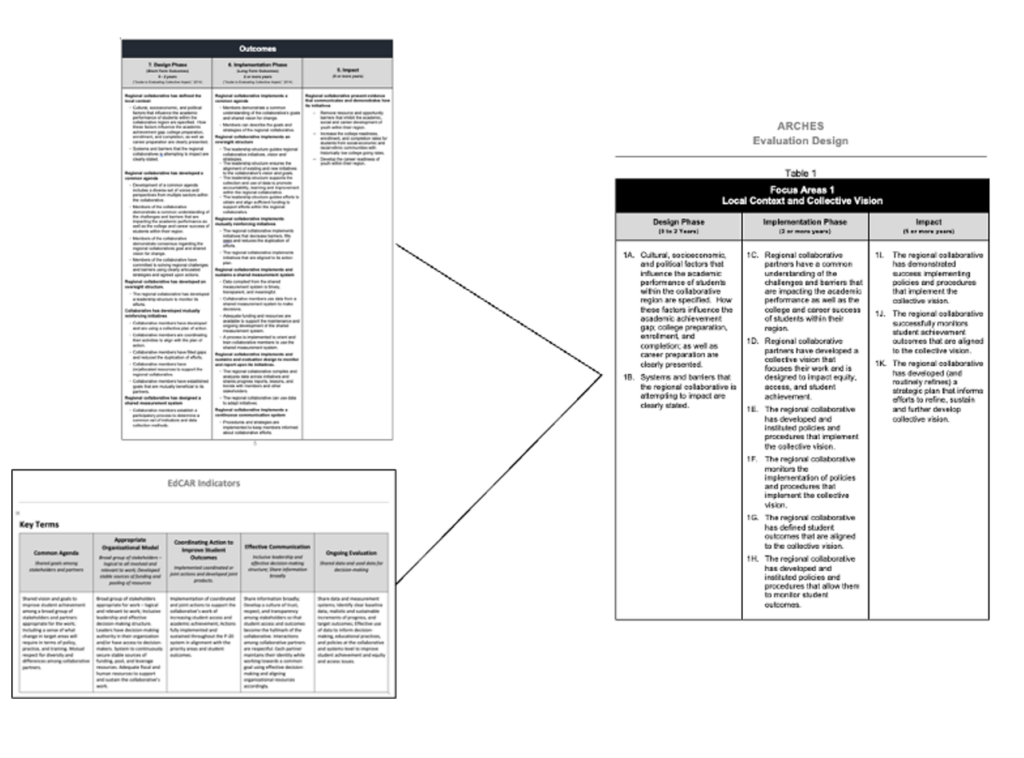

The Emergence of Data Informed Evaluation Design

The evaluation team's work in 2017 also intersected with the development of an evaluation design. In a previous evaluation effort, ARCHES had developed a self-assessment survey tool that was used to document the development of intersegmental collaboratives. From this tool, a list of indicators was developed and compared with the outcome indicators listed in the logic model. This process resulted in a refined set of outcome indicators that served as the foundation for developing an evaluation design for ARCHES. As the lead evaluator, I developed an initial draft of the evaluation design and presented it to Sharon and Natalie in January 2018. They were tasked with peer editing the document, which would be finalized and approved by March 2018.

Cool Trick #1: Evaluation Capacity Building Through Peer Editing Develops Trust, Strengthens Capacity to Focus Work, and Distributes Evaluation Tasks Across Stakeholders

The use of peer editing via administrative meetings, workshop activities, and Google Suite documents had become an essential strategy for developing evaluation capacity throughout ARCHES. This approach allowed meetings and workshops to be working sessions that resulted in documents that could be used as sources of data or as refined deliverables, such as a logic model or evaluation design. This approach to collaboration emphasizes doing over planning and critical discussion over check-ins. As the evaluation team developed trust and strengthened its capacity to focus its work, deliverables were developed more quickly, and evaluation tasks began to be distributed across the group.

Cool Trick #2: Collaborative Agenda Development and Note Taking Develops Voice and Agency

A routine peer editing artifact in the evaluation effort was the collaboratively developed meeting agenda. The lead evaluator would draft the agenda, and each team member was able to edit the document and add topics. These agendas, in turn, were used as templates for compiling notes, which became essential data sources for tracking, documenting, and planning evaluation efforts. This approach modeled the importance of developing voice and agency within evaluation efforts, as well as a strategy of collaboration that could be used across ARCHES with internal stakeholders and clients.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.