Hi, I’m Grisel M. Robles-Schrader, Director of Evaluation and Stakeholder-Academic Resource Panels (ShARPs) and Director of the Applied Practice Experience at Northwestern University. I am also co-chair of the Latinx Responsive Evaluation Discourse (La RED) TIG.

Hi, I’m Keith Herzog, Administrative Director and Assistant Director of Evaluation, Northwestern University Clinical and Translational Sciences Institute (NUCATS) and Communications Co-chair of the Translational Research Evaluation (TRE) TIG.

Evaluating support for community engagement (CE) in research can be challenging because it means systematically documenting the fluid, time-intensive work of fostering community-engaged research, which ranges from identifying collaborators to coaching academics to creating a climate that values engaged scholarship. As CE is increasingly a requirement of many federal grants, organizations must demonstrate that they have integrated CE strategies and show the impact of those strategies on clinical and translational research.

The mission of the Center for Community Health (CCH) at Northwestern University is to catalyze and support meaningful community and academic engagement across the research spectrum to improve health and health equity. The center offers services and support in six domain areas, including consultation services, capacity building, fiscal support, partnership development, and collaborative work to create change at the institutional and community levels.

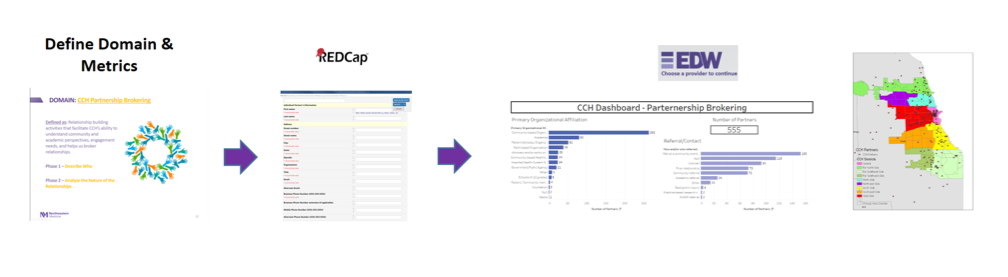

Given the breadth of these efforts, an interdisciplinary team of practitioners from public health, research evaluation, and data sciences developed a comprehensive evaluation plan. Using REDCap software in combination with Excel, the team created and implemented a data collection system to monitor and report on the full spectrum of engagement activities. Data are being compiled and reported via an online Tableau dashboard to help leadership and staff analyze:

- Quality improvement issues: Are we providing resources that meet the needs of community partners? Academics? Community-academic partnerships?

- Qualitative process analysis: In what research phase are we typically receiving requests for support (e.g., proposal development phase, implementation phase)? What types of projects are applying for seed grants?

- Outcomes: Are new partnerships stemming from our support? Are supported research projects leading to new policies, practices, and/or programs?
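For readers curious about what sits between data collection and the dashboard, here is a minimal sketch of how exported request records might be tallied to answer questions like the ones above. It is an illustration only: the column names (`requester_type`, `research_phase`, `new_partnership`) and the sample rows are hypothetical and do not reflect the CCH team's actual REDCap fields or data.

```python
import csv
import io
from collections import Counter

# Hypothetical export: column names and rows are illustrative only,
# not the CCH team's actual REDCap fields or data.
SAMPLE_EXPORT = """request_id,requester_type,research_phase,new_partnership
1,academic,proposal development,yes
2,community partner,implementation,no
3,academic,proposal development,yes
4,partnership,dissemination,no
"""


def summarize_requests(csv_text):
    """Count support requests by research phase and tally new partnerships."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    by_phase = Counter(row["research_phase"] for row in rows)
    new_partnerships = sum(1 for row in rows if row["new_partnership"] == "yes")
    return {
        "total": len(rows),
        "by_phase": dict(by_phase),
        "new_partnerships": new_partnerships,
    }


summary = summarize_requests(SAMPLE_EXPORT)
print(summary)
```

A summary like this could be saved to a spreadsheet or fed to a dashboard tool; the point is simply that each evaluation question maps to a field that has to be collected consistently.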

The CCH team is developing plans with community and academic partners to share these data with community stakeholders, funders, and institutional leadership. We anticipate that our CE support and evaluation efforts will continue to improve and/or adapt as we engage these different groups.

Lessons Learned:

- Engage key stakeholders throughout the evaluation process in meaningful ways

- Establish strong project management skills

- Create feedback mechanisms, including piloting tools with real data

- Support standardization by:

  - Developing manuals with definitions, concrete examples, and instructions

  - Organizing team training sessions and hosting regular check-in meetings

Rad Resources:

- People – engaging stakeholders with different perspectives EARLY and OFTEN ensured that the dashboard reflected meaningful and actionable metrics in strategic areas

- REDCap (Research Electronic Data Capture) software to organize and manage data collection activities and generate simple reports

- Tableau to create interactive dashboards, enabling use of real-time data to inform program and strategic decision-making – turning evaluative data into action!

The American Evaluation Association is celebrating Translational Research Evaluation (TRE) TIG week. All posts this week are contributed by members of the TRE Topical Interest Group. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.