Hi, I’m Nicole Turcheti and I’m a social research scientist at Public Health – Seattle King County. I am the lead evaluator for the Trauma-Informed and Restorative Practices (TIRP), one of the county’s strategies within the Best Starts for Kids initiative. Working with community, it became clear that not fully understanding what evaluation is and what it entails is one of the main barriers keeping folks from contributing input to the evaluation. In response, I developed some tools to better communicate what the steps of the evaluation are and how the data the community provides will be used to inform the evaluation.

Having community residents participate is essential to the TIRP evaluation, which is intended to build upon community knowledge to shed light on the programs’ implementation, outcomes and opportunities for improvement. Hopefully these resources can support you in providing a pathway for communities to bring their voices to your evaluation!

Rad Resource: Nicole Turcheti’s Rad Resource for Participatory Evaluation – You can download this resource to see all of the details and visuals.

Hot Tips:

- Use metaphor to explain evaluation

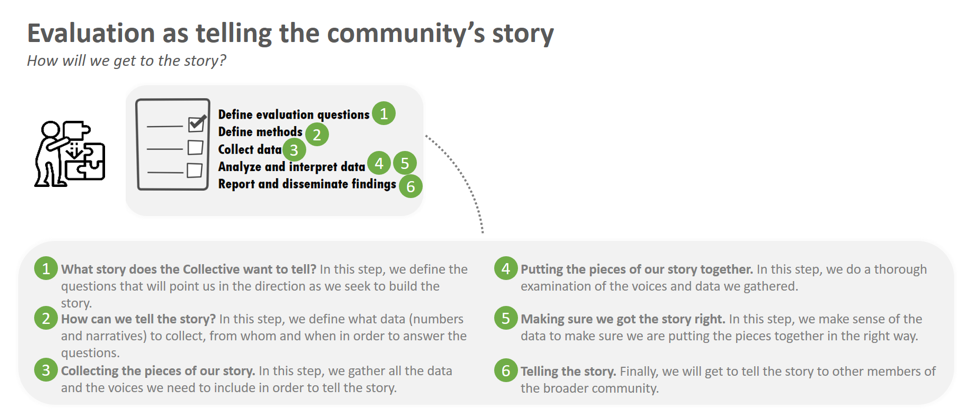

I work with communities that have been historically marginalized and, while discussing the purpose of the evaluation, they told me, “We want to tell our story!” This inspired me to describe the evaluation to them through the metaphor of a story. I explained the different steps of evaluation by presenting them as the steps it would take to write and tell a story with data. Slide 1 shows these different steps. I also used them when building the timelines (slide 4).

- Highlight the community’s contribution to evaluation

Another thing I learned in the process of a participatory evaluation is that sometimes partners did not even realize they were contributing to the evaluation. I wanted to make that clear to folks and show how their ideas were contributing to our efforts to “tell their story.” To do that, I listed all the different community events that took place in each of the evaluation phases. Community meetings, advisory board meetings, calls, online surveys: every opportunity to collaborate was listed. This helped folks feel included and take more ownership of the work.

- Use a visual that explains coding to non-technical audiences

I wanted to show the community organizations how the narrative reports they submitted were going to be used to help us answer the evaluation questions. Slide 3 shows the visual I created to explain how coding works, and how looking at themes across different reports would help us achieve the evaluation goals. This also reinforced folks’ understanding of why putting effort into answering the report questions accurately and in detail was so important.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

Hi Nicole,

Thank you for sharing your tips to enhance community involvement in the evaluation process. I too have seen firsthand how a lack of evaluation knowledge has led to decreased participation in, or indifference toward, an evaluation. I like that you have developed tools to better communicate the steps of your evaluation and how the community data will be instrumental to the evaluation process. I particularly appreciated your first two tips on using a metaphor to explain evaluation and highlighting the community’s contribution to evaluation. I think these two tips alone could significantly increase stakeholder contributions.

I have been teaching high school students for the past 12 years, am currently completing my Master of Education, and have chosen the assessment and evaluation concentration. As a result, I am learning about different evaluation approaches and how best to conduct a quality evaluation. Through my research, I have gained an appreciation for the shift towards more stakeholder-centered approaches to evaluation, such as empowerment, collaborative, and participatory evaluation approaches. Participation in evaluation gives stakeholders confidence in their ability to use research procedures, trust in the quality of the generated information, and a sense of ownership in the evaluation results and their applications (Ayers, 1987, as cited in Shulha and Cousins, 1997). Collaborative methods promote capacity building, organizational learning, and stakeholder empowerment (Weiss, 1998).

As a leader at my school holding a position of influence, I try to encourage all teachers, regardless of age and stage, to participate in school-wide decision-making and evaluations. I strive to ask the right questions and open communication channels between staff, students, and parents. It is important to gather information and observations from all stakeholders and not to assume my view of reality is a shared one. Despite my efforts, I often find that many stakeholders are not willing to participate. Usually, this is due to a lack of time, a busy schedule, fear of saying something administration won’t like, or simply a belief that their thoughts won’t be appreciated or used. Do you have any insight into making stakeholders feel more valued and heard? How can I better promote engagement? A participatory approach is only effective when all stakeholders are represented.

Another evaluation method I have read about is appreciative inquiry. Do you have any experience with this approach? I like moving away from a deficit-based model and toward a strengths-based one. This approach might help build a positive mindset among stakeholders by identifying what works well and then brainstorming what else might be possible. In this approach, individuals feel appreciated because the model focuses on their strengths and values instead of their faults. Positive feedback often reaffirms self-worth and motivates individuals to further improve (both as individuals and as a team). Do you have any experience using the 4D model (discovering what is currently being done well, dreaming about what else could be done, designing what these possibilities would look like, and finally delivering the new product, design, or program)? While appreciative inquiry can also result in more collaboration, empowerment, and motivation among the members of various organizations, it may also be more prone to bias. While you cannot eliminate all biases (we all have them), have you found ways of reducing internal biases to produce a better-quality evaluation? Any tips for framing and legitimizing the parameters of an evaluation and reducing the assumptions and biases within which evaluation takes place?

Finally, have you found that more collaborative and participatory evaluations lead to less accountability than previously used technocratic approaches to evaluation? If so, how do you overcome this?

Thanks so much for your insight,

Megan

Hi Nicole,

I am a high school teacher from Ottawa, Canada, currently working on a master’s degree in education through Queen’s University in Kingston, Ontario. In my research for my Program Inquiry and Evaluation course, I came across your post.

What drew me to your post was Trauma-Informed and Restorative Practices (TIRP). I am a restorative practitioner and trainer who has worked in the Arctic with Inuit students for a number of years. This was a proving ground for me in the use of the restorative process in trauma-informed education. Intentional listening to stories became a salient component of my ability to connect with the students and community members. Storytelling is how Indigenous peoples pass on knowledge and cultural teachings to their children. Witnessing the respect and attention that elders received from the children as they told their stories was something to behold.

Your resource, Evaluation as telling the community’s story, stood out as an important tool in the collaborative evaluation process. What better way to break down barriers and get a community to buy into the process than to show that its voice is valued?

This will be a resource I will carry with me as I journey through my professional practice.

Best,

Kevin Nearing

Hi Nicole – thanks for the great post! I am always looking for ways to help explain the evaluation process to different community groups. You mentioned a visual that helps explain coding on slide 3 – was that the visual that was included in the blog? If not – could you send it my way?

Thanks in advance from your fellow Seattleite, Erin Hertel erin.m.hertel@kp.org