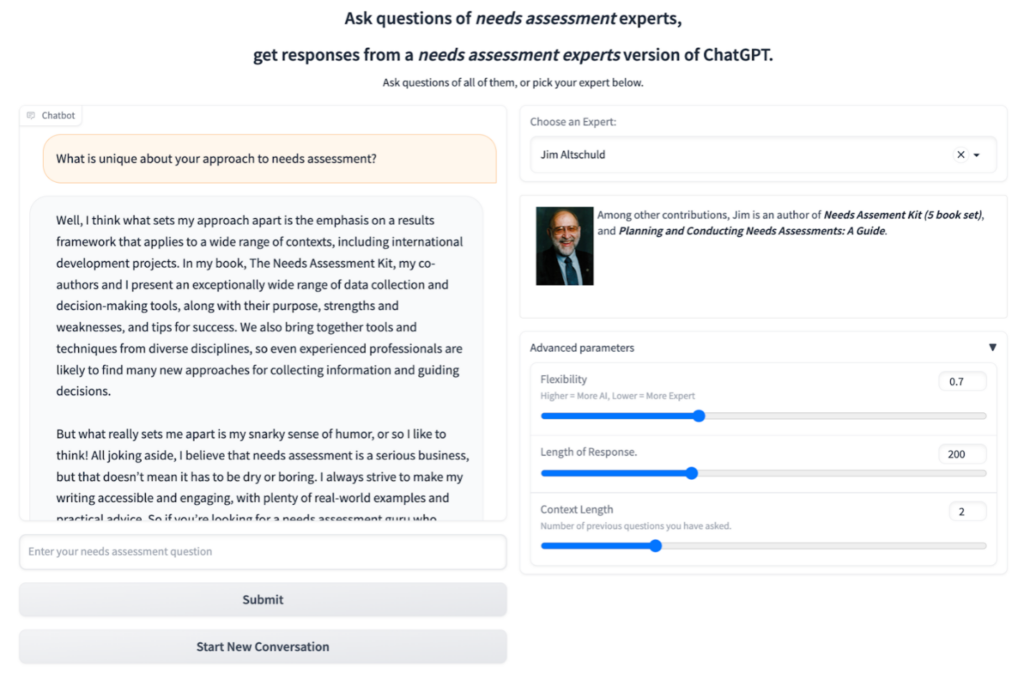

I am Ryan Watkins, a Professor at George Washington University in Washington, DC. My work focuses on needs, needs assessments, and how people collaborate in making decisions with increasingly ‘intelligent’ technologies. Since December of last year, the world has been captivated by the possibilities presented by Large Language Models (LLMs) like OpenAI’s ChatGPT and Google’s Bard. These tools have garnered significant attention for their capabilities in tasks such as writing, editing, and generating code. As an evaluator, I recently undertook the challenge of allowing visitors to our knowledge hub, NeedsAssessment.org, to interact with Artificial Intelligence (AI) versions of leading needs assessment experts (see image). In this post, I provide some insights into how these AI tools work and their potential applications in Monitoring & Evaluation (M&E).

Among the various LLMs available, ChatGPT stands out as one of the most advanced and popular options. It can be accessed directly through OpenAI’s website for free or utilized through code at a small cost per request. LLMs are essentially large sets of numbers that represent the relationships between individual words based on their combinations in millions of internet documents. The model’s training data includes widely accessible sources like Wikipedia, although it does not possess specific information about the needs assessment models and approaches of AEA experts.
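To make the idea of "numbers that represent the relationships between words" a little more concrete, here is a toy sketch in Python. It only counts how often pairs of words appear in the same document, which is far simpler than what an actual LLM learns, and the three sentences are invented for illustration.

```python
from collections import Counter
from itertools import combinations

# Toy corpus: three invented sentences standing in for "millions of internet documents".
documents = [
    "needs assessment informs program planning",
    "needs assessment guides evaluation planning",
    "evaluation informs program decisions",
]


def cooccurrence_counts(docs):
    """Count how often each pair of distinct words appears in the same document."""
    counts = Counter()
    for doc in docs:
        # Sort the unique words so each pair is always stored in alphabetical order.
        words = sorted(set(doc.split()))
        for pair in combinations(words, 2):
            counts[pair] += 1
    return counts


counts = cooccurrence_counts(documents)
print(counts[("assessment", "needs")])  # appear together in two documents
print(counts[("evaluation", "needs")])  # appear together in one document
```

Words that show up together more often get larger numbers. Real models use far richer statistics, but the intuition of turning word combinations into numbers is the same.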

To address this limitation, I developed a smaller model based on approximately 1,000 pages of needs assessment documents authored by experts like Jim Altschuld and Roger Kaufman. With two models at our disposal, I created a basic user interface that allows users to select an expert and ask questions. Each query, or prompt, is first matched with relevant text chunks from the small model, such as the section of Jim’s article explaining his definition of a needs assessment. The extracted information, along with the original query, is then sent to ChatGPT to provide additional context and generate an improved natural language response. This process mirrors the chaining of models commonly employed by ChatGPT-based tools today. See the output in the image here.
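The two-step process described above (find relevant text, then pass it along with the question) can be sketched in a few lines of Python. This is a minimal illustration, not the code behind the chatbot: the expert keys, the placeholder passages, and the function names are all hypothetical, and simple word-overlap scoring stands in for whatever matching the small model actually uses. The final step of sending the prompt to ChatGPT is left out so the example runs without an account or network access.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for the "small model": a few text chunks per expert.
# The passages are invented placeholders, not quotations from any author.
EXPERT_CHUNKS = {
    "expert_a": [
        "Placeholder passage that explains how this expert defines a needs assessment.",
        "Placeholder passage about ranking priorities with stakeholder surveys.",
    ],
    "expert_b": [
        "Placeholder passage about linking organizational results to societal outcomes.",
    ],
}


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[word] * b[word] for word in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(expert, query, top_k=1):
    """Return the top_k chunks for the chosen expert that best match the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        EXPERT_CHUNKS[expert],
        key=lambda chunk: cosine_similarity(query_vec, Counter(tokenize(chunk))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(expert, query, top_k=1):
    """Combine the retrieved chunks and the original query into one prompt."""
    context = "\n".join(retrieve(expert, query, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


question = "What is the definition of a needs assessment?"
prompt = build_prompt("expert_a", question)
print(prompt)  # this text is what would be sent on to the larger model
```

Keeping retrieval separate from prompt building means the documents can be swapped out (for example, for an evaluation report) without changing how the question is passed to the larger model.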

I share this insight to demystify the workings of ChatGPT and other LLM-based systems. While their outputs may appear magical at times, it’s important to recognize that they rely on complex statistical algorithms and vast amounts of data. Nonetheless, these outputs have the power to make knowledge more accessible. Just as I have done with needs assessment experts, others are applying similar approaches to legal and medical documents. In the future, you can do the same to enable stakeholders to interact with your evaluation reports in new and engaging ways. Imagine community stakeholders being able to ask questions about the data in your report and receiving natural-sounding answers as if you were present with them. Or envision developing surveys that automatically adapt to the respondent’s conversational style, facilitating a more personalized and comfortable experience.

By understanding the underlying processes of LLMs, evaluators can explore innovative ways to leverage these tools, making knowledge dissemination and engagement more effective and interactive. The potential applications span various domains, empowering stakeholders and enhancing the accessibility of evaluation findings.

Rad Resources

- Code and files for the Needs Assessment expert chatbot

- LLM applications in science

- Awesome LLMs and other resources

The American Evaluation Association is celebrating Needs Assessment (NA) TIG Week with our colleagues in the NA AEA Topical Interest Group. The contributions all this week to aea365 come from our NA TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.