Hello! My name is Toi Wise. I am an Evaluation and Data Specialist for the education department at Enlace Chicago, a community-based nonprofit organization that serves a predominantly Latinx/e neighborhood. I also serve as the Secretary for AEA’s local affiliate, the Chicagoland Evaluation Association. This year, I was fortunate to co-present on “Collaborative Usage of Data and Evaluation with Stakeholders” at ACT Now’s Illinois Statewide Community School Convening. The audience included stakeholders ranging from program staff to school administrators, many of whom play a role in completing an annual evaluation of their out-of-school time programs. I am excited to share some of the key takeaways from this presentation.

Key Takeaways

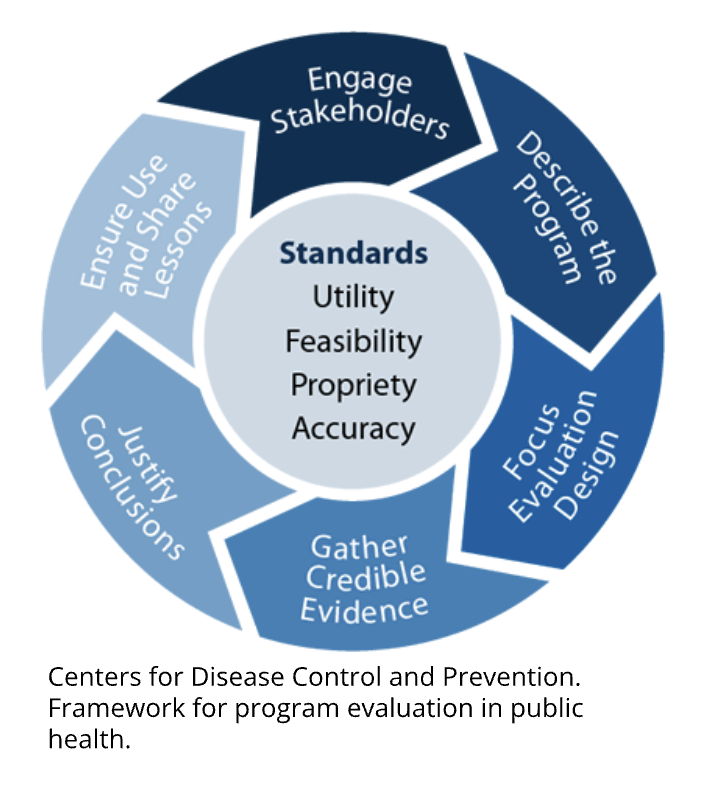

Using the Centers for Disease Control and Prevention’s (CDC) Framework for Program Evaluation as a foundation, we can begin thinking about a proactive, participatory approach that starts with identifying stakeholders and creating a plan for collaboration from the beginning.

- Engage Stakeholders – Although this step sits at the top of the framework’s cycle, stakeholder engagement happens throughout the entire process. Equity among stakeholders is important: engagement should not simply follow a hierarchy based on roles and titles.

- Define the Program – In cases where the program objectives have already been defined by funders, it is still important to collaborate with administrators and staff to get a thorough understanding of the overarching goals, activities, and curriculum of each program.

- Focus Evaluation Design – Including administrators and staff at this stage helps to increase buy-in and ownership of the evaluation process. This can include partnering to develop evaluation questions, determine data collection methods, and create or modify data collection tools.

- Gather Credible Evidence – Piloting the data collection tools before gathering evidence, and drawing on the expertise of your participants, staff, and/or advisory boards to confirm the tools’ validity, is another way to make the evaluation more participatory.

- Justify Conclusions – Plan to bring any preliminary findings back to priority stakeholders to discuss conclusions. Are the findings valid based on their firsthand knowledge? Is any additional context needed?

- Ensure Use and Share Lessons – Create a dissemination plan that includes a variety of stakeholders and consider ways to make the final report more accessible. This may include writing an executive summary, creating a 1-page summary or infographic, and/or translating a summary into another language. Hosting a data party to bring the final evaluation findings and recommendations back to priority stakeholders promotes further discussion and brings all of the collaborative efforts full circle.

Challenges

As we know, when working for a nonprofit organization, you are often given a set list of objectives that you must measure and report back to funders. By nature, this removes a major collaborative piece from the evaluation puzzle: the coming together of minds to reflect on the program’s mission and set your own objectives and goals. That reflection is still essential regardless of funder requirements, but predetermined objectives impose fixed standards that can make a participatory approach more challenging.

For me, this has raised the questions: “How can I engage the staff at my organization in this evaluation process, despite the outcomes already being established? How can I incorporate their knowledge and expertise, and foster a participatory environment?”

Lessons Learned

- Take a proactive approach to collaboration

- Acknowledge and seek out the expertise of individuals and groups with vested interests

- Be intentional about including stakeholders in various areas of the evaluation process

- Include details about the who, what, when, and where in the evaluation plan

- Remember that this is an iterative process, not a fixed one

The American Evaluation Association is hosting Latina/o Responsive Evaluation Discourse TIG Week. The contributions all this week to AEA365 come from LA RED Topical Interest Group members. Do you have questions, concerns, kudos, or content to extend this AEA365 contribution? Please add them in the comments section for this post on the AEA365 webpage so that we may enrich our community of practice. Would you like to submit an AEA365 Tip? Please send a note of interest to AEA365@eval.org. AEA365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.