Hello, AEA365 community! Liz DiLuzio here, Lead Curator of the blog. This week is Individuals Week, which means we take a break from our themed weeks and spotlight the Hot Tips, Cool Tricks, Rad Resources and Lessons Learned from any evaluator interested in sharing. Would you like to contribute to future Individuals Weeks? Email me at AEA365@eval.org with an idea or a draft and we will make it happen.

My name is Svetlana Negroustoueva, and I lead the Evaluation Function at CGIAR, an agricultural research-for-development organization.

And my name is John Gargani. I’m a former AEA President and a member of CGIAR’s Evaluation Reference Group.

What is Research for Development?

Research for development (R4D) is scientific research undertaken to improve the lives of people and the environment. Often, it produces innovations with the potential to create transformational impacts. When evaluating research for development, two domains must be addressed: the quality of science and development impacts. We describe how this is done at CGIAR, which recently published a new framework for evaluating R4D.

What is CGIAR?

CGIAR is the world’s largest global agricultural innovation network. Its 13 centers span five continents and conduct scientific research toward a common purpose: a future in which food is secure for all, achieved by transforming food, land, and water systems in a time of climate crisis. For example:

- the International Food Policy Research Institute (IFPRI) partnered with the Pakistan Ministry of National Food Security and Research to develop market reforms in Punjab that improved the livelihoods of farmers and made food more widely available at lower prices;

- Dr. Shakuntala Haraksingh Thilsted, Global Lead for Nutrition and Public Health at WorldFish, was awarded the World Food Prize in 2021 for innovating the production of small native fish species that improved the nutrition of vulnerable families across Asia, Africa, and the Pacific; and

- the International Institute of Tropical Agriculture (IITA) and Centro Internacional de Agricultura Tropical (CIAT) developed new varieties of cassava that increased production by Nigerian smallholder farmers by 64% and added 2.7 million hectares of cassava cultivation in South and Southeast Asia.

Work like this is critical for the wellbeing of people around the world. Evaluating it well matters.

Evaluating Quality of Science and Development Outcomes

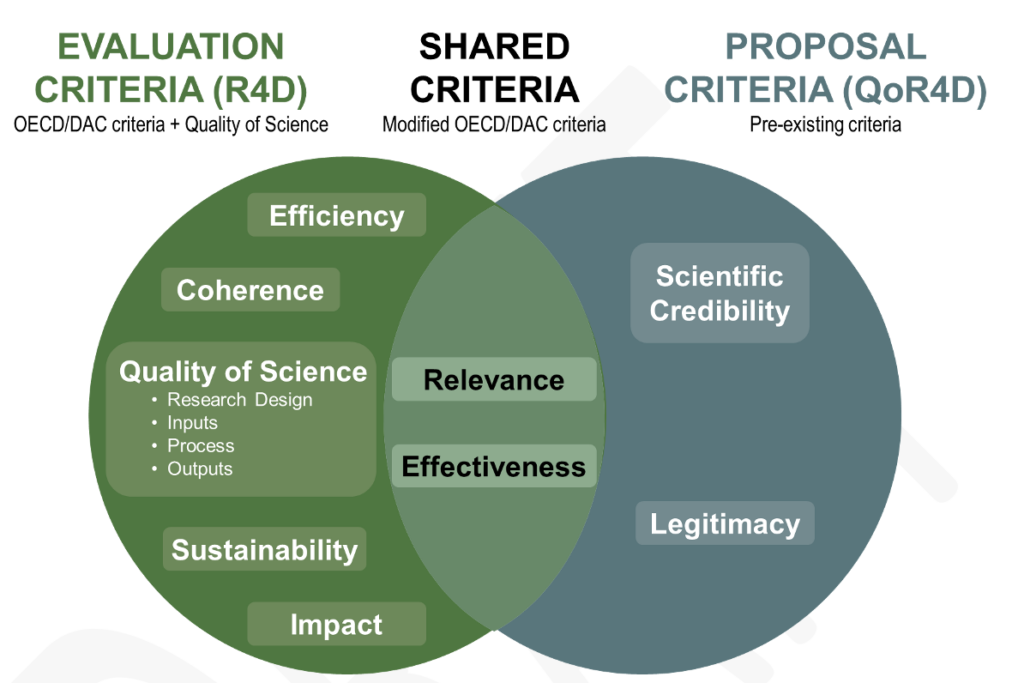

The job of evaluating CGIAR’s extensive research portfolio falls to its Independent Evaluation Function, which recently introduced guidelines for evaluating R4D using seven criteria. One criterion is Quality of Science, which is composed of four elements: research design, inputs, process, and outputs. The remaining six criteria (relevance, coherence, effectiveness, efficiency, sustainability, and impact) come from the OECD/DAC criteria. Two of these, relevance and effectiveness, have been modified to reflect how CGIAR has used these concepts to evaluate research proposals. This may confuse audiences outside CGIAR, because the new guidelines are used to evaluate Research for Development (R4D), whereas CGIAR’s pre-existing approach to selecting research proposals assesses the Quality of Research for Development (QoR4D).

The R4D criteria are intended as a starting place that evaluators may modify, expand, or condense, depending on their purposes and contexts.

The Need for Peer-to-Peer Learning

The development of CGIAR’s guidelines benefited greatly from peer-to-peer learning. In particular, discussions in the EvalForward community suggested concrete ways to structure R4D evaluative criteria. This led the Evaluation Function to develop criteria that:

- are clear, flexible and adaptable;

- encapsulate lessons from a decade of CGIAR evaluations;

- include a set of methods and questions useful in many evaluation contexts;

- may be used at different stages of the project cycle;

- emphasize the importance of building and leveraging partnerships; and

- build on industry standards, such as the OECD/DAC criteria.

Next Steps and Opportunities to Contribute to Our Work

Guidelines are useful, but on their own they may not be effective. CGIAR therefore plans to refine the guidelines based on what we learn by:

- providing training sessions, online resources, and mentor partners;

- pilot-testing the guidelines in evaluations, notably those of CGIAR’s GENDER and Genebank Platforms; and

- continuing to learn from peers in the evaluation community.

Rad Resources

CGIAR has a trove of evaluation resources, including the new guidelines.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.