My name is Sue Ann Corell Sarpy, Principal of Sarpy and Associates, LLC, and Program Chair of the Disaster and Emergency Management Evaluation (DEME) TIG. This week's contributions represent research and practice in DEME with both national and international scope. The week starts with an evaluation study I conducted on resiliency training for workers and volunteers responding to large-scale disasters.

The National Institute of Environmental Health Sciences (NIEHS) Worker Training Program, with the Substance Abuse and Mental Health Services Administration (SAMHSA), identified a need to create trainings that promote mental health and resiliency for workers and volunteers in disaster-impacted communities. We used a developmental evaluation approach that spanned two distinct communities (e.g., Gulf South region; New York/New Jersey region), disasters (e.g., Gulf of Mexico Oil Spill; Hurricane Sandy), and worker populations (e.g., disaster workers/volunteers; disaster supervisors) to identify effective principles and address challenges associated with these dynamic and complex training needs. We used an iterative evaluation process to enhance development and delivery of the training: project stakeholders actively supported and participated in the evaluation and discussion of findings, and incorporated the effective principles (best practices/lessons learned) into the next iteration of training.

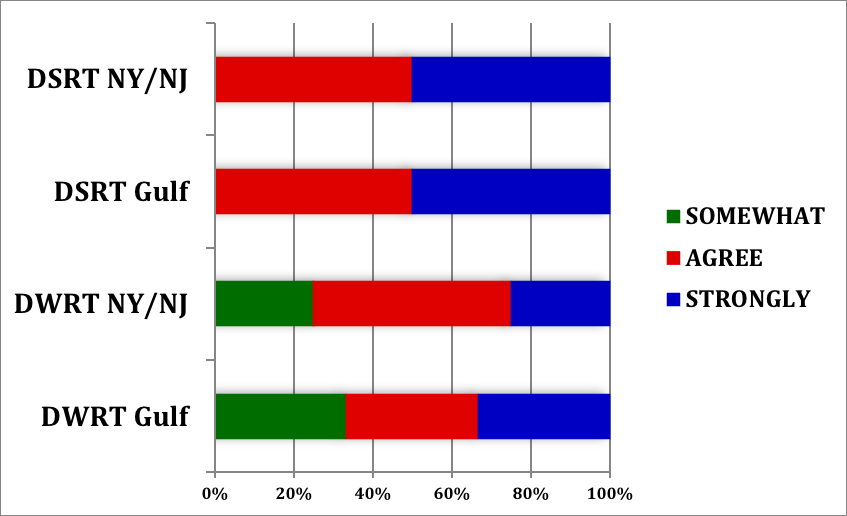

Evaluation results supported the usefulness of this type of developmental evaluation approach for designing and delivering disaster worker trainings. Training effectiveness was demonstrated in different geographic regions responding to different disaster events with different target audiences. The graphic above shows the percentage of ratings agreeing that the training met the needs of the various target audiences. Ratings increased as we continued to integrate the best principles into the training, from the disaster worker training in the Gulf South region to the final phase of the project, the disaster supervisory training in the New York/New Jersey region.

Lessons Learned: Several key factors were critical for success in evaluating resiliency training for workers and volunteers responding to large-scale disasters:

Major stakeholders actively involved in development, implementation, and evaluation of trainings. We included information from workers, supervisors, community-based organizations, and subject matter experts in the evaluation data and discussion of findings.

Evaluation conducted early in the training design, with effective principles fed back into each iteration. Evaluators were brought in as key stakeholders early in the process, and we were integral in revising and refining training products as the project progressed.

Build relationships and trust with various stakeholders to gather information and refine curriculum. Including stakeholder feedback, so that everyone had a voice in the system, built trust and buy-in for the evaluation process and was key to its success.

Creating a balance between standardized training and the flexibility to tailor training to meet needs (adaptability). The best principles that emerged across worker populations, communities, and disasters/emergencies provided a framework that gave the trainings structure while affording the flexibility needed to meet specific training needs.

Rad Resources: Visit the NIEHS Responder and Community Resiliency website for training resources related to this project.

The American Evaluation Association is celebrating Disaster and Emergency Management Evaluation (DEME) Topical Interest Group (TIG) Week. The contributions all this week to aea365 come from our DEME TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.