Hi y’all, Daphne Brydon here. I am a clinical social worker and independent evaluator. In social work, we know that a positive relationship built between the therapist and client is more important than professional training in laying the foundation for change at an individual level. I believe positive engagement is key in effective evaluation as well, as evaluation is designed to facilitate change at the systems level. When we engage our clients in the development of an evaluation plan, we are setting the stage for change…and change can be hard.

The success of an evaluation plan and a client’s capacity to utilize information gained through the evaluation depends a great deal on the evaluator’s ability to meet the client where they are and really understand the client’s needs – as they report them. This work can be tough because our clients are diverse, their needs are not uniform, and they present with a wide range of readiness. So how do we, as evaluators, even begin to meet each member of a client system where they are? How do we roll with client resistance, their questions, and their needs? How do we empower clients to get curious about the work they do and get excited about the potential for learning how to do it better?

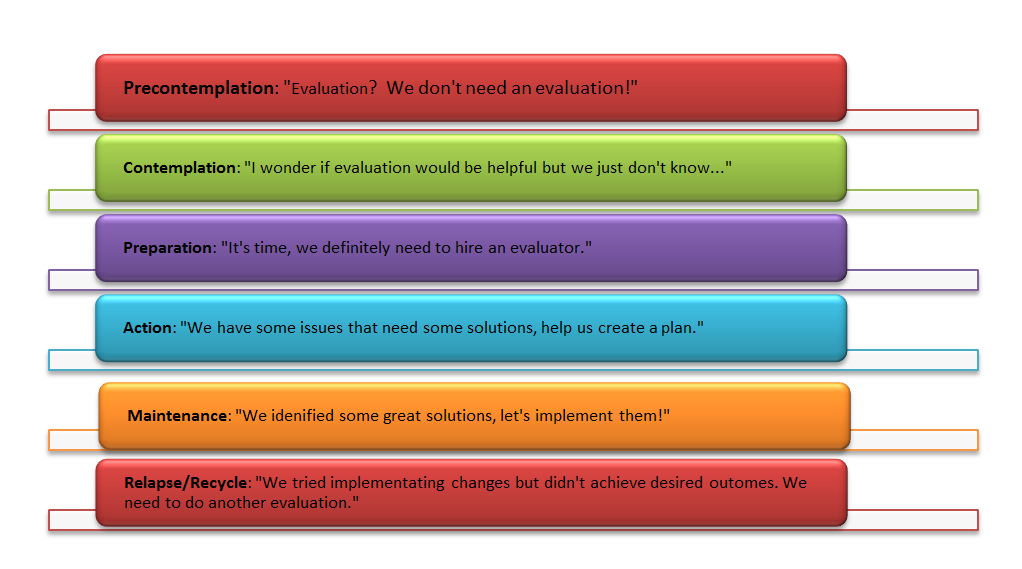

Hot Tip #1: Engage your clients according to their Stage of Change (see chart below).

I borrow this model, best known from substance abuse recovery, to frame this because, in all seriousness, it fits. Engagement is not a linear, one-size-fits-all, or step-by-step process. Effective evaluation practice demands we remain flexible amidst the dynamism and complexity our clients bring to the table. Understanding our clients’ readiness for change and tailoring our evaluation accordingly is essential to the development of an effective plan.

Stages of Change for Evaluation

Hot Tip #2: Don’t be a bossypants.

We are experts in evaluation but our clients are the experts in the work they do. Taking a non-expert stance requires a shift in our practice toward asking the “right questions.” Our own agenda, questions, and solutions need to be secondary to helping clients define their own questions, propose their own solutions, and build their capacity for change. Because in the end, our clients are the ones who have to do the hard work of change.

Hot Tip #3: Come to my session at AEA 2015.

This contribution is from the aea365 Tip-a-Day Alerts, by and for evaluators, from the American Evaluation Association. Please consider contributing – send a note of interest to aea365@eval.org. Want to learn more from Daphne? She’ll be presenting as part of the Evaluation 2015 Conference Program, November 9-14 in Chicago, Illinois.

Hi Daphne,

My name is Claire Shorthill and I am enrolled in a Program Inquiry and Evaluation course through Queen’s Professional Masters of Education program. Your argument about the importance of establishing a framework for positive engagement between clients and evaluators really connects to the discussions and debates about effective program evaluation we have been having throughout our program evaluation course. I have also seen in my own practice, as a British Columbia secondary school teacher, how establishing positive relationships with intended users is a key factor in facilitating long-term growth and change with students and colleagues. Your second tip about encouraging the intended users to take the lead on establishing evaluation questions and intended outcomes really resonated with me. As a Canadian teacher working overseas I have collaborated with educators, students and administrators from many parts of the world and learning to listen and, as you outline in the post, ask the “right questions” is one of the most valuable lessons I have learned during my time overseas. If we are to truly engage with intended users throughout the program evaluation process, then we must resist making assumptions about the intended users’ needs and goals.

I appreciate your focus on empathy in your discussion about the challenges we all face when it comes to implementing change. As you point out in your post, “[u]nderstanding our clients’ readiness for change and tailoring our evaluation accordingly is essential to the development of an effective plan.” However, have you ever found that a client’s readiness for change, or lack of readiness, completely stalls the program evaluation? If a client or intended user is at the Precontemplation stage on the Stages of Change for Evaluation, then how do we connect and engage with these intended users? Is there a point where a lack of readiness for change means a program evaluation, no matter how carefully the program evaluator tailors it to fit the concerns and goals of the intended users, is a non-starter? I am also curious about how we engage clients or intended users in effective program evaluation while being mindful of the potential for evaluation misuse in the process.

The more research I have done on effective program evaluation, the more I appreciate the moral complexities of engaging intended users in the evaluation process while conducting an unbiased program evaluation. However, your argument for creating a framework for positive engagement with intended users seems to be an essential starting point when it comes to conducting an effective and meaningful program evaluation. Thank you for sharing your tips about how program evaluators can positively engage with intended users during the evaluation process.

Thank you,

Claire

Dear Daphne,

Thanks for such a compelling article. I am taking a course in Evaluation Inquiry and your post really resonated with me.

You make a great point and I agree that the clients must be met where they are at, or every effort to convince them an evaluation is necessary may be moot. In reading your reflection, I wondered if the term “client” for you applies to all users and stakeholders. I would like to touch on points you have made that have really made me think.

Although I agree with involving clients in the process of evaluation, I worry about biased views and opinions influencing the findings and/or the outcomes; i.e., if the clients are too involved, they may influence the evaluator, who should instead be unbiased. However, if clients are not involved, I do not believe the evaluator could have a complete picture of the program just by sheer observation; I maintain that proactive and constant interaction would be necessary. I was wondering what your position on such a dilemma would be. In your experience, do you think it is worth taking the risk of influencing the evaluation process by involving all parties, or should one refrain from being too close to clients in order to avoid their bias about the program?

Also, I have been reflecting a lot on the topic of misuse and non-use of findings, which you have mentioned and about which I have read in several sources. After reading some literature, I had meaningful discussions with my peers about the topic; I have mixed feelings about the role of an evaluator in the use of findings based on the Weiss-Patton debate (1988, as cited in Shulha & Cousins, 1997). Should an evaluator just report satisfying data and findings, according to Weiss’ view, or should he/she ensure the findings are properly used, according to Patton’s view? I see merits in both views; nonetheless, I tend to side with Patton, for his approach seems to place more emphasis on minimizing the risk of misuse and non-use.

I am very curious to hear your thoughts based on your experience in the field.

Thank you,

Daniela

Shulha, L., & Cousins, B. (1997). Evaluation use: Theory, research and practice since 1986. Evaluation Practice, 18, 195-208.

Hi Daphne,

My name is Gagan and I am a junior high science educator. I am currently taking an evaluation course and planning a hypothetical evaluation design for a volunteer-based mentorship program.

Your sentiments about engaging clients to set the stage for change resonated with my recent learning from “The Sociological Roots of Utilization-Focused Evaluation” (Patton, 2015). Patton emphasizes fostering feelings of ownership by making the evaluation process personal for clients (Patton, 2015, p. 458). This can be achieved with your suggestion of meeting clients where they are as delineated by the stage of change chart.

The teacher in me found your first two tips very appealing, and I realized that there is overlap between engaging clients in evaluation and the educational paradigm. For example, teachers need to know their students’ level of understanding in order to provide the correct amount of support for their growth. Additionally, teachers are often encouraged not to impose their own knowledge on students. Rather, they should ask probing questions to get the students actively involved. In essence, I felt that your suggestions made complete sense.

As I delve further into my evaluation design, particularly regarding program staff involvement, I will be considering the implications of your suggestions. The program I’m evaluating is a purely volunteer-run organization. In this context, volunteers may need to dedicate extra time and energy to implement change in addition to the time they already devote to the program. As you mentioned, they will be the ones who do the heavy lifting, without any compensation or tangible incentive. So, I will have to reflect deeply on your question “How do we empower clients to get curious about the work they do and get excited about the potential for learning how to do it better?”

Thank you for the great insights!

Gagan

Patton, M. Q. (2015). The sociological roots of utilization-focused evaluation. The American Sociologist, 46, 457-462.

Dear Daphne,

I feel very fortunate to have come across your post, as it speaks to many of the questions I have recently been asking myself. I am currently developing a theoretical program evaluation design, and I found your thoughts regarding evaluation use especially helpful to this end. As a K-12 teacher, I decided that a participatory approach would be the most appropriate, as it would give teachers the chance to evaluate the program in which they are ultimately implicated. I totally agree with your point that positive engagement and building relationships with clients are critical for facilitating change. In this way, the evaluator can do their utmost to ensure that the results of the evaluation are not merely relevant but in fact tailor-made for the context. As you say, it is this engagement with clients that will ultimately allow the evaluation to lead to change.

I was particularly struck by the chart outlining the stages of change for evaluation that you included, as it spoke to me of the ‘readiness’ of clients to engage with the evaluation. In my own context, I could foresee teachers being at almost every stage at once for a given evaluation, and this raised questions for me about how this could be dealt with. To a certain extent, I wonder if this necessitates a high degree of flexibility in evaluating, such that an evaluation can meet the diverse needs of all of the clients within a client system. I think that this flexibility would represent a challenge in and of itself, but it would also be critical for the evaluator to ensure that, while varying levels of readiness are considered, the ultimate results of the evaluation are valid and meaningful. I think that this is where your assertion that evaluators need to focus on asking the right questions becomes so important. In an education context, I see this as providing teachers with the opportunity to participate in the process and propose their own solutions for their classrooms. In doing so, teachers will have ownership over the change process.

Thank you very much for sharing your thoughts! I will certainly be incorporating these ideas into my own program evaluation design.

Best Regards,

Adam Pirie

Hi Daphne,

Thank you for giving us your perspective and professional opinion as a clinical social worker on client engagement. I agree that relationship building and positive engagement are key to undertaking an effective collaborative evaluation. By using the term client, you’re referring to a single person, which has different implications when considering community evaluation planning. The addition of more stakeholders may warrant a different approach to planning an evaluation in light of your guiding questions: How do we meet a client where they are? How do we roll with client resistance, questions, and needs? How do we empower clients to get curious about the work they do and get excited about the potential for learning how to do it better? The chart you’ve attached seems like a great way to guide the process and assist with developing an evaluation at the proper stage for each client. I believe this can be adopted for a community as well, but much more data would be needed to identify the correct stage of change given the increased number of stakeholders.

Some literature discusses potential issues with stakeholder involvement in collaborative evaluation programming, including use/misuse, political, and ethical issues. When stakeholders are heavily involved in the evaluation planning stage, I think the relationship is developed enough to balance any future involvement and avoid any influences that could skew the evaluation. The second tip presented is a great point of view. In my current work, I often find a disconnect between policy planners and operators. This disconnect becomes even greater when the user has been disregarded throughout the planning process but is then asked to make changes based on a report they had no involvement with. So allowing the client, the expert, to contribute to the evaluation planning process pushes us toward asking the right questions, which in turn supports the client.

Thank you for sharing,

C. Elliott

Hi Daphne,

I read your post on engaging clients in evaluation planning and thought that you made some really great points. I also agree with your point that the “relationship built between the therapist and client is important…in laying the foundation for change at an individual level,” and I think this holds for almost all community service programs. I further believe that it is just as important as professional training, which involves building respectful, responsive relationships with clients. This is a vital professional skill, whether one is working with individuals or groups, or with adults or children. Building good rapport is ideal. In the paper “Evaluation use: Theory, research and practice since 1986,” Shulha and Cousins (1997) remind us of the serious concerns common to any such relationship: “Evaluators build partnerships, so they need to take measures to guard against idealism about the program, undesirable influences and systematic bias.” This includes relationships with clients.

You stated that you “believe positive engagement is key in effective evaluation as well as evaluation is designed to facilitate change at the systems level.” This is an important point that many evaluators can attest to. In the article “Have we learned anything new about the use of evaluation?” Carol Weiss (1998, p. 31) states that “any theory of evaluation use has to be a theory of change,” supporting your point about positive engagement. I strongly agree with your statement “When we engage our clients in the development of an evaluation plan, we are setting the stage for change…and change can be hard.” So true.

You asked the question, “How do we empower clients to get curious about the work they do and get excited about the potential for learning how to do it better?” It appears that client participation is an effective way to empower them. Shulha and Cousins (1997, p. 201), referencing Murphy, write: “consequences of having participants govern the many elements of evaluation, reported (among other things) that the quality of participants’ training was essential to both immediate evaluation use and their willingness to re-engage in evaluation activities.” This is a question that you have answered very well in your post!

Best regards,

Donna

References

Shulha, L., & Cousins, B. (1997). Evaluation use: Theory, research and practice since 1986. Evaluation Practice, 18, 195-208.

Weiss, C. H. (1998). Have we learned anything new about the use of evaluation? American Journal of Evaluation, 19, 21-33.

Hi Daphne,

Thank you for your post.

I’m very curious about the relationship between evaluators and clients, as they are the ones that will ultimately benefit from the evaluation results.

Your comment about an evaluator’s ability to meet the client where they are and really understand the client’s needs really resonated with me. Evaluators wear many hats in an evaluation process, and your advice about the stages of change in a client is brilliant.

The chart can be customized so easily, and if used correctly, it can keep evaluators on track and ultimately keep clients happy. But this requires a lot of flexibility and adaptability on the part of the evaluators themselves.

I also appreciate your second tip. It’s very easy for evaluators to feel protective over their own work, get lost in that bias, and, in the process, neglect their clients.

Maryam

Dear Daphne Brydon,

Your post ‘Engaging Clients in Evaluation Planning’ really struck a chord with me. As a classroom teacher, I can relate to your idea about positive engagement as a key to effective evaluation. Participation in evaluation gives stakeholders confidence in their ability to use research procedures, confidence in the quality of the information generated by these procedures, and a sense of ownership of the evaluation results and their application. This type of approach (Utilization-Focused Evaluation) was one of the approaches I chose to evaluate a social program I am currently designing as a student of Program Inquiry and Evaluation, even before I came across your article and gained an understanding of Michael Patton’s body of work in the field of evaluation. However, reading Weiss’ article “Have we learned anything new about the use of evaluation?” makes me think about the issue of bias. What is your view about employing an outside evaluator rather than an internal assessor to evaluate the program in order to eliminate this issue?

I agree with your view about engaging clients according to their stage of change and the “one size does not fit all” approach. Evaluators need to be flexible to accommodate the dynamism and complexity of the intended users of evaluation information. Similarly, my classroom is filled with diverse learners who differ not only culturally and linguistically but also in their background knowledge and learning preferences; therefore, it is paramount that instruction be differentiated in order to effectively address all students’ learning needs and enhance the success of all.

Finally, I really like the last tip in your post, which calls on evaluators to respect the intended users and take a non-expert position by “not being a bossypants.” This is especially important because developing relationships with stakeholders is a key step in the evaluation process and can help sustain their interest in it.

Once again, thank you for your article. It has shed some light on the importance of engaging clients in evaluation findings. Your article has given me some advice to put in my toolbox as I continue my journey into the program evaluation world.

Sincerely,

Awawu Agboluaje

Relating social work and program evaluation is a parallel that I had never thought about before. It is true that the relationship between the therapist and client is extremely important to how successful the therapy’s outcome is. Similarly, the relationship between an evaluator and the client is also very important and depends on the trust that the evaluator is able to develop with the client. Without that, it’s unlikely that the changes that are needed, no matter how accurate or necessary, are actually going to be implemented. Great tips! (Especially about being a bossypants; that’s great advice for all life relationships!)

What happens when the client is an entire community, as when a project seeks to end domestic violence or eliminate homelessness? How can academics measure success?