Greetings! We are Larry Dershem (Senior Advisor, Research & Evaluation), Ashley Bishop (Monitoring, Evaluation and Learning Advisor), and Brad Kerner (Senior Director, Sponsorship Program) working for Save the Children (SC), which is an international relief and development organization that seeks to ensure children survive, learn, and are protected internationally and in the US.

A retrospective impact evaluation (RIE) is an ex post evaluation of an evaluand to assess its value, worth, and merit, with a special focus on examining sustainability of intended results as well as unintended impacts. However, due to resource constraints, international development donors and organizations cannot afford to conduct many RIEs, limiting our ability to truly understand longer-term outcomes and impacts after the closure of a program. Save the Children’s sponsorship programs are currently investigating the feasibility of conducting RIEs in order to optimize learning from scarce resources.

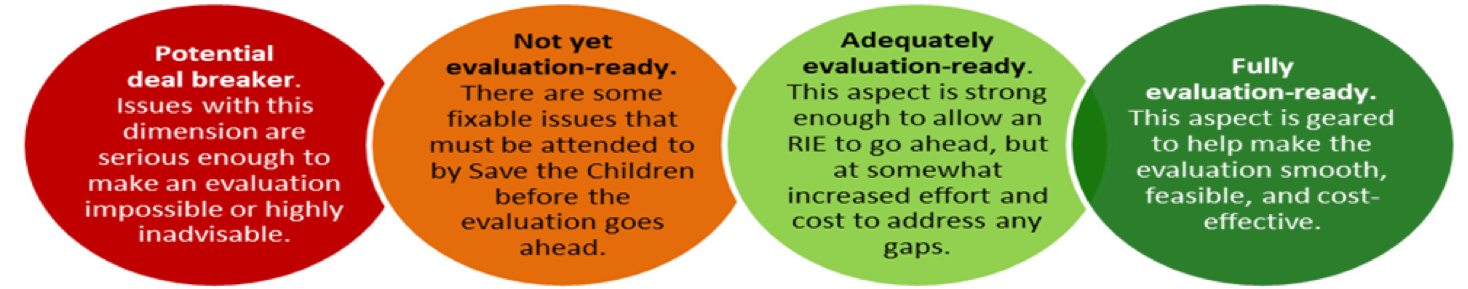

With invaluable consultation from E. Jane Davidson (RealEvaluation) and Thomaz Chianca (independent consultant), we developed an RIE Evaluability Scoping Guide to assess the feasibility of conducting an RIE. The RIE Scoping Guide assesses 24 issues categorized into the following four dimensions: 1) Internal Stakeholder Support, 2) External Stakeholder Support, 3) Availability of Evidence & Documentation, and 4) Context. After program staff review, discuss, and score each of the 24 issues, a tally of the scores indicates which of the four feasibility categories best describes the feasibility of an RIE.
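For readers who want to see the tallying step in concrete terms, here is a minimal Python sketch of how scores across the four dimensions might be summed and mapped to a feasibility category. It is an illustration only, not the Scoping Guide itself: the 0-3 scale per issue, the cutoff percentages, and the function names `tally` and `feasibility_category` are all assumptions made for this example, and the guide's actual scoring rules may differ.

```python
from typing import Dict, List

# The four dimensions named in the Scoping Guide.
DIMENSIONS = (
    "Internal Stakeholder Support",
    "External Stakeholder Support",
    "Availability of Evidence & Documentation",
    "Context",
)
TOTAL_ISSUES = 24          # stated in the post
MAX_SCORE_PER_ISSUE = 3    # ASSUMPTION: hypothetical 0-3 scale per issue

# ASSUMPTION: hypothetical cutoffs, expressed as a share of the maximum tally.
CATEGORY_CUTOFFS = [
    (0.75, "Fully Evaluation Ready"),
    (0.50, "Adequately Evaluation Ready"),
    (0.25, "Not Yet Evaluation Ready"),
    (0.00, "Potential Deal Breaker"),
]


def tally(scores: Dict[str, List[int]]) -> int:
    """Validate the scores and return their sum across all dimensions."""
    unknown = set(scores) - set(DIMENSIONS)
    if unknown:
        raise ValueError(f"Unknown dimensions: {sorted(unknown)}")
    all_scores = [s for dim in DIMENSIONS for s in scores.get(dim, [])]
    if len(all_scores) != TOTAL_ISSUES:
        raise ValueError(f"Expected {TOTAL_ISSUES} scores, got {len(all_scores)}")
    if any(s < 0 or s > MAX_SCORE_PER_ISSUE for s in all_scores):
        raise ValueError(f"Scores must be between 0 and {MAX_SCORE_PER_ISSUE}")
    return sum(all_scores)


def feasibility_category(scores: Dict[str, List[int]]) -> str:
    """Map the tally to one of the four feasibility categories."""
    share = tally(scores) / (TOTAL_ISSUES * MAX_SCORE_PER_ISSUE)
    for cutoff, label in CATEGORY_CUTOFFS:
        if share >= cutoff:
            return label
    return CATEGORY_CUTOFFS[-1][1]


# Example: six issues per dimension (an assumed split), each scored 2.
example_scores = {dim: [2] * 6 for dim in DIMENSIONS}
print(tally(example_scores), feasibility_category(example_scores))
```

Keeping the cutoffs in a single table makes it easy for a team to swap in whatever thresholds they actually agree on.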

RIE Feasibility Categories

To date, five sponsorship programs, each implemented for 10 years and ended 5 to 9 years ago in Bangladesh, Nepal, and Ethiopia, have been assessed. All five programs are either “Adequately Evaluation Ready” or “Fully Evaluation Ready”; therefore, in the next year SC plans to commission at least one RIE. The RIE Scoping Guide will be presented during a demonstration session at the AEA 2018 annual meeting in Cleveland, Ohio, October 28 – November 3.

Lessons Learned:

- To avoid confusion, be clear with program staff that an evaluability assessment of a program is NOT an evaluation of a program.

- For program staff to clearly assess the evaluability issues under the four dimensions, each issue should have a short description and a set of questions to be answered.

Hot Tips:

- Having specific dimensions and issues that are critical for an RIE to be feasible after a program has ended allows staff to incorporate these issues into the program from the beginning.

- SC has established an optimal window of 5-10 years after completion of a program to conduct an RIE: long enough for longer-term impacts to occur, but not so long that confounding factors multiply.

Rad Resources:

- Davies, Rick. (2013). Planning Evaluability Assessments: A Synthesis of the Literature with UK Department for International Development.

- Dunn, Elizabeth. (2008). Planning for cost effective evaluation with evaluability assessment. Impact Assessment Primer Series, Publication #6, United States Agency for International Development (USAID).

- Khatiwada, Lila Kumar. (2017). Implementing a Post-Project Sustainability Study (PSS) of a Development Project: Lessons Learned from Indonesia. Reconsidering Development. Vol 5 No 1.

- Peersman, Greet, Irene Guijt & Tiina Pasanen. (2015). Evaluability Assessment for Impact A Methods Lab Publication. ODI.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

I am enrolled in the Professional Master’s of Education program at Queen’s University in Kingston, Ontario. I am currently taking a program evaluation course, and one of the requirements is to connect with an AEA365 article. I have chosen your article on retrospective impact evaluation.

I am new to program evaluation, and I am quite intrigued by the process surrounding program design and evaluation.

I find that I am drawn to the end results, the short- and long-term outcomes of program evaluation. This is what I find fascinating, and it is why I chose your article to respond to.

I do have a few questions from reading your article about the implementation of this type of evaluation.

Because the program ended years earlier and the focus is now on observing the impacts that have actually occurred, one would need to truly understand the program being examined.

I would hope that all retrospective impact evaluations are positive in showing that the program had integrity and success with all the participants and sponsors. I would also hope that the findings are all positive and show that the program design, decision-making processes, and overall outcomes were effective as well.

The “quick” scoping guide to analyze the data collection is readily available and comprehensible. The evaluation time frame is reduced by using the scoping guide as a mini-rubric to determine what the results are. The scoping guide is organized into four categories: potential deal breaker, not yet evaluation-ready, adequately evaluation-ready, and fully evaluation-ready. The overall score places a program into one of these four categories, which run essentially from worst to best. I would think that it would be hard not to change any inconsistencies that are found. I assume it is expected that unforeseen events will arise and may not come to light until years later.

This would bring up new questions about why the program had weaknesses and strengths. Would it be possible in the future to consider using the data from the retrospective impact evaluation to go back and reorganize the program if it was not scoring high in a given area?

I see this as a process to fill in the final gaps to display the quality of the program. The methods provide useful tools for evaluating long-running programs in which the findings could add to the concept of long-term sustainability of the outcomes.

Retrospective impact evaluation would entail having an open mindset and a readiness to accept the findings. Overall, I think patience, honesty, and integrity will be needed. After all, one is re-examining existing information, finding new information, and checking whether they connect across the program from start to finish and in the years afterward.

I also see this as a method that is not meant to create new problems. I like how the program outcomes are categorized into four areas of retrospective impact evaluation need. This would eliminate the need to observe each and every program and allow a focus on the ones that raise new interests, where new ideas offer an opportunity to go back and revisit an idea or issue. This will bring up new thoughts on, and maybe challenge, the current views of the program. I see this as a challenging task, as one would have to truly understand the program being viewed.

The idea is to look at what happened in the past and trace it back to its origins, which would take keen vision. One would have to envision the individuals in the program and how they were impacted in the end. Again, these findings will be able to identify the benefits of the program to ensure future integrity. I would think the hardest part would be not being able to go back and revamp the program using the findings.

Questions:

If the program ended within the time frame and is now ready for a retrospective impact evaluation, are the data still relevant?

Since retrospective impact evaluation is meant to build on information that already exists, are there biases associated with it?

Is it necessary to focus on all long-term outcomes in the program?

Thank you for an insightful article on retrospective impact evaluation.

Joanne Tremblay