Hello, AEA365 community! Liz DiLuzio here, Lead Curator of the blog. This week is Individuals Week, which means we take a break from our themed weeks and spotlight the Hot Tips, Cool Tricks, Rad Resources and Lessons Learned from any evaluator interested in sharing. Would you like to contribute to future individuals weeks? Email me at AEA365@eval.org with an idea or a draft and we will make it happen.

Hello. From 2016 to 2023, Sharon Twitty, Natalie Lenhart, and I (Paul St Roseman) implemented an evaluation design that incorporates empowerment evaluation, developmental evaluation, and evaluation capacity building. This blog series, which we call Walking Our Talk, presents the process in four parts:

- Laying the Foundation – The Rise of The Logic Model

- Using the Data – Emerging a Data Informed Evaluation Design through Peer Editing

- New Insights – Emerging A Collaborative Diagnostic Tool and Data Visualization

- Charting the Course of Data Use – Collaborative Data Analysis

Today’s post is Part 1 in the series.

The Context

In 2005, The Alliance for Regional Collaboration to Heighten Educational Success (ARCHES) was established in California as a statewide voluntary confederation of regional collaboratives. Over the years, it provided technical assistance to alliance members. In 2016, ARCHES decided to refocus its services. Sharon Twitty (Executive Director, ARCHES) and I have worked to respond to this new charge by helping to develop evaluation products and systems that support the work of the Regional Intersegmental Collaboratives.

This first entry in the series describes how ARCHES collaboratively developed a logic model as it refocused the services provided to its members. We used a three-step approach to develop the logic model.

Step 1: Collaborative Working Group (Document Review)

A project team was organized to create a working draft of the logic model. This was necessary given the number of documents requiring review. It was also a useful way to efficiently “prime the pump” for the larger group of leaders.

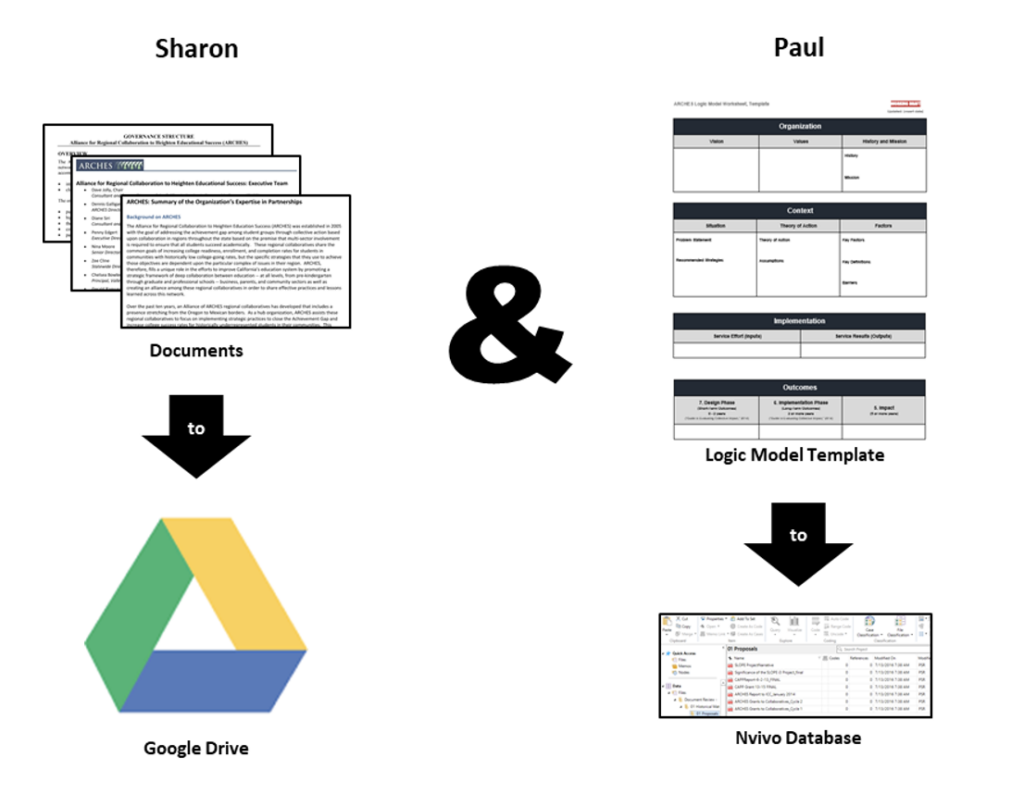

Sharon organized all program files and placed them on Google Drive. At the same time, I developed a logic model table in Google Docs and an NVivo database to code and analyze the documents.

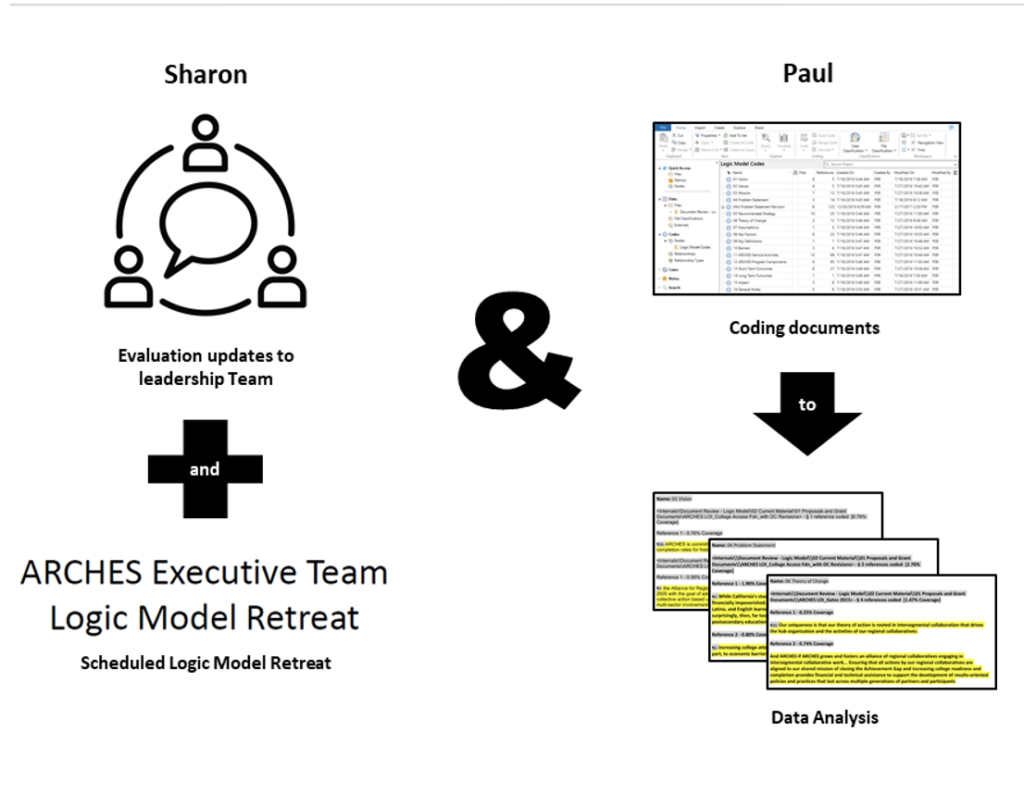

While I completed the initial coding and analysis of the documents, Sharon kept the leadership updated on the project. She highlighted the importance of the upcoming logic model retreat and included an evaluation update on all Executive Team meeting agendas.

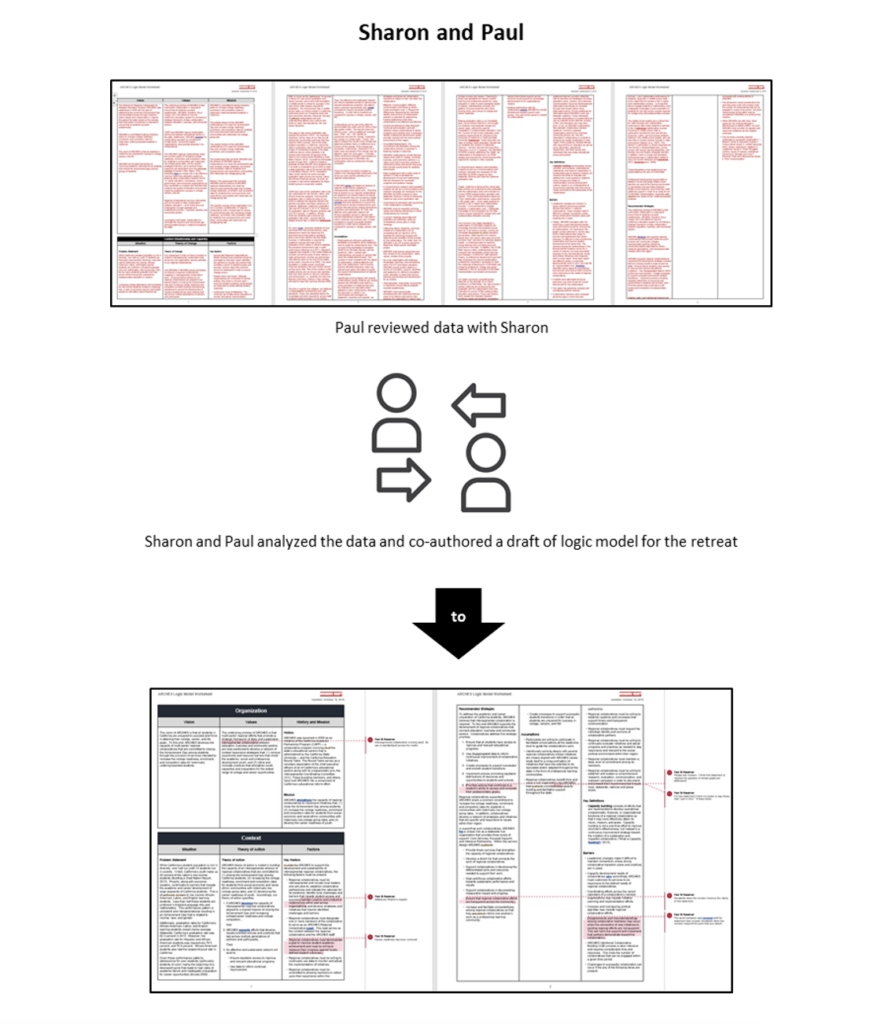

Once the coding process was complete, the data were copied to the logic model table. Sharon and I reviewed the content and used a peer editing approach to draft the logic model. I would synthesize and summarize the data, while Sharon would review and reframe the content to fit the ARCHES service priorities. Through this process, the first draft of the logic model was created.

Step 2: Collaborative Logic Model Retreat (Retreat)

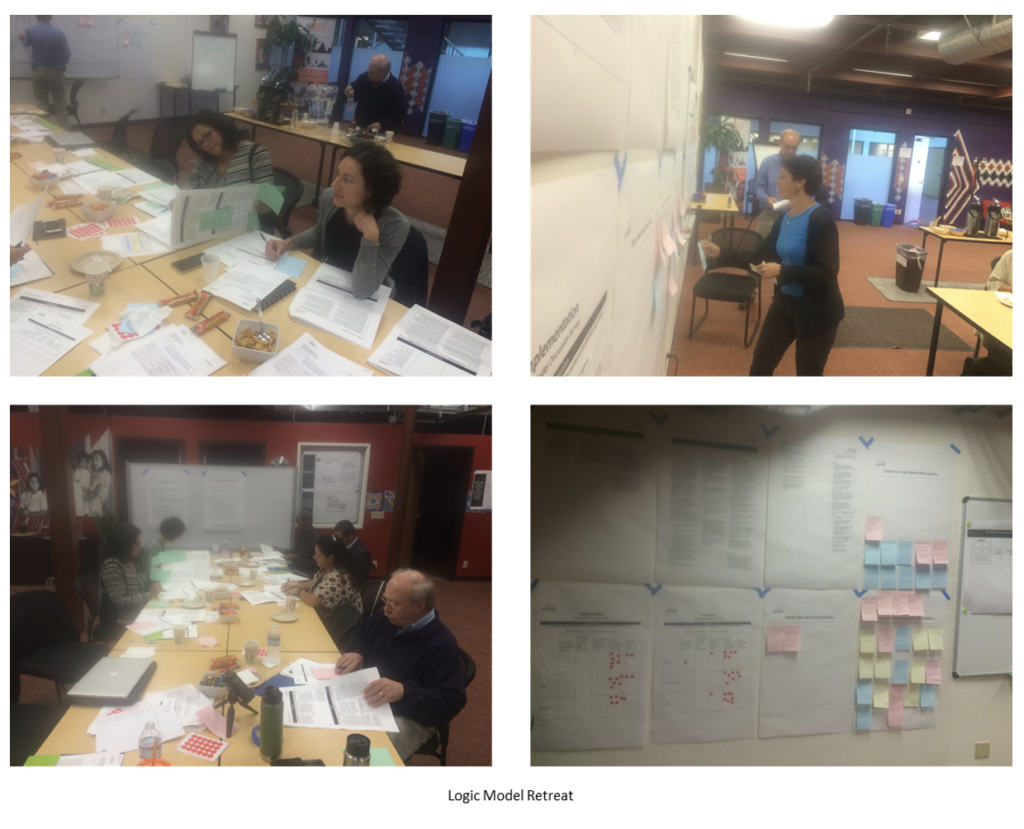

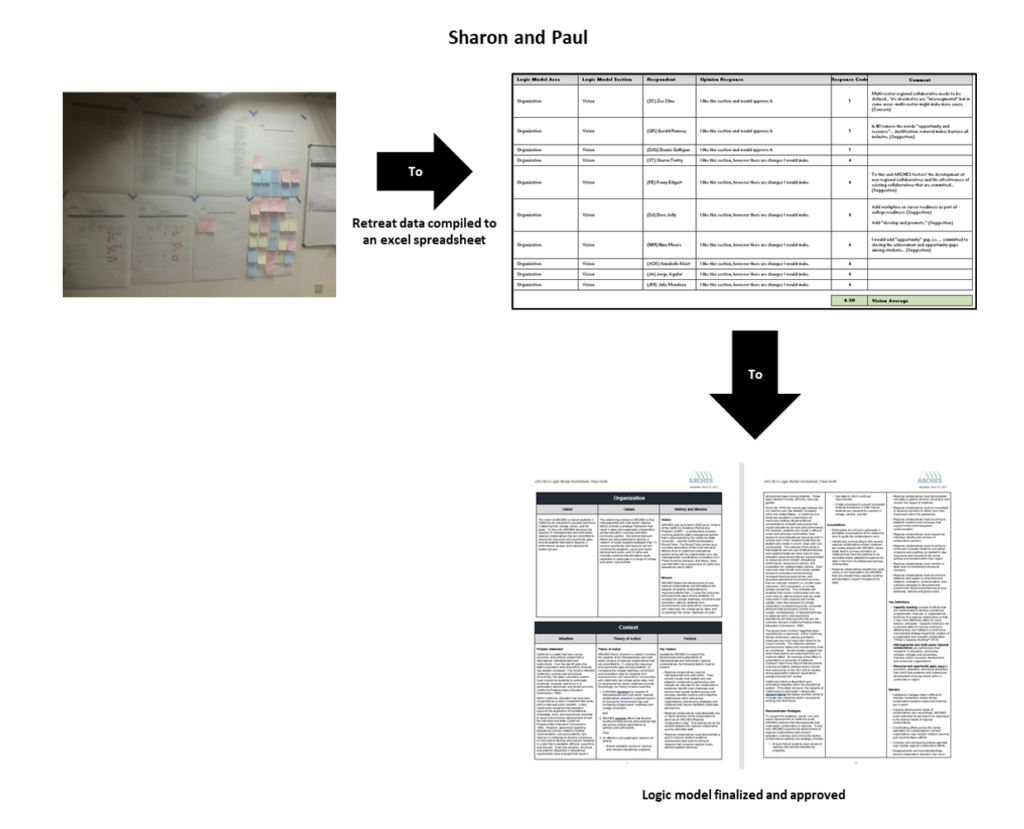

ARCHES hosted a day-and-a-half Logic Model Retreat for its executive leaders to critically reflect on the draft logic model. Participants reviewed and critiqued the logic model using a discussion survey that framed and standardized their analysis. Their feedback was captured on Post-it notes that were placed on poster-size survey worksheets.

Step 3: Collaborative Working Group Revisited (Enter Data, Final Analysis, and Approval)

After the retreat, the project team entered participant comments into an Excel spreadsheet and revised the draft logic model accordingly. The revision was submitted to leadership for review and approval. Leadership suggested additional revisions, the project team made them, and the final draft of the logic model was approved.

Lesson Learned: Collaborative Decision-Making Supports Consensus Development

The Logic Model Retreat helped ARCHES leadership develop consensus as they refocused services. It also modeled how evaluation can efficiently support collaborative decision making that prioritizes communication, critical deliberation, and the co-development of documents. This collaborative approach set a foundation for co-developing the evaluation design.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.