Hello, I’m Susanna Dilliplane, Deputy Director of the Aspen Institute’s Aspen Planning and Evaluation Program. Like many others, we wrestle with the challenge of evaluating complex and dynamic advocacy initiatives. Advocates often adjust their approach to achieving social or policy change in response to new information or changes in context, evolving their strategy as they build relationships and gather intel on what is working or not. How can evaluations be designed to “keep up” with a moving target?

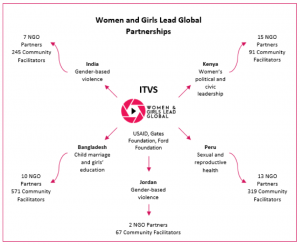

Here are some lessons learned during our five-year effort to keep up with Women and Girls Lead Global (WGLG), a women’s empowerment campaign launched by Independent Television Service (ITVS).

Through a sprawling partnership model, ITVS aimed to develop, test, and refine community engagement strategies in five countries, with the expectation that strategies would evolve, responding to feedback, challenges, and opportunities. Although ITVS did not set out with Adaptive Management specifically in mind, the campaign incorporated characteristics typical of this framework, including a flexible, exploratory approach with sequential testing of engagement strategies and an emphasis on feedback loops and course-correction.

Lessons Learned:

- Integrate M&E into frequent feedback loops. Monthly data reviews helped ITVS stay connected with partner activities on the ground. For example, we reviewed partner reports on community film screenings to help ITVS identify what was working well or less well in different contexts and apply those insights. Regular check-ins to discuss progress also helped ensure that a “dynamic” or “adaptive” approach did not devolve into a proliferation of disparate activities with unclear connections to the campaign’s theory of change and objectives.

- Be iterative. An iterative approach to data collection and reporting allowed ITVS to accumulate knowledge about how best to effect change. It also enabled us to adjust our methods and tools to keep data collection aligned with the evolving theory of change and campaign activities.

- Tech tools have timing trade-offs. Mobile phone-based tools can be handy for adaptive campaigns. We experimented with ODK, CommCare, and Viamo. Data arrive more or less in “real time,” enabling continuous monitoring and timely analysis. But considerable time is needed upfront for piloting and user training.

- Don’t let the evaluation tail wag the campaign dog. The desire for “rigorous” data on impact can run counter to an adaptive approach. For example, baseline data we collected for a quasi-experiment informed significant adjustments in campaign strategy, rendering much of that baseline data irrelevant for assessing impact later on. We learned to let some data go when the campaign moved in new directions, and to apply a quasi-experiment more strategically: only when we – and NGO partners – could approximate the level of control this design requires.

Establishing a shared vision among stakeholders (including funders) of what an adaptive campaign and evaluation look like can help avoid situations where the evaluation objectives supersede the campaign’s ability to efficiently and effectively adapt.

Rad Resources: Check out BetterEvaluation’s thoughtful discussion and list of resources on evaluation, learning, and adaptation.

The American Evaluation Association is celebrating APC TIG Week with our colleagues in the Advocacy and Policy Change Topical Interest Group. The contributions all this week to aea365 come from our APC TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.