Deborah Wasserman, Kit Klein, Carey Tisdal, and Priya Mohabir are Co-PIs on Roads Taken: A Retrospective Study of Program Strategies and Long-term Impacts of Intensive, Multi-year, STEM Youth Programs (NSF AISL #1906396). Deborah is a senior researcher with COSI's Center for Research and Evaluation in Columbus, OH; Kit and Carey, both independent contractors, have been evaluating Youth ALIVE! programs since their inception more than 25 years ago; and Priya, a Youth ALIVE! alumna, is Senior Vice President of Youth Development and Museum Culture at the New York Hall of Science.

Where are they now? Or where will they be ten years from now? And what did this program have to do with getting them there? (Rather than alumni or alumnae, we refer to these people as alums, the term we’ve come to use so we don’t make assumptions about gender.)

Those are the questions most of us ask, even informally, if we’re involved with youth program evaluation. Answering those questions, as it turns out, is quite challenging.

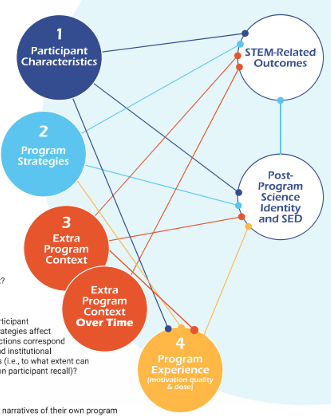

Sure, there are wonderful methodologies – I find contribution analysis one of the most promising. And in that spirit, we at COSI’s Center for Research and Evaluation created an instrument that measures relative influence over time. But instruments aren’t enough. Evaluators looking for a long-term understanding of outcomes and their influences probably need to come together and join forces to create the resources we need.

At the AEA conference this year, we ran a think tank to answer the question: what are those resources? While we don’t yet have the think-tank results, I can share in this post six areas of resources a joint effort could build. We learned these through our efforts to follow up with 2,200 people who participated in the Youth ALIVE! (Youth Achievement through Learning, Involvement, Volunteering, and Employment) Initiative 15 to 25 years ago. This effort, Roads Taken (funded by NSF AISL #1906396), has taught us some important lessons.

Lessons Learned

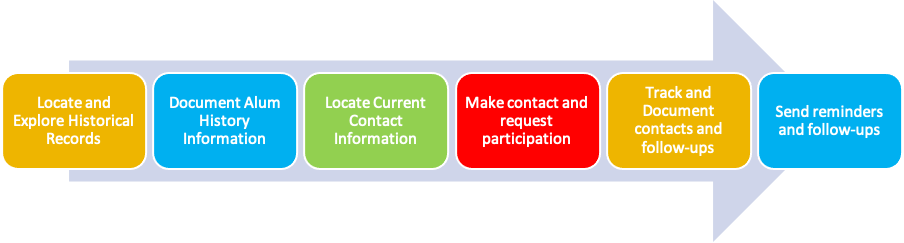

Roads Taken has involved six Youth ALIVE! programs that were running in 1995, are still running today in some form, and whose administrators believed they had participant records from back then. Some did. In other cases, as it turned out, “records” consisted only of lists of youth names, nothing more. Youth ALIVE! involved intensive (at least 100 hours of participation per year), multi-year, multi-faceted programs that engaged young people in learning about science and working with the public at their respective informal STEM learning institutions. We contracted with each sponsoring museum to hire a program alum or staff member as a liaison. The liaison’s job was to sift through historical records, document alum information, locate current contact information, contact each alum with a request to complete our questionnaire, track and follow up on contacts and completions, and then send reminders and thank-yous.

This complicated and time-consuming process produced only 216 responses, roughly 10% of the 2,200 participants we set out to follow. That is enough to conduct some very interesting analyses (results coming soon!) but certainly far short of our aims. Our lesson? DON’T LOSE TOUCH!

Based on what we’ve learned, along with some “Cadillac” models that have been emerging (the NYC SRM Consortium and the STEM PUSH Network, to name a few), we are now considering creating a national network with the following six elements:

- Alumnae/i Involvement (Alum Benefits and Program Benefits): If we want to find out what happens to alums after they leave youth programs, we need to maintain contact. That means we need to create reciprocal benefit – networks that provide program graduates with connections and resources that make it worth their while.

- Tracking Alumnae/i Whereabouts: Then, we need to track their whereabouts so that if they drop from active involvement in the network, there’s still a good chance of finding them. These data might include social network handles, email addresses, snail mail addresses, etc.

- Tracking Networks (Who Knows Who): In addition, we’ve found it’s important to keep records of network activity and to track who stays in touch with whom.

- Tracking Education, Careers, and Other Goals: Then, of course, there are the data that will answer the questions long-term follow-up studies may ask in the future. What are alums’ academic and career goals? What influences them? What steps are they taking toward meeting those goals?

- Tracking Supports and Barriers Toward Goals: Just as important as tracking what these alums do is tracking the supports and barriers that influence their trajectories.

- Program Data (Years, Dose, Etc.): And finally, in addition to tracking what actually happened during the program, it might be important to understand what affects how alums value those experiences. For example, perhaps a particular opportunity helps someone recognize that they are drawing on their high school experience in a way they hadn’t before. The value placed on a high school experience can ebb and flow over a lifetime.
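
To make the six elements a little more concrete, here is a minimal sketch of what a single shared alum record might look like, written in Python. Nothing here comes from Roads Taken itself: the class name, every field name, the `is_reachable` rule, the `pool_records` helper, and the sample records are all hypothetical, offered only to illustrate how the six elements could sit side by side in one structure that several programs fill in the same way.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AlumRecord:
    """One alum's entry in a shared dataset. All field names are hypothetical."""
    alum_id: str
    program: str
    # Program data: years attended and hours ("dose") per year
    years_in_program: List[int] = field(default_factory=list)
    hours_per_year: Dict[int, float] = field(default_factory=dict)
    # Alum involvement: network activities the alum has taken part in
    network_activities: List[str] = field(default_factory=list)
    # Whereabouts: contact channel -> value (email, postal address, handle)
    contacts: Dict[str, str] = field(default_factory=dict)
    # Networks: ids of other alums this person stays in touch with
    in_touch_with: List[str] = field(default_factory=list)
    # Education, careers, and other goals
    goals: List[str] = field(default_factory=list)
    # Supports and barriers toward those goals
    supports: List[str] = field(default_factory=list)
    barriers: List[str] = field(default_factory=list)

    def is_reachable(self) -> bool:
        """Assumed rule: reachable directly or through a peer who knows them."""
        return bool(self.contacts) or bool(self.in_touch_with)


def pool_records(*program_rosters: List[AlumRecord]) -> List[AlumRecord]:
    """Combine rosters from several programs into one list of records."""
    pooled: List[AlumRecord] = []
    for roster in program_rosters:
        pooled.extend(roster)
    return pooled


# Made-up example records, for illustration only.
museum_a = [AlumRecord("a-001", "Museum A", contacts={"email": "alum@example.org"})]
museum_b = [
    AlumRecord("b-001", "Museum B"),  # a name on a list and nothing more
    AlumRecord("b-002", "Museum B", in_touch_with=["a-001"]),
]

pooled = pool_records(museum_a, museum_b)
reachable = [record.alum_id for record in pooled if record.is_reachable()]
```

In this sketch, a record that holds only a name (like `b-001`) is exactly the kind we struggled to follow up on, while a record with either contact information or a known peer still offers a path back to the alum.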

Is it possible to combine these elements into a single, national network and database from which evaluators can combine efforts across programs, creating datasets large enough to yield generalizable results? That’s the next question we seek to answer. Please do contact me if you’re interested in joining with others in this important work.

The American Evaluation Association is celebrating Youth Focused Evaluation (YFE) TIG Week with our colleagues in the YFE Topical Interest Group. The contributions all this week to aea365 come from our YFE TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.