Hello, AEA365 community! Liz DiLuzio here, Lead Curator of the blog. This week is Individuals Week, which means we take a break from our themed weeks and spotlight the Hot Tips, Cool Tricks, Rad Resources and Lessons Learned from any evaluator interested in sharing. Would you like to contribute to future Individuals Weeks? Email me at AEA365@eval.org with an idea or a draft and we will make it happen.

Hello, AEA365 community! We are G.M. Shah (Principal Evaluation Specialist) and Farid Ahmad (Chief, Strategic Planning, Monitoring, Evaluation and Learning). We both work at the International Centre for Integrated Mountain Development (ICIMOD), a regional intergovernmental knowledge organization serving eight Regional Member Countries (RMCs) of the Hindu Kush Himalaya (HKH) region. Our headquarters is in Kathmandu, Nepal.

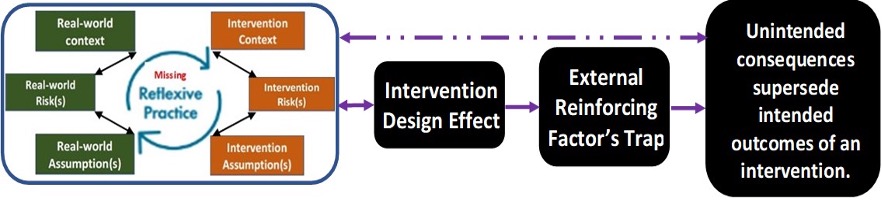

In today’s blog we introduce two new terms and concepts: the ‘Intervention Design Effect’ (IDE) and the ‘External Reinforcing Factor’s Trap’ (ERFT). We believe these terms are not only new but also innovative with respect to programme design, evaluation, and learning, and that ‘evaluation science’ has so far overlooked these critically important concepts. This post is the first time we are coining them.

The intervention design effect measures the degree to which the design of an intervention affects the variability of its intended outcomes, beyond what can be attributed to external sources of variation. The more poorly an intervention is designed, planned, or executed, the more its effects are distracted from the intended outcomes.

The underlying principle is this: when designing an intervention, it is fundamental to identify, challenge, and explicitly define the assumptions and risks arising from both the internal and external contexts of the intervention. Identifying and challenging these assumptions helps ensure that the intervention is realistic and relevant to the context in which it is implemented, which in turn increases the likelihood that it will achieve its intended outcomes. Explicitly defining the assumptions is also important: it enables stakeholders to understand the rationale behind the intervention and how it is expected to work, and it helps ensure that everyone involved shares the same understanding of it. Finally, these assumptions must be tested continually throughout implementation. Doing so allows adjustments to be made as needed and new challenges or barriers to be identified and addressed, so that the intervention remains adaptive and responsive to changing needs and circumstances.

Lessons Learned:

Poorly designed interventions easily become susceptible to IDE. Investigating and better understanding IDE is critically important for programme design, planning, implementation, monitoring, evaluation, and learning in dynamic settings. It also helps optimise intervention design and maximises the likelihood of detecting potential distracting effects on an intervention. Underestimating or ignoring IDE pushes an intervention into an External Reinforcing Factor’s Trap (ERFT), in which its unintended consequences supersede its intended outcomes.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.

Thank you, Mazhar. Time to broaden the horizon of assumptions in evaluation. Carol Weiss’s ‘effective program designs’ fundamentally lays the foundation. However, it is limited in scope!

Excellent contribution to the field of project design, implementation and MEAL. IDE and ERFT are novel constructs for me. Thanks!