We are internal evaluators Cath Kane, of Weill Cornell Clinical & Translational Science Center, and Jan Hogle, at the University of Wisconsin-Madison Institute for Clinical & Translational Research. The 62 Clinical and Translational Science Award (CTSA) hubs — along with community-based and industry partners — collaborate to advance translational research from scientific discoveries to improved patient care by providing academic homes, funding, services, resources, tools, and training.

Lesson Learned: How do CTSA hubs define “translational?” Translation is the process of turning observations from the laboratory, clinic, and/or community into interventions that improve individual and public health. Translational science focuses on understanding the principles underlying each step of that process.

Hot Tip: Ask “What are we evaluating?” Internal evaluators determine whether programs are efficiently managed, effective in meeting objectives, and ultimately impacting the process and quality of biomedical research — involving multiple variables in complex systems. Case studies use qualitative data to complement quantitative data on research and training productivity. Analysis of multiple case studies identifies factors that facilitate or impede successful translation.

Retrospective or prospective? Retrospective analyses use an abundance of data to deeply study known successes. Prospective case studies can identify factors that influence translation in real time. Questions might include:

- What does ‘successful translation’ mean?

- Which operational process markers are most important?

- What are the ideal duration metrics?

- How do collaboration and the individual CTSA hub move translation along over time?

- How can we better support the translational process?

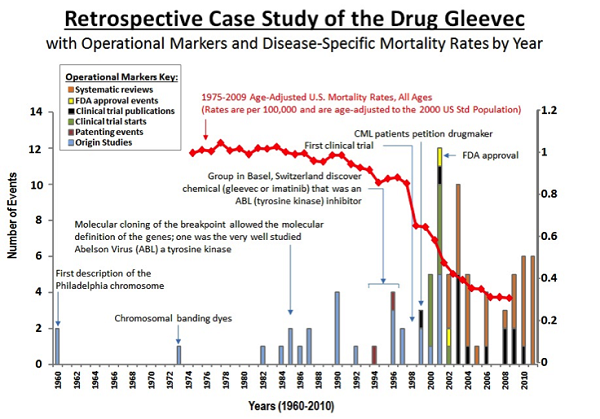

The retrospective case study below tells a story of key operational markers in the development of Gleevec, used to treat leukemia. Public data such as publications, FDA approval and patenting events, and mortality data were overlaid with interview data from key informants.
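For teams that want to try this kind of overlay themselves, the sketch below shows one possible way to merge dated markers from public records and interviews into a single timeline and compute the time elapsed between consecutive markers. It is a minimal illustration, not the method used for the Gleevec case study: the `Marker` class, the function names, and every date and label are hypothetical placeholders.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Marker:
    """One dated event on a case-study timeline (hypothetical structure)."""
    when: date
    source: str  # e.g. "publication", "patent", "FDA", "interview"
    label: str


def build_timeline(*sources):
    """Merge markers from several sources into one chronological list."""
    merged = [marker for source in sources for marker in source]
    return sorted(merged, key=lambda marker: marker.when)


def durations_in_days(timeline):
    """Days elapsed between each pair of consecutive markers."""
    return [
        (earlier.label, later.label, (later.when - earlier.when).days)
        for earlier, later in zip(timeline, timeline[1:])
    ]


# Placeholder data only: these dates and labels are invented for illustration
# and do not describe any real drug or study.
public_records = [
    Marker(date(2000, 1, 1), "publication", "first paper (placeholder)"),
    Marker(date(2003, 1, 1), "FDA", "approval (placeholder)"),
]
interviews = [
    Marker(date(2001, 7, 1), "interview", "collaboration formed (placeholder)"),
]

timeline = build_timeline(public_records, interviews)
for start, end, days in durations_in_days(timeline):
    print(f"{start} -> {end}: {days} days")
```

Keeping the `source` field on each marker makes it easy to see which moments surfaced only through interviews, which is often where the insider's view adds the most to the public record.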

Lessons learned:


- An individual face-to-face interview is indispensable for obtaining an insider’s view of the research process.

- Record the interview and take notes. Often the research details are unfamiliar to the interviewer. Hand-written notes alone may not capture the interview accurately.

- Ask investigators for their interpretation of “translation.” People have different ideas about the meaning of translation. What are the key moments or markers in translation?

- Case studies can serve many needs, from short summary “stories” for public relations and newsletters to more rigorous approaches that pair them with quantitative data to inform decisions by internal and external stakeholders.

Perhaps the most important lesson learned is the value CTSA evaluation teams bring to their hubs. That value lies not only in long-term objectives but also in the shorter term: evaluators contribute daily to programming adjustments and course corrections, using a mixed-methods approach to understand complex change.

The American Evaluation Association is celebrating Translational Research Evaluation (TRE) TIG week. All posts this week are contributed by members of the TRE Topical Interest Group. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.