Hi! We are Allison Prieur, Kari Ross Nelson, and Michael Harnar. Allison and Kari are PhD students who took a virtual Foundations of Evaluation course taught by Michael in the fall of 2021. The course was designed to help advanced students learn evaluation theory. During the course, we participated in a role-play activity, inspired by an activity Christina Christie used in Michael’s graduate education at Claremont.

For the activity, students were divided into three groups. Each group was assigned a scholar, one from each of the branches of Alkin and Christie’s Theory Tree (methods, valuing, and use). Each group prepared to embody their scholar and speak from their perspective. A debate component also required students to understand the perspectives of the other groups’ scholars so that they could ask and respond to questions.

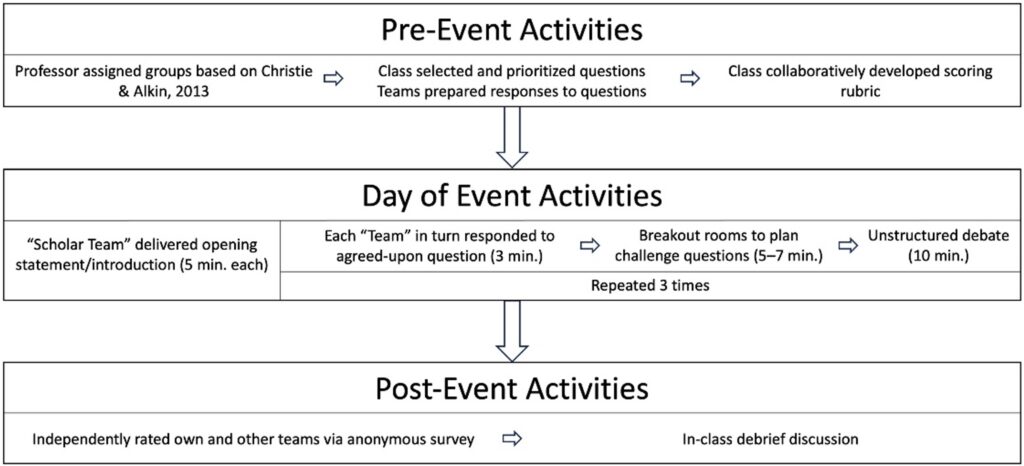

In the weeks leading up to the activity, we used class time to determine questions to be addressed. On the “day-of,” the class began with participants delivering prepared introductions, speaking in first-person as their scholar about their background and theories of evaluation. These introductions were followed by three cycles of “debate”: addressing the predetermined questions, strategizing challenge questions in group breakout rooms, and posing and responding to the challenge questions. Michael served as moderator and timekeeper. The accompanying figure shows the pre-, day-of, and post-event activities.

With class permission, Michael recorded the activity. He invited students to participate in a research project about the experience. Kari and Allison volunteered. We hoped to answer two questions: Is there evidence to support the use of a role-play to develop knowledge of evaluation theories by graduate students in evaluation? And, what do different levels of cognitive complexity look like in graduate students’ knowledge of evaluation scholars?

We chose collaborative autoethnography (CAE), which let us build on our shared experience of participating in the activity, and we incorporated a taxonomy to help us understand student learning through the activity. We used Biggs & Collis’ Structure of the Observed Learning Outcome (SOLO) taxonomy because it helped us look for evidence of advanced levels of cognitive complexity demonstrated through the role-play activity.

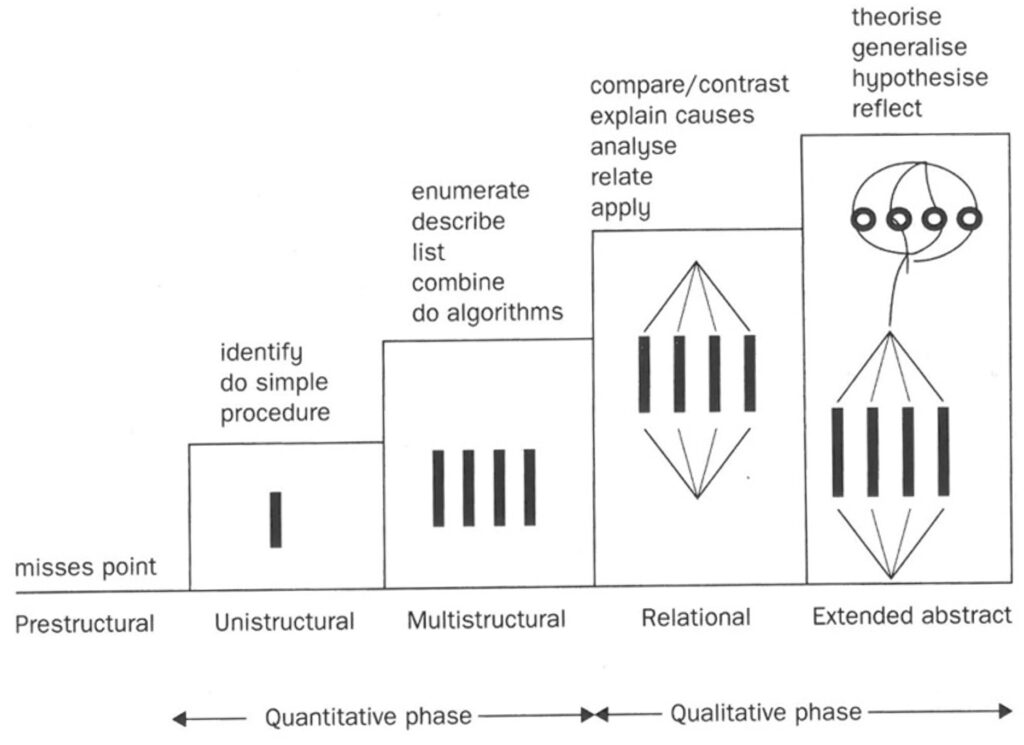

We transcribed the recording using otter.ai and coded the transcript one section at a time using the taxonomy as a coding framework. The SOLO taxonomy, pictured above, represents five levels of cognitive complexity: prestructural, unistructural, multistructural, relational, and extended abstract. We looked for evidence of each level, collaboratively developing definitions and inclusion/exclusion criteria for each. We coded at the response level, rather than at the sentence or phrase level, because we realized that some responses built from unistructural or multistructural into relational or extended abstract. Considering the entirety of the response provided evidence for the complete thought process rather than small components of it.

We found that the majority of responses were at the multistructural and relational levels. Generally, students demonstrated higher cognitive complexity in the debate-style challenge questions and responses than in their answers to the predetermined questions. The challenge responses, in particular, showed the greatest cognitive complexity.
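For readers who like to see the bookkeeping, here is a minimal Python sketch of how response-level codes like ours could be tallied by phase of the activity. It is an illustration only, not the procedure we actually used: the phase labels, the helper names `tally_by_phase` and `modal_level`, and the example codes are all hypothetical, and the placeholder codes are made up rather than taken from our transcript.

```python
from collections import Counter
from enum import IntEnum


class SoloLevel(IntEnum):
    """The five SOLO levels, ordered from least to most complex."""
    PRESTRUCTURAL = 1
    UNISTRUCTURAL = 2
    MULTISTRUCTURAL = 3
    RELATIONAL = 4
    EXTENDED_ABSTRACT = 5


def tally_by_phase(coded_responses):
    """Count how many responses in each phase received each SOLO level.

    coded_responses: iterable of (phase, SoloLevel) pairs, one per response,
    mirroring the choice to code whole responses rather than sentences.
    Returns a dict mapping phase -> Counter of SoloLevel.
    """
    tallies = {}
    for phase, level in coded_responses:
        tallies.setdefault(phase, Counter())[level] += 1
    return tallies


def modal_level(counter):
    """Return the most frequent level; ties go to the higher level (an assumption)."""
    return max(counter, key=lambda level: (counter[level], level))


# Made-up placeholder codes for illustration, NOT data from the study.
example = [
    ("predetermined question", SoloLevel.MULTISTRUCTURAL),
    ("predetermined question", SoloLevel.UNISTRUCTURAL),
    ("predetermined question", SoloLevel.MULTISTRUCTURAL),
    ("challenge response", SoloLevel.RELATIONAL),
    ("challenge response", SoloLevel.EXTENDED_ABSTRACT),
    ("challenge response", SoloLevel.RELATIONAL),
]

tallies = tally_by_phase(example)
for phase, counts in tallies.items():
    print(phase, "->", modal_level(counts).name)
```

Using an ordered enumeration keeps the levels comparable (relational ranks above multistructural), which makes it easy to ask which phase drew out the more complex responses. The judgment about which level a response belongs to still has to come from the coders and their agreed definitions.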

The debate-style role-play activity was a useful way to help graduate students develop knowledge of evaluation scholars and theories. Additionally, it challenged them to demonstrate their understanding of relationships, as well as their ability to generalize, aligning with the relational and extended abstract levels of the SOLO taxonomy.

Rad Resources

- Check out our recent article for more on the activity and our research findings

- Clinton & Hattie’s work on the cognitive complexity of evaluator competencies

- Alkin & Christie’s description of role-play for experiential learning

The American Evaluation Association is hosting Teaching of Evaluation TIG week. All posts this week are contributed by members of the ToE Topical Interest Group. AEA365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.