I’m Bethany Laursen, an independent consultant and evaluation specialist for several units at the University of Wisconsin. I fell in love with social network analysis (SNA) as a graduate student because SNA gave me words and pictures to describe how I think. Many evaluators can relate to my story, but one of the challenges to using SNA in evaluation is identifying what counts as a “good” network structure.

Hot Tip: Identify keywords in your evaluation question(s) that tell you what counts as a “good” outcome or goal for your evaluand. An example evaluation question might be: “How can we improve the collaboration capacity of our coalition?” The stated goal is “collaboration capacity.”

Hot Tip: Use published literature to specify which network structure(s) promote that goal. Social networks are complex, requiring rigorous research to understand which functions emerge from different structures. It would be unethical for an evaluator to guess. Fortunately, a lot has been published in journals and in gray and white papers.

Continuing our example, we need to research what kinds of networks foster “collaboration capacity” so we can compare our coalition’s network to this standard and find areas for improvement. You may find a robust definition of “collaboration capacity” in the literature, but if you don’t, you will have to specify what “collaboration capacity” looks like in your coalition. Perhaps you settle on “timely exchange of resources.” Now, what does the literature say about which kinds of networks promote “timely exchange of resources”? Although many social network theories cut across subject domains, it’s best to start with your subject domain (e.g. coalitions) to help ensure assumptions and definitions mesh with your evaluand. Review papers are an excellent resource.

Lesson Learned: Although a lot has been published, many gaps remain. Sometimes the SNA literature may not be clear about which kinds of networks promote the goals you’ve identified for your evaluand. In this case, you can either 1) do some scholarship to synthesize the literature and argue for such a standard, or 2) go back to your evaluation question and redefine the goal in narrower terms that are described in the literature.

In our example, the literature on coalition networks may not have reached consensus about which types of networks promote timely exchange of resources. But perhaps reviews have been published on which types of brokers foster diversity in coalitions. You can either 1) synthesize the coalition literature to create a rigorous standard for “timely exchange of resources,” or 2) reframe the overall evaluation question as, “How can brokers improve diversity in our coalition’s network?”

Rad Resources:

This white paper clearly describes network structures that promote different types of conversations in social media.

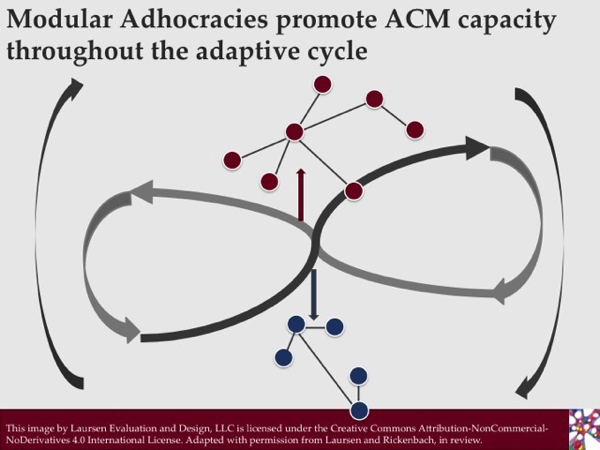

This short webinar reports a meta-synthesis of which networks promote adaptive co-management capacity at different stages of the adaptive cycle.

The American Evaluation Association is celebrating Social Network Analysis Week with our colleagues in the Social Network Analysis Topical Interest Group. The contributions all this week to aea365 come from our SNA TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

This white paper is a GREAT rad resource; I am enjoying digging through it. More broadly, this is a very important topic.

As we seek to move network analysis forward within the field of evaluation, I’ve noticed that people often struggle to appreciate the value of network analysis – if we are not careful, it can easily be reduced to a cool way to visualize data. Continuing to flesh out standards for the practice is a necessary step to showing that there is a science and a method behind these visualizations.

In particular, when we talk about “collaboration capacity”, I think we need more reference to standards in terms of concrete levels for various network and node measures, such as centralization, distance, and density among cliques. Drawing a parallel with classical statistical analysis, for example, there are fairly well-recognized thresholds for what counts as a notable significance level or correlation coefficient. In psychometrics, there’s a decent literature to draw upon when one needs to interpret Cronbach’s alpha. White papers like the one you’ve linked to provide useful standards we evaluators can refer to when we interpret data.
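To make measures like these concrete, here is a minimal sketch in plain Python of how density, degree centralization, and average distance could be computed for a small network. Everything in it is an assumption for illustration: the five-member star-shaped "coalition," the function names, and the choice of Freeman's degree centralization as the centralization measure. It treats ties as undirected and unweighted, and it does not supply any threshold for what counts as a "good" value; that still has to come from the literature.

```python
from collections import deque


def build_adjacency(edges):
    """Turn a list of undirected ties into a dict: node -> set of neighbors."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj


def density(adj):
    """Share of possible undirected ties that are actually present."""
    n = len(adj)
    ties = sum(len(nbrs) for nbrs in adj.values()) / 2
    return 2 * ties / (n * (n - 1))


def degree_centralization(adj):
    """Freeman degree centralization: 0 = all nodes equal, 1 = perfect star.

    Assumes at least 3 nodes and no self-loops.
    """
    n = len(adj)
    degrees = [len(nbrs) for nbrs in adj.values()]
    top = max(degrees)
    return sum(top - d for d in degrees) / ((n - 1) * (n - 2))


def average_distance(adj):
    """Mean shortest-path length over all reachable pairs (breadth-first search)."""
    total, pairs = 0, 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


# Hypothetical coalition: one hub organization tied to four partners.
star = build_adjacency([("Hub", "A"), ("Hub", "B"), ("Hub", "C"), ("Hub", "D")])

print("density:", density(star))
print("degree centralization:", degree_centralization(star))
print("average distance:", average_distance(star))
```

A star like this one is maximally centralized with short paths but low density, which illustrates why a single measure rarely tells the whole story and why the comparison standard matters.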

One of the challenges with network analysis, however, seems to be that what is expected can vary so widely depending on the type of network and how the data were collected. A Twitter network is going to show completely different interaction structures than a network generated from survey-questionnaire data. But this is an exciting challenge for our field that we need to embrace head on.

So, thank you for a great post, and I am in full agreement: analysis should never occur in a vacuum!