Hello, my name is Michel Laurendeau, and I am a consultant wishing to share over 40 years of experience in policy development, performance measurement and evaluation of public programs. This is the last of seven (7) consecutive AEA365 posts discussing a stepwise approach to integrating performance measurement and evaluation strategies in order to more effectively support results-based management (RBM). In all post discussions, ‘program’ is meant to comprise government policies as well as broader initiatives involving multiple organizations.

This last post discusses the creation and analysis of comprehensive and integrated databases for ongoing performance measurement (i.e., monitoring), periodic evaluation and reporting purposes.

Step 7 – Collecting and Analysing Data

Program interventions are usually designed to be delivered in standardized ways to target populations. However, standardization often fails to take into account variations in circumstances that may affect the results of interventions, such as:

- Individual differences (e.g., demographic and psychological factors);

- Contextual variables (e.g., social, economic and geo-political factors/risks);

- Program and institutional variables (e.g., type and level of services, delivery method, accessibility).

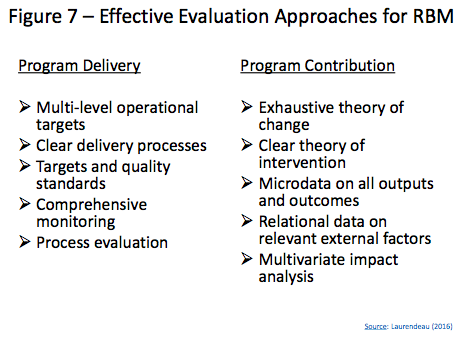

Focusing exclusively on program delivery (i.e., economy and efficiency) through the assessment of the achievement of delivery targets or compliance with delivery standards may be quite appropriate when programs are mature, and the causal relationships between outputs and outcomes are well understood and well established. But this is not always the case, and definitely not so when programs are new or in a demonstration phase (e.g., pilot project) and rely on uncertain or unverified underlying assumptions. In those situations, more robust and better-adapted analytical techniques should be used to measure the extent to which program interventions actually contribute to observed results while taking external factors into account. This is essential to the reliable assessment of program impacts/outcomes.

It is well known in econometrics that incomplete explanatory models lead to biased estimators: when an omitted variable is correlated with the retained explanatory variables, the variation it should have explained is picked up by those retained variables. Translated for evaluation, this means that excluding external factors from the analysis creates a risk of incorrectly crediting the program with impacts that should instead have been attributed to the missing variables (i.e., having the program claim undue responsibility for observed results).
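To make the omitted-variable problem concrete, here is a minimal simulation sketch. Everything in it is an assumption made for illustration: the data are simulated, the variable names (`program`, `context`, `outcome`) are hypothetical, and the true program effect is set to 1.0 by construction. It compares a regression that includes the contextual factor with one that leaves it out.

```python
import numpy as np

def ols(X, y):
    """Least-squares coefficients for y ~ X, with an intercept in position 0."""
    X = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

rng = np.random.default_rng(0)
n = 5000

# Simulated (hypothetical) data: the contextual factor influences both
# program exposure and the outcome, so the two regressors are correlated.
context = rng.normal(size=n)
program = 0.6 * context + rng.normal(size=n)
outcome = 1.0 * program + 2.0 * context + rng.normal(size=n)  # true program effect = 1.0

# Complete model: program and context both included.
full = ols(np.column_stack([program, context]), outcome)

# Incomplete model: context omitted.
short = ols(program, outcome)

print(f"program effect, context included: {full[1]:.2f}")
print(f"program effect, context omitted:  {short[1]:.2f}")
```

Under these assumptions, the complete model recovers a program coefficient close to 1.0, while the incomplete model overstates it, because the program variable absorbs part of the effect of the omitted contextual factor. The size of the overstatement depends on how strongly the omitted factor is correlated with program exposure; if the two were uncorrelated, the program coefficient would not be biased.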

Dealing with this issue would require collecting appropriate microdata and creating complete data sets, holding information on all explanatory variables for each member of target populations, which can then be used to:

- Conduct robust multivariate analysis to isolate the influence of program variables (i.e., reliably assessing program effectiveness and cost-effectiveness) while taking all other factors into account;

- Explore in a limited way (using the resulting regression model to extrapolate) how adjustments or tailoring of program delivery to specific circumstances could improve program outcomes;

- Empirically assess delivery standards as predictive indicators of program outcomes (rather than rely exclusively on benchmarking) to determine requisite adjustments to existing program delivery processes.
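As a rough sketch of the first two uses listed above, the example below fits a multivariate regression on simulated microdata (one row per participant) and then uses the fitted model to explore how the effect of service intensity might vary across participants. The variables (`age`, `unemployment`, `hours`), the coefficients, and the interaction term are all hypothetical assumptions chosen for illustration, not results from any real program.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 4000

# Hypothetical microdata, one row per participant (all simulated).
age = rng.normal(size=n)            # individual variable (standardized)
unemployment = rng.normal(size=n)   # contextual variable (standardized)
hours = rng.uniform(0, 10, size=n)  # program variable: hours of service received

# Assumed data-generating process: services help more for older participants.
outcome = (0.5 * hours + 0.2 * hours * age + 0.3 * age
           - 0.4 * unemployment + rng.normal(size=n))

# Multivariate model with an interaction between service level and age.
X = np.column_stack([np.ones(n), hours, age, unemployment, hours * age])
coef, *_ = np.linalg.lstsq(X, outcome, rcond=None)
b0, b_hours, b_age, b_unemp, b_inter = coef

def marginal_effect_of_hours(age_value):
    """Estimated change in outcome per extra service hour at a given age score."""
    return b_hours + b_inter * age_value

effect_younger = marginal_effect_of_hours(-1.0)
effect_older = marginal_effect_of_hours(1.0)

print(f"effect per service hour, younger participants: {effect_younger:.2f}")
print(f"effect per service hour, older participants:   {effect_older:.2f}")
```

In this simulated setting, the estimated effect of an additional service hour is larger for older participants, which is the kind of finding that could inform tailoring of delivery. Extrapolations of this sort are only as good as the model, so they should stay within the range of circumstances actually observed in the data.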

Developing successful program interventions will require the evaluation function to deal effectively with the above challenges and to better support management decision-making processes.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.