Hello, my name is Michel Laurendeau, and I am a consultant wishing to share over 40 years of experience in policy development, performance measurement and evaluation of public programs. This is the sixth of seven consecutive AEA365 posts discussing a stepwise approach to integrating performance measurement and evaluation strategies in order to more effectively support results-based management (RBM). Throughout these posts, ‘program’ is meant to comprise government policies as well as broader initiatives involving multiple organizations.

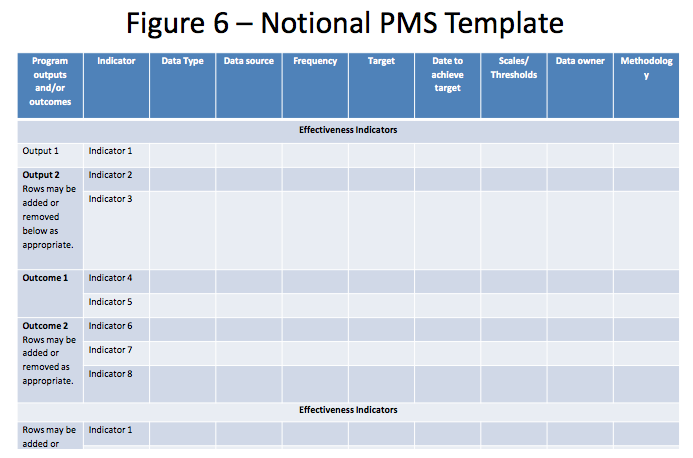

This post discusses how to identify comprehensive sets of indicators supporting ongoing performance measurement (i.e. monitoring) and periodic evaluations, from which subsets of indicators can be selected for reporting purposes.

Step 6 – Defining Performance Indicators

When a logic model is developed using the structured approach presented in previous posts, and gets validated by management (and stakeholders), it can be deemed to be an adequate description of the Program Theory of Intervention (PTI). The task of identifying performance indicators then requires determining a comprehensive set of indicators that includes some reliable measure(s) of performance for each output, outcome and external factor covered by the logic model. In some cases, as discussed in the previous post, the set of indicators may extend to cover management issues as well.
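As a rough illustration of what "comprehensive" means here, the coverage requirement can be checked mechanically: list the elements of the logic model and confirm that each one has at least one indicator attached. The sketch below is only an assumption about how this might be recorded; the element names, indicator names and the `uncovered_elements` helper are all hypothetical and not drawn from any actual program.

```python
# Hypothetical logic model: element name -> kind of element.
logic_model = {
    "Training sessions delivered": "output",
    "Improved staff skills": "outcome",
    "Labour market conditions": "external factor",
}

# Hypothetical indicators, each mapped to the element it measures.
indicators = {
    "Number of sessions held per quarter": "Training sessions delivered",
    "Share of participants passing the skills test": "Improved staff skills",
}


def uncovered_elements(logic_model, indicators):
    """Return the logic model elements that have no indicator, in model order."""
    covered = set(indicators.values())
    return [name for name in logic_model if name not in covered]


missing = uncovered_elements(logic_model, indicators)
print(missing)  # the external factor has no indicator yet
```

In this made-up case the external factor is the gap, which is a common pattern since indicator sets tend to focus on outputs and outcomes.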

Most performance measurement strategies (PMS) and scorecards also require the identification, for each output and outcome, of success criteria such as performance targets and/or standards, which are usually based on some form of benchmarking. This is consistent with a program design mode (i.e. top-down approach to logic models) based on inductive logic where each result is assumed to be a necessary and/or sufficient condition (as discussed in the TOC literature) for achieving the next level of results. This is, however, very limiting, as it reduces the discussion of program improvement and/or success to the exclusive examination of performance in program delivery (as proposed in Deliverology).

Additional useful information that may be required for each indicator includes the following:

- Data type (quantitative or qualitative);

- Data source (source of information for data collection);

- Frequency of data collection (e.g. ongoing, tied with specific events, or at fixed intervals);

- Data owner (organization responsible for data collection);

- Methodology (any additional information about measurement techniques, transformative calculations, baselines and variable definitions that must be taken into consideration in selecting analytical techniques);

- Scales (and thresholds) used for assessing and visually presenting performance;

- Follow-up or corrective actions that should be undertaken based on performance assessments.
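The items listed above can be captured in a single record per indicator. The following is a minimal sketch, assuming a simple three-level scale; the `Indicator` class, its field names, the threshold values and the example indicator are hypothetical choices made for illustration, not a prescribed template.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Indicator:
    name: str
    data_type: str        # "quantitative" or "qualitative"
    data_source: str      # where the data come from
    frequency: str        # e.g. "ongoing", "per event", "quarterly"
    data_owner: str       # organization responsible for collection
    methodology: str = ""  # baselines, calculations, definitions
    # Scale as (lower bound, label) pairs; assumed values, not a standard.
    thresholds: List[Tuple[float, str]] = field(default_factory=list)
    # Corrective action to consider for each scale label.
    follow_up: Dict[str, str] = field(default_factory=dict)

    def assess(self, value: float) -> Optional[str]:
        """Return the label of the highest threshold the value reaches."""
        label = None
        for lower_bound, name in sorted(self.thresholds):
            if value >= lower_bound:
                label = name
        return label


pass_rate = Indicator(
    name="Share of participants passing the skills test",
    data_type="quantitative",
    data_source="Post-training test records",
    frequency="after each session",
    data_owner="Training unit",
    thresholds=[(0.0, "red"), (0.6, "yellow"), (0.8, "green")],
    follow_up={"red": "Review course content with instructors"},
)

rating = pass_rate.assess(0.72)
print(rating, pass_rate.follow_up.get(rating))
```

Keeping the scale and the follow-up actions on the same record as the data source and owner makes it easier to see, for any indicator, who acts on a poor rating and on what evidence.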

Many organizations further require that performance measurement be designed with a view to addressing evaluation needs and adequately supporting the periodic evaluation of the relevance, efficiency and effectiveness of program interventions. However, evaluation and performance measurement strategies are most often designed separately, with evaluation strategies usually being finalized just before the actual conduct of evaluation studies. Evaluations are then constrained by the data collected and made available through performance measurement. For evaluation and performance measurement strategies to be coordinated and properly integrated, they need to be developed concomitantly at an early stage of program implementation.

aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.