Hello! I am Asher Beckwitt, Research and Evaluation Project Director with Ripple Effect. My expertise is in qualitative methods, including interviews, focus groups, and content analysis. Hi! I am Alexis (Lex) Helsel, Lead Program Evaluator with Ripple Effect. I have extensive experience in quantitative methods, including survey design and analysis, administrative data analysis, and bibliometric analysis. Today we are sharing tips that draw on our complementary expertise. They come from our experience integrating mixed methods into large- and small-scale holistic evaluations of federally funded biomedical research programs.

Incorporating multiple and mixed analytic methods into your evaluations gives you a comprehensive understanding of program outcomes and impacts. Each method has its own strengths and limitations, so using several methods helps offset the limitations of any single one. Comparing and contrasting findings across multiple methods is called triangulation. Triangulating findings across methods lets evaluators support the validity of their data and increase confidence in the results. It also provides deeper insight by looking at the data from multiple perspectives.

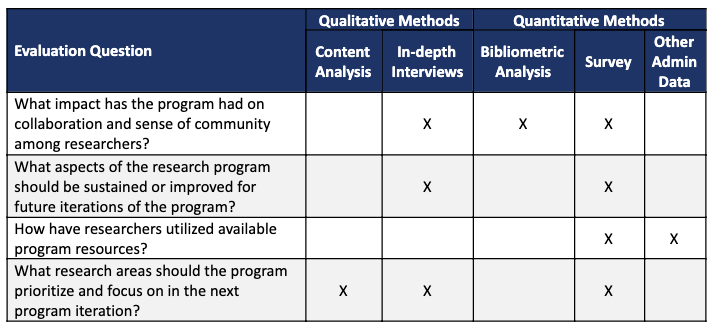

- Hot Tip: Start at the beginning with your evaluation questions, and choose the methods best suited to answer them. Use an evaluation matrix to map which methods address each evaluation question. The matrix lets you confirm that every question has enough coverage to allow for triangulation. A snapshot of a sample evaluation matrix that we use for this purpose accompanies this post.

- Lesson Learned: Schedule regular weekly or biweekly check-ins with all team members supporting the evaluation. Use them to share findings and insights that can inform ongoing data collection and analysis. Don't wait until the end of the project, when it's time to write the report, to bring everything together! Triangulation is most effective and meaningful as an ongoing process, not a throwaway last step.

- Hot Tip: Organize reports and findings by evaluation question rather than by method. Help your audience understand the findings with visualizations that show how data from one method support data from the others. Explore and try to explain any findings that contradict one another. Interviewing stakeholders, or asking them to review your results, can be a great resource for interpreting findings and investigating discrepancies.

- Hot Tip: If you don't have the in-house expertise, skills, or resources to include multiple methods, you can compare and contrast your findings with the published literature. Are they the same or different? If they differ, ask why. This comparison can provide additional evidence when multiple methods aren't available. It can also help you understand any discrepant findings.
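To make the evaluation matrix tip more concrete, here is a minimal Python sketch that stores a matrix as a dictionary mapping each evaluation question to its planned methods. It then flags any question without enough methods to triangulate. The questions, method names, the `triangulation_gaps` and `questions_by_method` helpers, and the two-method threshold are all hypothetical assumptions for illustration. They are not our actual matrix or tooling.

```python
# Hypothetical evaluation matrix: each evaluation question maps to the set of
# methods planned to address it. Questions and method names are illustrative only.
EVALUATION_MATRIX = {
    "EQ1: Did the program increase research productivity?": {
        "survey", "bibliometric analysis", "interviews",
    },
    "EQ2: How did participants experience the program?": {
        "interviews", "focus groups", "survey",
    },
    "EQ3: Did funded projects lead to new collaborations?": {
        "administrative data",
    },
}

# Assumption: a question needs at least two distinct methods to be triangulated.
MIN_METHODS = 2


def triangulation_gaps(matrix, min_methods=MIN_METHODS):
    """Return the questions covered by fewer than `min_methods` distinct methods."""
    return sorted(q for q, methods in matrix.items() if len(methods) < min_methods)


def questions_by_method(matrix):
    """Invert the matrix so each method lists the questions it informs."""
    inverted = {}
    for question, methods in matrix.items():
        for method in methods:
            inverted.setdefault(method, []).append(question)
    return {method: sorted(qs) for method, qs in sorted(inverted.items())}


if __name__ == "__main__":
    for question in triangulation_gaps(EVALUATION_MATRIX):
        print("Needs at least one more method:", question)
    for method, questions in questions_by_method(EVALUATION_MATRIX).items():
        print(f"{method}: {len(questions)} question(s)")
```

With this sample matrix, only EQ3 is flagged, because it relies on administrative data alone. The inverted view shows which methods inform several questions. Those methods are natural places to look for converging or conflicting findings during team check-ins.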

The American Evaluation Association is celebrating RTD TIG Week with our colleagues in the Research Technology and Development TIG. All of the blog contributions this week come from our RTD TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

Hello Asher and Lex,

Thanks for describing, so succinctly, an easy-to-understand method for triangulating across data. Thanks also for showing how to keep the whole team on track by understanding the goals and objectives and thinking through the various aspects early in the process! We all need ways to do this better, particularly within interdisciplinary teams.

Hello Asher and Alexis,

Thank you for sharing such an informative article regarding triangulation of qualitative and quantitative evaluation methods. I am currently completing a course in Program Inquiry and Evaluation at Queen’s University and have been asked to comment on an article of interest.

UNICEF Innocenti (2014) explains, "to increase the credibility of the data, both qualitative and quantitative data needs to be collected from multiple sources to answer the key evaluation questions." Integrating your two areas of expertise brings rich evaluation methods to fruition. It supports a holistic approach to gathering data for meaningful evaluation use.

I appreciate your point that each method has its own advantages and challenges. Recognizing the unique demands and limitations of different approaches requires careful thought about which combination of evaluation methods will best serve the purpose of the evaluation. Triangulation lets evaluators make the most of each method's advantages while offsetting its limitations as much as possible.

The evaluation matrix you have designed does a wonderful job of aligning appropriate data collection methods with key evaluation questions. According to Shulha and Cousins (1997), "… the evaluation practice community needs to be versatile in order to be responsible to the needs of its clientele" (p. 205). Using diverse methods allows evaluators to discover greater insights and strengthens the validity of the data collected.

Regarding your "Lesson Learned," I like how you emphasized regular weekly or biweekly check-ins with all team members from beginning to end. That approach is better than producing only a summative report at the end of a program. Regular check-ins create richer opportunities for evaluative learning between the evaluation team and stakeholders. This collaboration also makes it easier to improve a program during implementation as new findings emerge.

I found that your collaborative work really highlights the key understandings I have gained throughout my current course. Thank you for sharing such an insightful learning piece!

Briana Raposo

References:

Shulha, L., & Cousins, B. (1997). Evaluation use: Theory, research and practice since 1986. Evaluation Practice, 18, 195-208.

UNICEF Innocenti. (2014, Dec 15). Data Collection & Analysis. [Video file]. Retrieved from https://www.youtube.com/watch?v=HFGVJJMDo4I

Hi Asher and Alexis,

Thank you for sharing your expertise in this intricate (and at times, convoluted!) field of program evaluation. I am currently taking a course in Program Inquiry and Evaluation at Queen's University, and we are nearing the end of the course. My learning curve has been nothing short of steep. It has been challenging to tackle a whole new field that I was not even aware of at first. After reading your blog, I'm happy to report that I can actually begin to understand your language!

I appreciate your clear definition of triangulation: using multiple analytic methods to compare and contrast findings. By triangulating, evaluators can help offset the biases that come from relying on a single method. I also learned that using a team of evaluators, rather than a single evaluator, may help reduce bias. In your experience, is it common for a program evaluation to use several evaluators? Is this often feasible for social programs with limited funding to put toward a team of evaluators?

I also noticed that your 'Hot Tips' and 'Lesson Learned' support what I have learned in my course. Sharing evaluation findings throughout the evaluation, rather than waiting until the end, has many benefits. As you mentioned, it makes triangulating findings more effective and meaningful. This kind of process use encourages collaboration between stakeholders and evaluators. It also keeps communication open, so that any discrepancies in the findings can be investigated early. I've learned that process use is very beneficial in a program evaluation. From your experience, though, what are some of its drawbacks?

Thank you for your insights,

Tram