My name is Sara Dodson, and I work in the Office of Science Policy at the National Institutes of Health (NIH), where I have led the development of a series of case studies on biomedical innovations. Today I’ll kick off Research, Technology and Development (RTD) TIG Week by discussing the challenging work of tracing public research investments to societal impact. I frame that work around the question most germane to NIH: “How do we, a federal agency that supports scientific research on the basic side of the R&D spectrum, track progress toward our mission of improving health?” The complexity of this question is rooted in several factors, including the long, variable timelines of translating research into practice; the unpredictable, nonlinear nature of scientific advancement; and the intricate ecosystem of health science and practice (research funders and policymakers, academic and industry scientists, product developers, regulators, health practitioners, and a receptive public).

My team started out with two core objectives: 1) develop a systematic approach to tracing the contribution of NIH and other key actors to health interventions, and 2) identify a rich tapestry of data sources that provide a picture of biomedical innovation and its attendant impacts.

Hot Tip:

To make this doable, we broke the problem into bite-sized pieces by conducting case studies on specific medical interventions. For each case, we chose an intervention already in health practice and performed both a backward trace and a forward trace. Moving backward from the intervention, we searched for and selected pivotal research milestones, reaching back to the basic research findings that set the stage for progress and documenting the role of NIH and others along the way. Moving forward, we looked for evidence of the intervention’s influence on health, knowledge, and other societal outcomes.

Rad Resources:

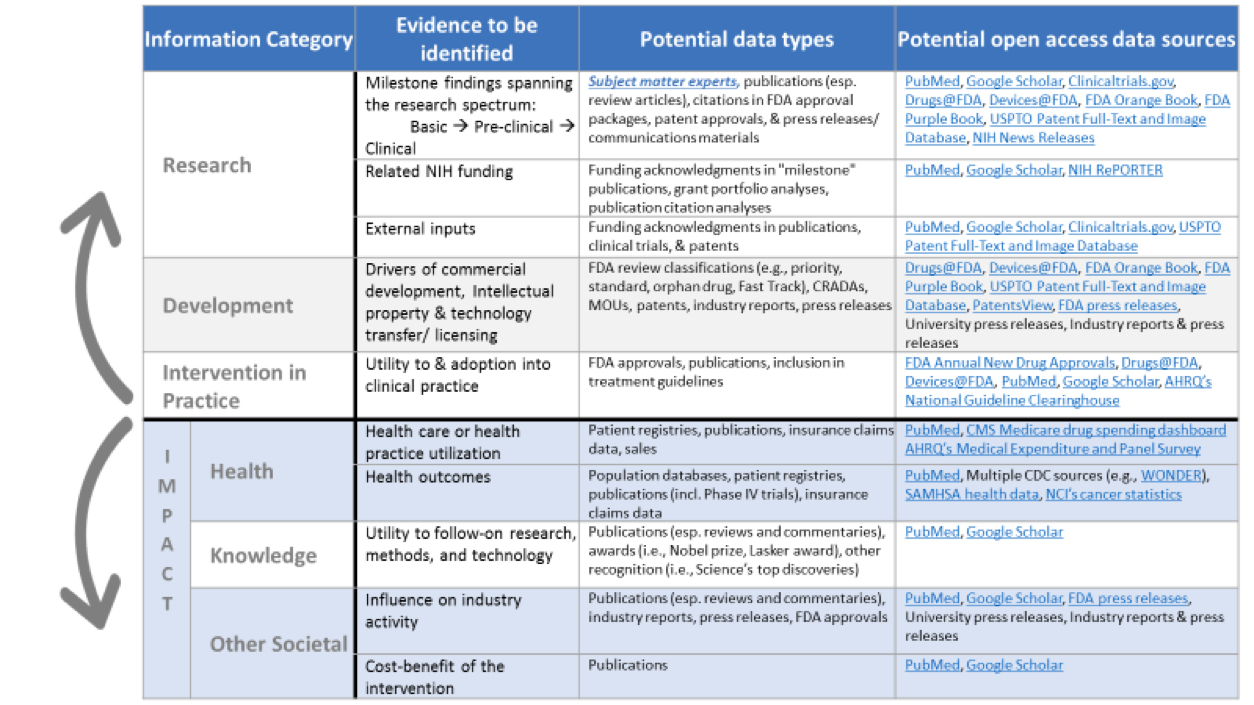

Dozens of data sources proved useful. For each information category we examined, the table above illustrates the types of evidence we searched for and some of the data sources we used.[1]

Lessons Learned:

Data needs – We need more comprehensive, structured datasets (e.g., data on FDA-approved drugs, biologics, and devices) with powerful search and export capabilities. Going further, wide-scale efforts to mine and structure the citations in sources such as FDA approval packages, patents, and clinical guidelines would be very useful.

Tool needs – These studies are data- and time-intensive, each requiring a couple of months of full-time effort to conduct. Sophisticated data aggregators could help semi-automate the process of identifying “milestones” and linking medical interventions to changes in population-level health outcomes and other societal impacts.

Uses – These studies have many potential uses, including science communication and revealing patterns in successful research-to-practice pathways and in the influence of federal funding and policies. We have published a handful of the case studies as “Our Stories” on the Impact of NIH Research website – I invite you to take a look!

[1] Only open-access data sources are included in the table. We also used proprietary data sources and databases available to NIH staff.

The American Evaluation Association is celebrating Research, Technology and Development (RTD) TIG Week with our colleagues in the Research, Technology and Development Topical Interest Group. The contributions all this week to aea365 come from our RTD TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.