My name is Sondra LoRe, and I often have the pleasure of evaluating STEM education programs that include the training and development of students, educators, and community advocates in outreach and informal science education. I work as the evaluation manager for the National Institute for STEM Evaluation & Research (NISER) and as an adjunct professor for the Evaluation, Statistics, and Measurement Program at the University of Tennessee. NISER evaluates projects serving under-represented groups in STEM and currently serves seventeen programs across the US.

Lessons Learned: The “data” baking challenge

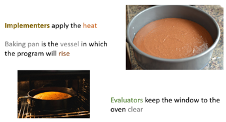

Much like baking a great layered cake, evaluation requires combining various elements to increase its value. Outreach, informal science, and training programs are often implemented by people who did not participate in designing the curriculum and activities; these people are the “implementers.” For example, graduate students in school counseling may implement an outreach education program for high school students as part of their practicum, or a docent in a mobile science lab may implement STEM activities with inner-city youth. Most evaluations gather data from all stakeholders involved. But unless the data collected from program implementers is layered in intentionally and formatively, opportunities to make data-informed decisions may be lost, much like leaving an ingredient or an important baking step out of your recipe.

Cool Trick: Begin with the end in mind – The mission and vision of the program will help you layer a delicious cake recipe and make for an ingredient-rich evaluation. The layers of your cake – or data collected – should inform the design of the program.

Hot Tip #1: Gather ingredients – Which stakeholders, or implementers, are on the front line with the population being served by the program? What are their needs? What formative data will you collect so that adjustments to the program can be made in a culturally responsive way? What “fixes” or “adjustments” are being made to the curriculum to improve the program and service?

Hot Tip #2: Bake your layers – Collect and analyze your data with an eye toward keeping a consistent temperature.

- How are the layers of data collection informing the development of the program?

- What can we learn about the program from implementers in the field?

- What factors impact the experiences of program implementers?

- In what ways have the experiences of the program implementers changed over the course of the outreach?

- What is the value of data to program designers?

Hot Tip #3: Assemble your cake – The layers of data should be held together as a cohesive unit that supports the structure of the program elements. An uneven layer will disturb the structure of the program, and your cake.

Cool Trick: Having your cake and eating it too

Valuing implementer feedback improves the effectiveness of the evaluation for all stakeholders. Formative and multi-faceted approaches to data collection and reporting allow for real-time engagement in the valuing of the evaluation. Serve and enjoy!

The American Evaluation Association is celebrating RoE TIG Week with our colleagues in the Research on Evaluation TIG. All of the blog contributions this week come from our RoE TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

Hi Sondra,

I am currently working my way through a Master's program at Queens University and am just finishing up a course on Program Evaluation Design. It has been a very interesting course for me, as I have zero experience in this area. Throughout the course we have been using this blog site to find articles of interest to us in this area. I have found them, and yours in particular, very helpful, as they use very clear terms and language to describe the process of evaluation. Many of our course readings have been very technical in language, which has been tricky to understand at times. Your analogy of baking a cake illustrates the process very clearly for me. We were required to design a program evaluation based on a program of our choice. I found the most difficult part to be figuring out how I can ensure that all stakeholders are able to have a voice in the evaluation (i.e., making sure that no one is left out). Do you have any suggestions that would help with this? Thanks so much for the clarity of your article!

Dan

Thank you, Dan! I appreciate the kind words!