Greetings! My name is Juan D’Brot, Senior Associate at the Center for Assessment. I serve as the National Council on Measurement in Education (NCME) representative for the Joint Committee on Standards for Educational Evaluation and will be discussing the relationship among measurement, research, and evaluation.

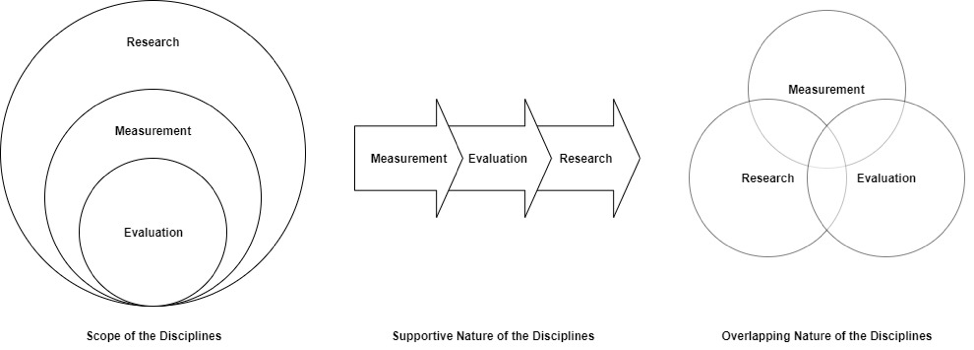

My first job out of graduate school, as a Research and Evaluation Specialist, required someone who could navigate the interplay among measurement, research, and evaluation. During my interview, I (like an amateur) mistakenly used the terms research and evaluation interchangeably. While my work helped me crystallize the interconnected nature of the three, I wish I had understood it earlier in my career. One could think of their interaction like this:

Any of these three could work, but you need to know ahead of time when and where to use the methods for each. Here are three main takeaways I’ve learned.

Lesson Learned 1: Be clear about your purposes and intended uses.

Consider the following (abridged) definitions:

- Measurement focuses on accumulating evidence to support specific interpretations of scores for an intended purpose or use

- Research tests theories to generalize findings and contributes to a larger body of knowledge

- Evaluation is a systematic method for collecting, analyzing, and using information to evaluate or improve a program

Depending on the purpose of your work, you may use some or all of these approaches. Beginning with the end in mind will help organize your efforts.

Lesson Learned 2: Borrow from other disciplines where appropriate.

It’s important to know how each approach can serve the other. Consider this quote:

Everything that can be counted does not necessarily count; everything that counts cannot necessarily be counted. –William Bruce Cameron (1963)

Recognize the value in using literature to understand the context, intent, and interpretations of tools that are used in evaluations. Leverage other disciplines to help understand those context-dependent and context-independent conditions that inform good evaluations.

Lesson Learned 3: Extend your findings beyond your own project or discipline.

Generalizing beyond a specific program can be challenging. However, we should strive to identify context-dependent or context-independent conditions for future evaluators. Correspondingly, researchers should extend findings to interpret results in other programs or contexts.

I believe we can place a greater emphasis on the impact and applicability of our research in measurement. Generalization and contribution of knowledge are important, but we should do a better job of extending findings to the real world. The Program Evaluation Standards can be useful in helping us apply and communicate our research more broadly. By adopting an evaluative perspective, we can better use our research to determine the effectiveness of programs, models, or systems.

This week, we’re diving into the Program Evaluation Standards. Articles will (re)introduce you to the Standards and the Joint Committee on Standards for Educational Evaluation (JCSEE), the organization responsible for developing, reviewing, and approving evaluation standards in North America. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

Hello Juan,

My name is Natalie Lenzen, and I am a graduate student at Arizona State University. I am currently enrolled in a course focused on understanding the difference between research and evaluation. While completing my discussion post for the class, I came across your work. Like you, I have been guilty of using these terms interchangeably. I found your diagrams and descriptions extremely helpful in my journey to better understand the differences between research and evaluation.

In the context of your article, do you consider evaluation to be a subset of research? Or do you consider research as a subset of evaluation?

Thank you for sharing your perspective and experiences with understanding research versus evaluation. I would greatly appreciate your insight on these questions.

Hi,

My name is Joanne Campa, and I am in a graduate course titled Introduction to Research and Evaluation in Education. Currently, our focus is identifying how research and evaluation differ. Your post has helped me understand not only that research and evaluation are not interchangeable, but also how they are interconnected with each other and with measurement. Your visual reminded me a bit of another visual posted by Dr. Dana Wanzer; however, hers also included research as a sub-component of evaluation. What are your thoughts on research as a sub-component of evaluation?

Thank you,

Joanne

Hello, Juan.

I am so appreciative of the visuals you provide in your explanation of the interconnectedness among measurement, research, and evaluation. Each provides a valid explanation, with an emphasis on the evaluator being clear about the intended use of the evaluation. The lessons you share give a clear understanding of the differences among the three and have reminded me to be precise in the language I use when evaluating a program.

I am currently taking a course about inquiry and evaluation as part of a master’s program and have been working on a program evaluation design for StrongStart BC. Systematically collecting, analyzing, and using the data appropriately will be imperative for positive improvements to the program.

Your quote:

Everything that can be counted does not necessarily count; everything that counts cannot necessarily be counted. –William Bruce Cameron (1963)

I’d like to share this thought-provoking quote in my resource room this fall, should we return. In a rapidly changing classroom landscape, it would serve as a valuable reminder to step back and consider the context of everything we ask of our students and everything we assess and evaluate them on. One of the biggest dilemmas evaluators seem to encounter during program evaluation is the context in which the evaluation is used. There are so many variables to consider that cannot actually be measured in a given circumstance.

You touch on this in Lesson Learned 3: identifying context-dependent and context-independent conditions for future evaluators. I again appreciate the emphasis you place on sharing and developing findings. In a complex, evolving society that is at times blanketed with uncertainty, I agree that we need to find ways to communicate findings, extend discoveries, and use an evaluative perspective to determine the effectiveness of programs.

It seems that the Scope, Supportive Nature, and Overlapping Nature of the Measurement, Evaluation, and Research disciplines could be called upon at different times during the learning process. Connecting measurement, research, and evaluation could be viewed as steps in a learning process. Other learning paradigms likely support a different type of interaction among these disciplines. There may also be other disciplines to consider, such as synthesis or reconciliation. The ability to move between these learning modes facilitates the application of domain-specific learning to other domains. Thanks for the interesting post.