I am Carla Hillerns from the Office of Survey Research at the University of Massachusetts Medical School’s Center for Health Policy and Research. In 2015, my colleague and I shared a post about how to avoid using double-barreled survey questions. Today I’d like to tackle another pesky survey design problem – leading questions. Just as we don’t want lawyers asking leading questions during a direct examination of a witness, it’s also important to avoid leading survey respondents.

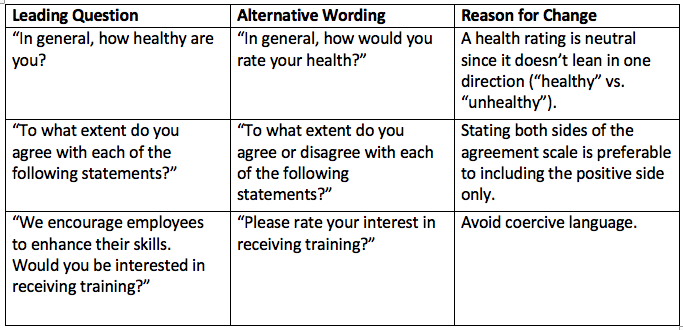

By definition, a leading question “guides” the respondent towards a particular answer. Poorly designed questions can create bias since they may generate answers that do not reflect the respondent’s true perspective. Using neutral phrasing helps uncover accurate information. Here are a few examples of leading questions as well as more neutral alternatives.

Hot Tips for Avoiding Leading Questions:

- Before deciding to create a survey, ask yourself what you (or the survey sponsor) are trying to accomplish through the research. Are you hoping that respondents will answer a certain way, which will support a particular argument or decision? Exploring the underlying goals of the survey may help you expose potential biases.

- Ask colleagues to review a working draft of the survey to identify leading questions. As noted above, you may be too close to the subject matter and introduce your opinions through the question wording. A colleague’s “fresh set of eyes” can be an effective way to tease out poorly phrased questions.

- Test the survey. Using cognitive interviews is another way to detect leading questions. This type of interview allows researchers to view the question from the perspective of the respondent (see this AEA365 post for more information).

Rad Resource: My go-to resource for tips on writing good questions continues to be Internet, Phone, Mail, and Mixed-Mode Surveys: The Tailored Design Method by Dillman, Smyth & Christian.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

Hi Carla,

I thoroughly enjoyed reading your article. I am in the process of developing a survey for a youth cooking program and will definitely keep your hot tips in mind to avoid leading language. I especially liked tip #1: “ask yourself what you are trying to accomplish through the research.” It is extremely important to try to avoid bias as an evaluator. Determining whether a survey is the best tool for data collection also helps in exploring the underlying goals of the evaluation.

Since I am writing the survey for youth, I want to keep it as straightforward and simple as possible, but I do not want to accidentally guide them in any one direction! Do you have any more tips on phrasing and wording surveys for youth? Would a rating-scale survey, or wording such as “highly agree” and “highly disagree,” be more beneficial?

As well, do you believe there is an optimal time frame for conducting a post-program survey? In my experience, when a survey is conducted right at the end of a program, participants can sometimes feel rushed or muddled in their thoughts, because they haven’t yet had time to absorb and process them. On the other hand, if I wait too long, participants may begin to forget their feelings and attitudes during the experience. Any advice is appreciated. Thanks again for taking the time to share your knowledge and expertise with us!

Vivian Cai

Hi Carla,

Thanks for your blog post. I agree that the wording of a question is very important when you’re trying to elicit an unbiased response. I’m enrolled in the same class as Sarah and have some questions of my own. I would greatly appreciate it if you could comment on them.

I’m a TESL teacher in Beijing. I mostly teach primary school, but I also teach part-time at universities. If I were to conduct an evaluation, what questionnaire format would you use if the stakeholders don’t speak English as their first language? Also, do you have any advice on how to word the questions for non-native speakers?

I’m planning to be out here for a long time, so any feedback would be greatly appreciated!

Thanks again for your tips and resources!

Best,

Nadim

Hi Sarah,

My name is Nadim, and I’m also in the Program Inquiry and Evaluation class at Queen’s University. I quite enjoyed your blog, and I think your suggestions are excellent. I agree that wording is extremely important if you want to elicit an unbiased response. Sometimes a simple rephrasing of a question can produce a more favorable outcome. I have an issue with my evaluation questions that I hope you can comment on.

I’m currently teaching at a public primary school in Beijing. All my colleagues are Chinese, as are the students. I’m wondering if you have any advice on how to word evaluation questions for people who don’t speak English as their first language. I’m limited in how much class time I can use to administer the survey to my students. I would like to ask my colleagues in-depth questions, but I’m not sure that’s possible, since certain phrases may be lost in translation. Time with the teachers is also limited.

Again, thanks for sharing your tips!

Hello Sarah,

Thanks for your comments! Here are some answers to your questions.

1. How much survey testing is sufficient? You raise a tough question, but it’s a good one. Situations vary, so it’s difficult to assign a number to the interviews and test surveys. You also have to factor in your level of expertise in conducting cognitive interviews. Realistically, most of us face budget and time constraints. In my experience, even a couple of cognitive interviews can give you a good sense of problems with your survey questions. Ideally, you’ll do a few more, to the point that you’re no longer hearing new things. If you don’t have the time or resources for cognitive interviews and pilot testing, I consider peer review to be an informal version of testing. It’s certainly better than no testing at all.

Regarding your second and third questions, yes, I do think that cognitive interviews can reveal some potential bias due to question wording. The interviews can uncover different types of problems that you might not have been aware of beforehand.

Regarding your last question, you may want to check out the University of Illinois at Chicago’s webpage on survey research. You can sign up for free weekly emails that cover survey topics that might be of interest to you. http://www.srl.uic.edu/Publist/bulletin.html

Best of luck to you.

Carla

Thank you Carla!

I will take your advice into consideration as I continue to move forward with my program evaluation design. Once again, thank you for taking the time to answer my questions!

Sarah

Hello Ms. Hillerns,

My name is Sarah El-Rafehi, and I am currently a student at Queen’s University pursuing a Professional Master of Education degree with a speciality in assessment and evaluation. Your post on the AEA365 website, “Objection! That’s a Leading Survey Question,” caught my interest because it relates to several aspects of the program evaluation design I am preparing for my current course, “Program Inquiry and Evaluation.” I think it is essential that, when designing survey questions, evaluators pay close attention to how each question is framed, its intention or goal, and whether it may lead to bias. You bring up a key concept that all evaluators should be aware of when designing survey questions: leading questions. Poorly designed survey questions can produce very different outcomes or respondent perspectives, because people interpret a question that isn’t clear, direct, or explicit in different ways. The way a question is worded can have a huge impact on its meaning. Your article provides key strategies that evaluators can use to avoid falling into the trap of writing leading questions.

I think bias is a significant concept that evaluators need to be more aware of. Your suggestion that exploring the underlying goals of the survey may help expose potential bias is important because it prompts us to reflect on the purpose of the survey. Self-reflection and a greater awareness of potential biases in survey questions should help evaluators create neutral questions. Your second hot tip relates to the concept of peer review: when others review an author’s work, they offer alternatives and possible suggestions for improvement. When we are creating survey questions, we may not recognize mistakes, typos, or unintentional biases, so it’s important to have someone else review the work.

Your third tip is quite thought-provoking: how much survey testing is sufficient? When you mention “cognitive interviews,” do you mean that giving the survey to stakeholders during an interview would be useful in identifying possible leading questions or biases? Would interviewees’ literacy levels affect whether, and how accurately, leading questions or biases in the survey can be identified? My final question: is there a specific survey format that minimizes stakeholder bias? The three hot tips you listed for avoiding leading questions will be of great use to me when I begin designing my survey questions as the final component of my program evaluation design.

Thank you for sharing your advice and tips! I will definitely check out the resources you provided. Your article has helped me think carefully about how I want to frame my survey questions.

Sincerely,

Sarah El-Rafehi