Hi there! We are Harry Daley, Director of Community Investment & Learning, and Carrie Tanasichuk, Director of Impact Measurement & Evaluation, at the Greater Saint John Community Foundation. While we have both worked predominantly in the non-profit sector, Harry brings experience as a skilled facilitator and community developer, and Carrie brings experience as an evaluator. One recent task was to create a reporting structure for the Foundation centred on learning.

Among non-profits, the word evaluation often comes hand-in-hand with the concept of accountability. Are projects meeting their pre-determined outcomes, and if so to what extent? Is the funder’s money being spent on projects that have the impact advertised in their initial application? As a result of this line of questioning, it is not uncommon for a sense of dread to overcome staff in organizations when funders reach out for their mid-term and final reports. Trust, on both sides of this relationship, can feel non-existent.

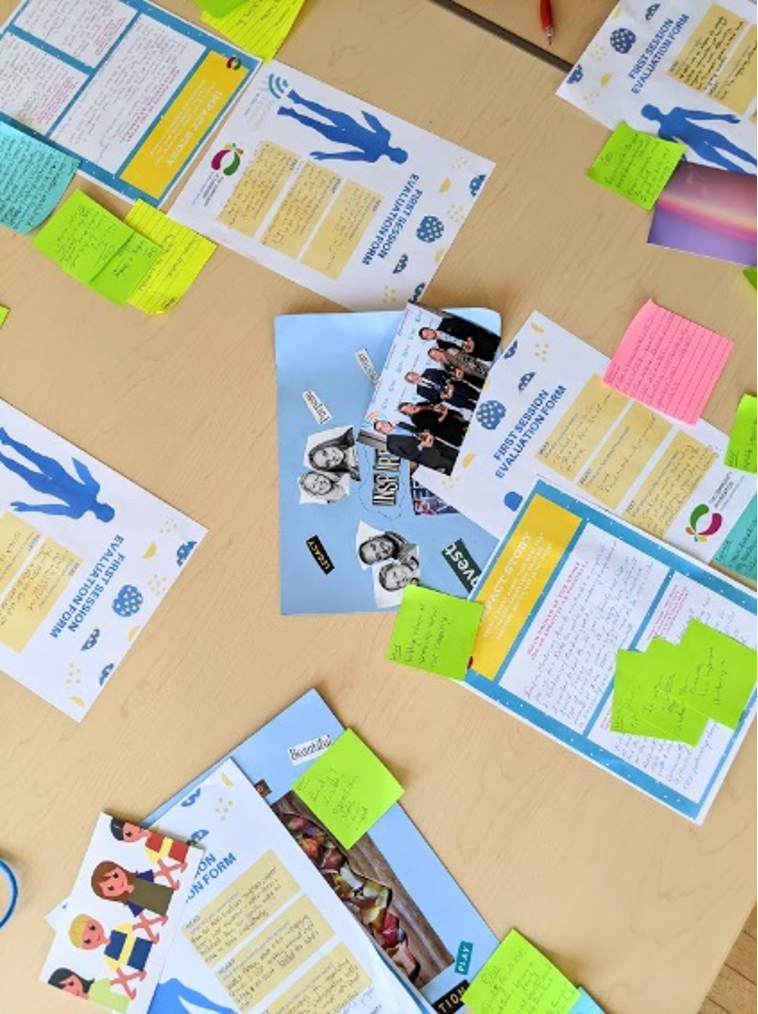

As we work to create the Impact Measurement & Evaluation branch at our local Community Foundation, we have focused on creating a culture of curiosity and reflection within our relationships with the organizations we fund. We have re-oriented our reporting process to focus on stories and reflective questions that bring the funded projects and our Foundation to a place of deeper understanding of the successes, challenges, and emergent needs of the community. In the process, this approach is bridging the divide between our Foundation and the organizations working to create change, amplifying the impact within our community.

Lessons Learned:

- Although many of the projects we fund dread the thought of reporting centred on outcome accountability, shifting to a process focused on learning has often been met with both enthusiasm and apprehension. It seems we’ve been wired to feel the need for something ‘more tangible’; unlearning is an important part of the process!

- Engaging all levels of staff involved in funded projects has been pivotal in experiencing rich learning. Creating a safe space and facilitating the learning process with intention is a must.

- Staff at non-profits spend a LOT of time reporting to their various funders. Before asking organizations to report information, funders should ask themselves how they will use the information being asked for.

Rad Resources:

- Check out the Centre for Public Impact’s blog series called Measurement for Learning. Author John Burgoyne and his colleague Toby Lowe are pioneering this approach: https://www.centreforpublicimpact.org/measurement-learning-different-approach-improvement/

- Comic Relief has implemented a number of programs and organizational processes focused on learning, including their reporting structure, found here: https://www.comicrelief.com/funding/managing-your-grant/learning-and-reporting/

The American Evaluation Association is celebrating Nonprofits and Foundations Topical Interest Group (NPFTIG) Week. The contributions all this week to aea365 come from our NPFTIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

Hi Harry and Carrie,

Thank you very much for sharing your blog post!

I am a student who is about to finish a course on Program Inquiry and Evaluation, and my ongoing project was creating a program with subsequent evaluation processes.

Since this is an entirely new arena for me, I certainly appreciated that there is an online community where I am able to read through a vast array of content, some much more applicable than others.

You mention in the article how, with non-profits, evaluation equates to accountability. Since there is a shared objective of success from the organization and the funders, are there ever instances where mis-utilization of data clouds the process of impact?

Given your emphasis on trust being vital to all entities, do you have any additional recommendations that assist with both building and maintaining it?

I very much appreciate the sense of vulnerability in your post, especially in the lessons that you have learned. Enthusiasm and apprehensiveness are not commonly used together when describing… well… anything! I find it impressive how, despite the common anxieties within your domain, you continue to strive toward creating safe environments that promote learning.

You also shared that you re-oriented the reporting process of impact measurement to provide more reflective questions for the purpose of building deeper understanding of emerging needs, successes and challenges. Since you have noticed the process to be aiding in bridging the divide, would you at all be able to share some of the differences between your questions now and, say, a year ago? I am very curious to learn more about how to authentically build positive relationships between all entities involved in evaluation.

Thank you again! I hope to learn more about how facilitating a learning process that is focused on building relationships and culture can further the outcomes of evaluation.

Best,

Anna