Hi! We are Allison Meserve (Prosper Canada), Rochelle Zorzi and Liz Martin (Cathexis Consulting). In this post, we highlight the process we used to co-develop shared indicators for six organizations involved in a national project and what we’ve learned along the way. We hope this will be useful for your own funder-non-profit relationships!

When Prosper Canada received funding for the project from the Government of Canada’s Social Development Partnerships Program (SDPP), financial empowerment (FE) was a new field in Canada that included a variety of organizations and funders. To develop a meaningful set of 18 indicators, we used a collaborative process that included:

- A review of published research and grey literature on FE measures and FE funding agreements

- Interviews with participating organizations to learn about their services, clientele, and established data collection processes/tools

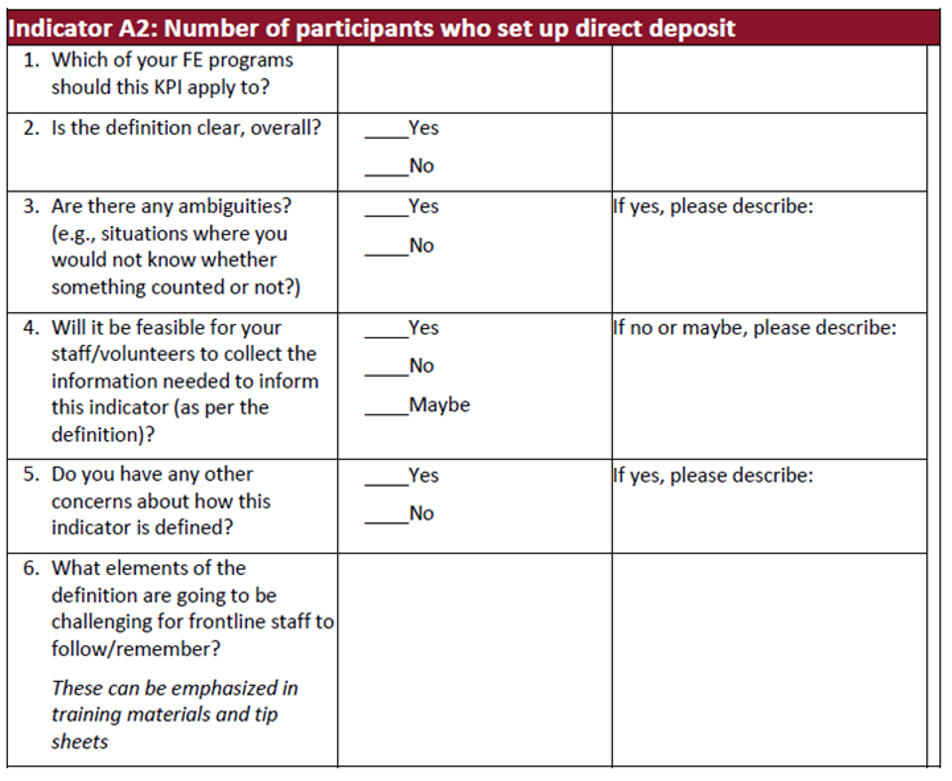

- Requesting written feedback on the draft indicators from six lead partners in advance of teleconferences (see image)

- Three all-party teleconferences to confirm the indicator definitions and come to consensus where there was disagreement

- Piloting the indicators with six lead partners to ensure the indicators were clear, feasible and relevant in practice

- Respecting the autonomy of our partners to determine which of the indicators they would use

- Providing financial support for organizations to update their databases and train staff

Lessons Learned:

- Make real commitments to the co-development process: It was critical to engage our partners as both the end users and the providers of the data. Prosper Canada’s priority as the pass-through funder was to support the organizations in reaching consensus. With this vision, we took the time to develop trust and buy-in. We have had few challenges since putting the indicators in place, which we attribute to the collaborative process.

- Hire consultants external to the organizations: Cathexis acted as a neutral party with no vested interest in the indicators and no preconceptions or biases. This created a safe environment for everyone to speak plainly. Getting to an agreement was easy enough once everything was put on the table.

- Involve other funders in the process: This was a shortcoming of our process. Our partners receive funding for FE services from multiple funders. Rollout of the indicators would have been more streamlined for partners if all funders had been aligned around the same indicators for their FE projects.

- Use surveys to speed up the easy conversations: By getting feedback in advance, we were able to focus teleconferences on the most challenging issues. This also allowed partners to consult with other FE organizations in their community.

Rad Resources:

- REDCap and Power BI: Not all organizations have databases; by centralizing data management and analysis, we were able to provide an attractive output for organizations

- Teagle Foundation’s Collaboration Continuum: A commitment to collaboration requires additional capacities and support

- Mark Cabaj’s Shared measurement paper: As these indicators were used in five different communities, we also had to address how they would influence Collective Impact efforts

The American Evaluation Association is celebrating Nonprofits and Foundations Topical Interest Group (NPFTIG) Week. The contributions all this week to aea365 come from our NPFTIG members. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.