Greetings! My name is Jacqueline Singh. I’m an Executive Evaluation and Program Design Advisor at Qualitative Advantage, LLC and the Program Design TIG Program Chair. When working with education, government, or nonprofit organizations, I’ve often observed research, evaluation, assessment, and performance measurement being conflated. This may be due in part to the common methods, designs, approaches, and tools these modes of inquiry share, even though their “purposes” differ. Individuals may be unaware that “purpose” informs the type(s) of “questions” asked, which in turn inform the research designs and evaluation plans that get implemented.

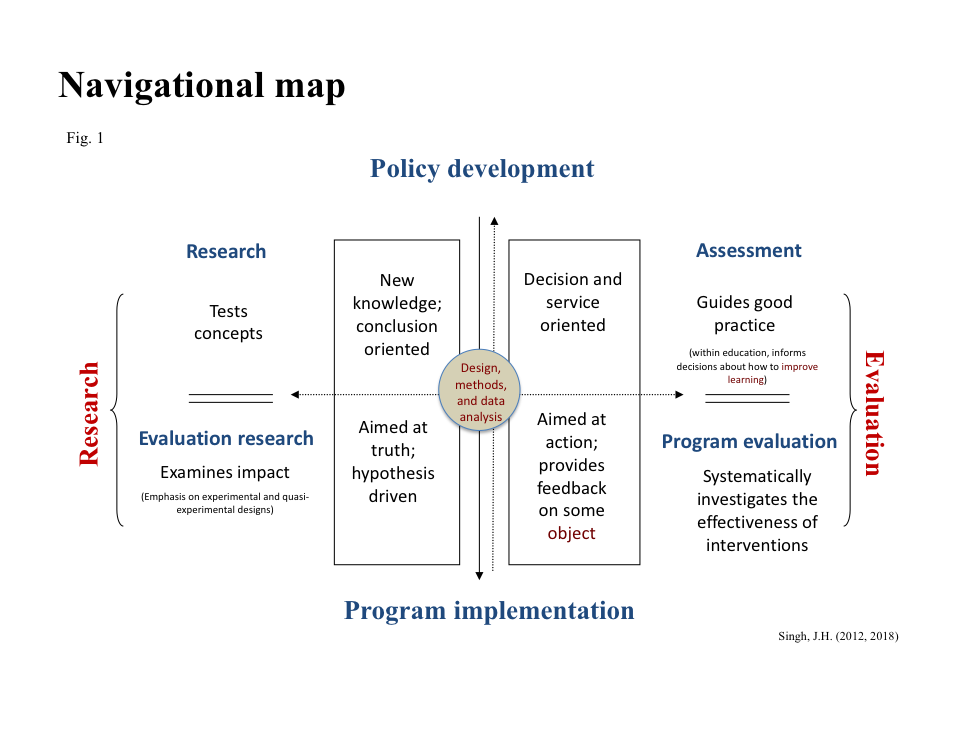

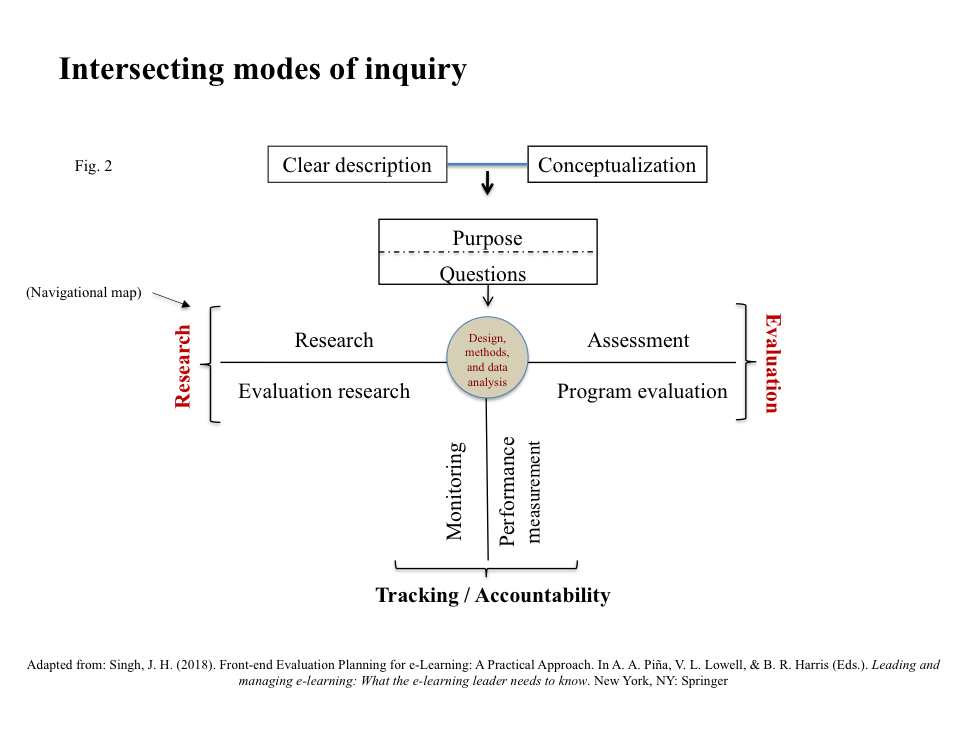

In this AEA365 article, I share two unique models: “Navigational map” (fig. 1) and “Intersecting modes of inquiry” (fig. 2). Each model captures major quadrants that differentiate research, evaluation research, assessment (within education settings), and program evaluation. I’ve developed and successfully applied these models to reduce conflation and increase alignment among stakeholders. The models show where the “intent” of an inquiry falls within the graphic, as well as two major “influencers” that shape an evaluation (i.e., policy development and program implementation). Once oriented to either model, one has a clearer sense of whether to proceed with research or evaluation approaches, and/or engage in monitoring and performance measurement. It’s not unusual for an investigation to be a hybrid of two or three quadrants, especially in educational settings. For example, a principal investigator (PI) might propose an experimental or quasi-experimental design and develop a tool (e.g., a rubric) to “assess” learning gains attributable to a mentoring program that uses specified instructional strategies (e.g., mentoring techniques, e-portfolio, problem-based learning). Such an approach would be considered “evaluation research,” as it seeks to contribute to generalizable knowledge that can support evidence-based decision making.

Sometimes interventions are not amenable to rigorous designs. Other modes of inquiry may intersect with research and evaluation, or become conflated with program evaluation, but they’re not the same. For example, monitoring and performance measurement involve ongoing measurement, whereas program evaluation occurs less often. Individuals responsible for managing interventions (subject matter experts, or SMEs) frequently help develop performance measures to systematically track and report performance as part of program accountability.

Hot Tips: 1) Don’t assume all stakeholders (e.g., government, grantors, nonprofits, academe, researchers, administrators) define or differentiate research, evaluation research, assessment, and program evaluation the same way; 2) have a meaningful conversation at the outset to align primary information users on the appropriate mode(s) of inquiry, purpose, and question(s) to be answered; 3) clearly articulate or define the intervention’s design, its components or subcomponents, respective goals, objectives, its context and stage of maturity, timeline, etc.; 4) display interventions in a graphic (e.g., conceptual framework, program theory, logic model).

Rad Resources: These resources can help stakeholders understand the varying modes of inquiry:

- gov (regulations and policy/decision charts) The Office for Human Research Protections (OHRP) graphic aids help decide if an activity is research that must be reviewed by an IRB.

- Program Evaluation and Performance Measurement: An Introduction to Practice

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.