I’m Erika Steele, a Health Professions Education Evaluation Research Fellow at the Veterans Affairs (VA) National Center for Patient Safety (NCPS). For the past few years, I have collaborated with Research Associates at the Center for Program Design and Evaluation at the Dartmouth Institute for Health Policy and Clinical Practice (CPDE) to evaluate the VA’s Chief Resident in Quality and Safety (CRQS) program. CRQS is a one-year post-residency experience in which chief residents (CRs) develop skills in leadership and in teaching quality improvement (QI) and patient safety (PS). Since 2014, Research Associates at CPDE have conducted annual evaluations of the CRQS program. In 2015, we began evaluating the CRQS curriculum by developing a reliable tool to assess the QI/PS projects led by CRs.

One of the joys and frustrations of being an education evaluator is designing an assessment tool, testing it, and discovering that your clients apply the tool inconsistently. This post focuses on the lessons we learned about norming, or calibrating, a rubric for rater consistency while pilot testing the Quality Improvement Project Evaluation Rubric (QIPER) with faculty at NCPS.

Hot Tips:

- Develop an understanding of the assessment tool’s goals

Raters sometimes have a hard time separating grading individual learners from assessing how well the program’s curriculum prepares them. To help faculty at NCPS view the QIPER as a program evaluation tool, we pointed out patterns in CRs’ scores. Once faculty began to see these patterns for themselves, the conversations moved away from individual performance on the QIPER and back to evaluating how well the curriculum prepares CRs to lead a QI/PS project.

Once raters understood the purpose of the QIPER, instances of leniency, strictness, and first-impression errors decreased, and rater agreement improved.

- Create an environment of respect

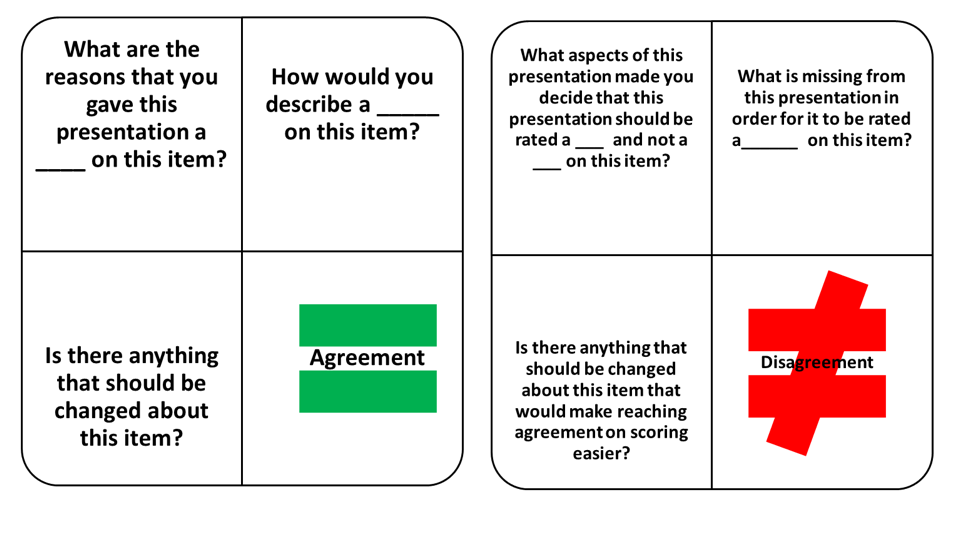

Score negotiation and consensus building can only occur when every rater has the opportunity to share ideas with the others. We used the Round Robin Sharing (RRS) technique to let faculty discuss their expectations, their rationale for scoring, and ways to make reaching consensus easier. The graphic organizer in Figure 1 guided these discussions.

RRS helped faculty develop common expectations for how CRs should lead QI/PS projects, which in turn increased rater agreement when scoring projects.

Figure 1: Round Robin Sharing Conversation Guidance

- Build strong consensus

Clear instructions are essential for ensuring that raters apply an assessment tool consistently. Drawing on the ideas generated during RRS, we engaged the faculty in building a guidance document that operationalizes each QIPER item and explains how to apply the rating scale. Faculty used this document as a reference when rating presentations.

Having a reminder of the agreed-upon standards helped raters apply the QIPER more consistently when scoring presentations.

Rad Resources:

- Strategies and Tools for Group Processing.

- Trace J, Meier V, Janssen G. “I can see that”: Developing shared rubric category interpretations through score negotiation. Assessing Writing. 2016;30:32-43.

- Quick Guide to Norming on Student Work for Program Level Assessment.

The American Evaluation Association is celebrating MVE TIG Week with our colleagues in the Military and Veteran’s Issues Topical Interest Group. The contributions all this week to aea365 come from our MVE TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.