Hello, AEA365 community! Liz DiLuzio here, Lead Curator of the blog. This week is Individuals Week, which means we take a break from our themed weeks and spotlight the Hot Tips, Cool Tricks, Rad Resources and Lessons Learned from any evaluator interested in sharing. Would you like to contribute to a future Individuals Week? Email me at AEA365@eval.org with an idea or a draft and we will make it happen.

Hello! I’m Jeff Kosovich and I am a senior evaluator at the Center for Creative Leadership. One of the challenges of producing technical reports and surveys meant for people without your expertise is avoiding unnecessary complexity and jargon. I’m currently testing the effectiveness of tools like ChatGPT as a time-saving method of making surveys and reports more accessible.

Language as a barrier to science access is a major problem. It’s worse because even many of us who want science to be open and accessible to everyone have a hard time writing technical content in an accessible way.

Over the past 3 years I’ve been developing a community scan meant to provide data from community members about the challenges they face and the unique tools they must use to address the challenges.

Two things have kept me up at night: making sure the survey is not too academic for respondents, and making sure the results aren’t too technical for the leaders who read the report.

Several rounds of testing have consistently led to feedback that the language is too academic for many people who might take the survey.

I’ve had some training in translating complex concepts into lay terms for client interactions. I’ve even been told I’m pretty good at it. But it’s not easy, and it can be incredibly time-consuming to consider different rewrites and decide on the right wording.

Cool Trick

I’ve been wanting to check out ChatGPT for a while, and I started wondering if it could help revise my text to be less wordy and more accessible. Recommendations in the media vary, but many sources suggest around 8th grade as the target reading level for the average US resident.

I don’t really like grouping people based on what grade level they read at, but I do think that as you get past 8th grade, the things you learn in school start to become increasingly different, which means people may not have the same vocabulary. Even if someone has a lot of education, they can still come across words they don’t know, especially in technical language. And when you think about people who speak multiple languages and are still learning the language you’re using, it becomes even more important to make surveys and reports easier to understand.

Given the extensive research on reading comprehension and tools for grading the complexity of text, I decided to see how effectively ChatGPT could adapt text to different reading levels.

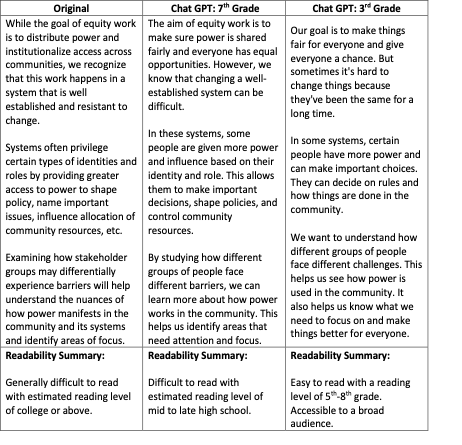

The image shows my original text along with that same statement rewritten for 7th grade and 3rd grade reading levels. Below them are summaries from a reading level calculator website (I tried several, and each provided similar results). I think the results look pretty good.
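The post does not say which formula the reading level calculators used. For readers who want a rough check without a website, here is a minimal Python sketch of one widely used measure, the Flesch-Kincaid grade level. The syllable counter is a crude vowel-group heuristic chosen for illustration (an assumption, not what any particular calculator does), so scores may differ from online tools by a grade or so.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate: count groups of consecutive vowels.

    This is a heuristic for illustration only; dictionary-based
    counters are more accurate.
    """
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing silent "e" (as in "make") as not adding a syllable.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Estimate the US school grade level of `text`.

    Formula: 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (
        0.39 * len(words) / len(sentences)
        + 11.8 * syllables / len(words)
        - 15.59
    )
```

Running `flesch_kincaid_grade` on an original passage and on each rewrite gives a quick way to see whether a revision moved in the right direction. Very short passages produce unstable scores, so it works best on a full paragraph or more.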

As with any use of a tool like ChatGPT, it’s not perfect. Sometimes the rewrites became overly simplistic and lost some core meaning. Other times, they still seemed a little too complex. In both cases I was able to ask for additional rewrites. You can either resubmit your prompt with updated instructions or just tell it to try again. I found resubmitting the original text to be a little more reliable.
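The retry approach described above can be sketched as a small loop. This is only an illustration under stated assumptions: `rewrite` is a hypothetical function you would supply to send a prompt to ChatGPT (or another tool) and return its reply, `grade_of` is any reading level scorer you trust, and the prompt wording is a made-up example rather than the one used for this post. Each attempt resubmits the original text instead of revising the previous rewrite.

```python
from typing import Callable, Optional, Tuple


def build_prompt(text: str, grade: int) -> str:
    """Build a rewrite request. The wording here is a hypothetical example."""
    return (
        f"Rewrite the following text at a grade {grade} reading level. "
        "Keep the original meaning.\n\n"
        f"{text}"
    )


def revise_until_target(
    original: str,
    target_grade: int,
    rewrite: Callable[[str], str],      # hypothetical: sends a prompt, returns the reply
    grade_of: Callable[[str], float],   # any reading level scorer
    max_tries: int = 3,
    tolerance: float = 1.0,
) -> Optional[Tuple[str, float]]:
    """Resubmit the original text until a rewrite lands near the target grade.

    Returns the closest (rewrite, grade) pair seen, or None if max_tries < 1.
    """
    best: Optional[Tuple[str, float]] = None
    for _ in range(max_tries):
        # Always start from the original text, not the last rewrite.
        candidate = rewrite(build_prompt(original, target_grade))
        score = grade_of(candidate)
        if best is None or abs(score - target_grade) < abs(best[1] - target_grade):
            best = (candidate, score)
        if abs(score - target_grade) <= tolerance:
            break
    return best
```

A grade score only measures sentence and word length. It cannot tell whether a rewrite lost core meaning, so each candidate still needs a human read before it goes into a survey or report.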

I have yet to test respondent reactions to the different revisions, but on the surface (and based on several different reading level calculators), AI tools like ChatGPT seem promising for improving survey quality and making scientific writing more accessible.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.

I tried this too, Jeff, a month ago for an assignment in Ann K. Emery’s Report Redesign course.

I compared my before-after changes, aiming to decrease the reading level by 2 grades. Since I don’t write reports any more, I used a para from a post I wrote on LinkedIn. First I lowered the reading level by editing the para myself, and then I decided to try a ChatGPT approach.

I posted this (w/a pic of before-after) in the course’s closed LinkedIn Group and this was my tl;dr:

- I can do a better job editing and revising the reading level downward, using Ann’s course guidance (3-grade decrease achieved).

- ChatGPT did a useful revision of my original, but it didn’t lower the reading level by 2 grades, only 1.

Hi Sue,

Thanks for reading and for your note. It is certainly the case that a completely manual approach is going to be more effective and is likely to achieve specific grade-level targets. As I mentioned, ChatGPT wasn’t perfect at it, and the calculated grade levels varied. However, at scale it isn’t always possible to dedicate the time to apply an in-depth manual process to a 10+ page report. This work had been on my to-do list for quite some time, and I’m not sure when I would have had time to fully revise it without the help.

I just wanted you to know you had support for this…and gave you my limited experience… I was just so glad to see this post on readability and its importance.