Hello. I am Karen Widmer, a fourth-year doctoral student in the Evaluation program at Claremont Graduate University. I’ve been developing and evaluating performance systems in business, education, healthcare, and nonprofit settings for a long time. I think organizations are a lot like organisms: while each organization is unique, certain conditions help them all grow. I get enthusiastic about designing evaluations that optimize those conditions!

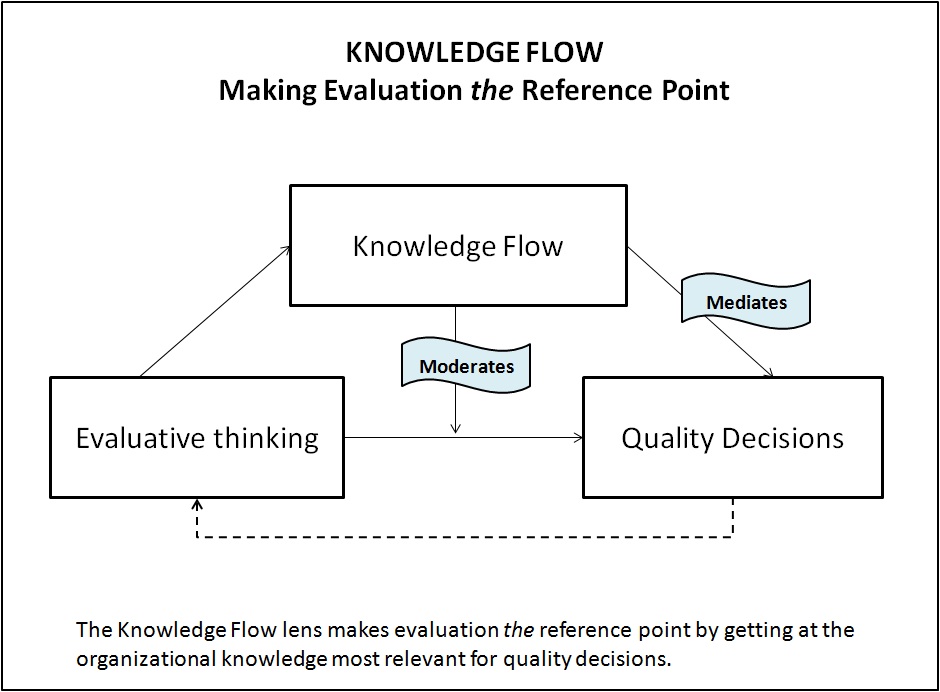

Theme: My master’s research project looked at evaluation-related activities shared by high-performing organizations. For these organizations, evaluation was tied to decision making. Evaluation activity pulled together knowledge about organizational impact, direction, processes, and developments, and that knowledge fed their decisions. The challenge for evaluation is to pool the streams of organizational knowledge most relevant to each decision.

Hot Tip:

- Evaluative thinking identifies the flow of organizational knowledge, which gives decision makers a point of reference for making quality decisions.

- In technical terms, Knowledge Flow may mediate or moderate the relationship between evaluative thinking and decision quality. Moreover, decision quality could be measured by the performance outcomes that result from the decision!

Cool Trick:

- Design your evaluation to follow the flow of knowledge throughout the evaluand’s lifecycle.

- Document what was learned when tacit knowledge was elicited; when knowledge was discovered, captured, shared, or applied; and when knowledge about the status quo was challenged. (To explore further, see the work of M. Polanyi, I. Becerra-Fernandez, and C. Argyris and D. Schön.)

- For the organizations I studied, these knowledge activities contained the evaluative feedback that decision makers wanted. The knowledge generated at these points revealed what was actually going on.

- For example, tacit perceptions could be drawn out through peer mentoring or a survey; knowledge could be captured on a flipchart or in software; or a team might “discover” knowledge new to the group or challenge knowledge that had previously gone undisputed.

Conclusion: Whether it is built into the evaluation design or taken as a snapshot, evaluative thinking can reveal the flow of knowledge critical to decisions about outcomes. Knowledge Flow offers a framework for connecting evaluation with the insights decision makers want for reflection and adaptive response. Let’s talk about it!

Rad Resource: The Baldrige Criteria for Performance Excellence is a great government publication that links evaluative thinking so closely to decisions about outcomes that you can’t pry them apart.

Rad Resource: A neat quote from Nielsen, Lemire, and Skov in the American Journal of Evaluation (2011) defines evaluation capacity as “…an organization’s ability to bring about, align, and sustain its objectives, structure, processes, culture, human capital, and technology to produce evaluative knowledge [emphasis added] that informs on-going practices and decision-making to improve organizational effectiveness.”

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

Yes, and. The point I wanted to stress was that evaluating an organization’s knowledge IS the mechanism by which, say, leadership does its strategic planning. At least for high-performing organizations! Evaluation should focus on assembling the knowledge needed for good decisions. Its virtue then speaks for itself. By “truth,” Chad, did you mean the knowledge held by the front line? Eric, do you see the drive for results happening without knowledge?!

Karen, here’s a recent example. I belong to an equity committee that spans multiple offices within my department. When I suggested that we needed to define equity if we wished to apply it in the way you outlined in your post, the reply was that I should interview senior staff for their opinion on what it meant. In other words, senior staff were seen as the sensemaking mechanism. It’s ironic, since one of the principles of this committee is “speak your truth.”

Chad

It was refreshing to look back at the Baldrige Criteria through the older eyes of an internal evaluator. “Hot” and “Rad” are apt words, because in the government circles I’ve been moving in lately, “leadership” and “strategic planning” are often hot and rad notions, mainly because of their scarcity. Nevertheless, championing a frank assessment of leadership and strategic planning is a must for an evaluator. Resources being what they are, today’s emphasis is on the “results” criterion, leaving the others to fight for attention.

Thank you for your post.

Eric

Excellent post. I’ve extolled the virtues of Baldrige for years at my school district. If you want to do something like this in your organization, your leadership must let go of the notion that truth comes only from them.

Best,

Chad