We are Elyssa Lewis from the Horticulture Innovation Lab and Amanda Crump from the Western Integrated Pest Management Center. Our organizations fund applied research projects, and today we are exploring how to evaluate research-for-development projects.

In our experience, international donors and organizations have difficulty evaluating research projects. They are more accustomed to designing and conducting evaluations that focus on the impacts of interventions, which means counting large numbers and looking at population-level outcomes. The impacts of research are often less tangible and do not fit easily into the implementation-oriented monitoring and evaluation systems that many donors use.

This puts researchers in a difficult position. When they try to “speak the language” of donors, they often propose evaluation plans that suit intervention-type projects better than their actual research. We often receive proposals in which the research team has tried to force the square peg of its evaluation section into the round hole of donor ideas about impact.

As funders, we need a better way of evaluating the research we fund, because it is clear that we cannot evaluate the impact of research using an intervention-based framework.

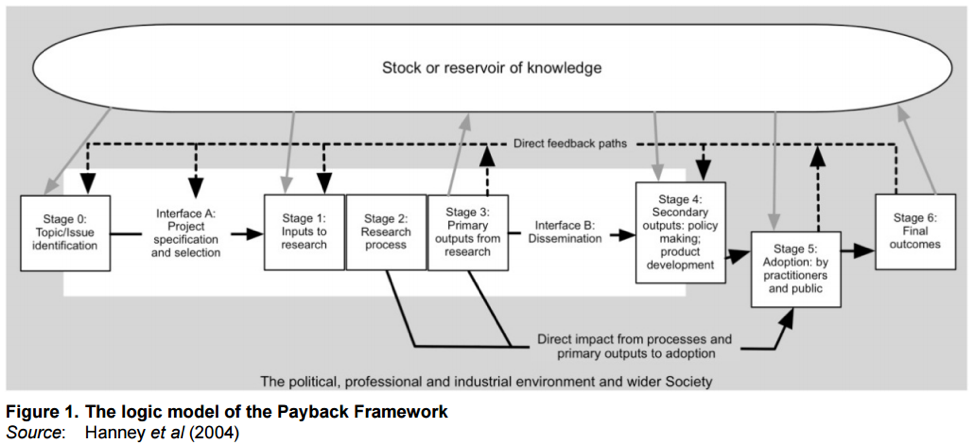

As evaluators, we have been thinking about this conundrum and stumbled across this framework. If you fund or write research proposals, we hope this will help you.

Hot Tips:

- Research for development lies on a continuum from basic research to actionable knowledge and/or the creation of products or services. Depending on where a project sits along this continuum, the distinction between research and intervention can get murky. It is therefore important to be explicit about what the goal of the research actually is. What question(s) are the researchers trying to answer? A clear answer will help funders understand whether they are indeed contributing to the global knowledge bank in the way they hope to.

- Research evaluations should go beyond simply asking whether the research succeeded. We propose that researchers, especially those conducting applied research for development, think about how best to position their research so that it is picked up by those who need it most and who can take it to scale (if appropriate). This way, the evaluation can look beyond the research findings themselves and build a better understanding of the impact the research is having.

- Researchers should also make an effort to understand and disseminate negative results. Why? Although such results are often hard to publish, research that returns negative results can teach us a lot about international development. Knowing which technologies and/or interventions don’t work is just as helpful as knowing which ones do, if not more so. This way we are less likely to repeat the same mistakes over and over again.

Rad Resources:

Research Impact: creating, capturing and evaluating

Evaluating the Impact of Research Programmes – Approaches and Methods

The ‘Payback Framework’ Explained, by Claire Donovan and Stephen Hanney (2011)

The American Evaluation Association is celebrating International and Cross-Cultural (ICCE) TIG Week with our colleagues in the International and Cross-Cultural Topical Interest Group. The contributions all this week to aea365 come from our ICCE TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.