Hi. My name is Catherine Dun Rappaport. I am the Vice President of Learning and Impact Measurement at BlueHub Capital. BlueHub is a national, nonprofit, community development financial institution (CDFI) dedicated to building healthy communities where low-income people live and work. I used to work in applied evaluation and learning at United Way of Mass Bay. Before that, I did research (a lot of research) at Abt Associates.

My lessons learned are about making research useful and practical for smart, engaged constituents who are not evaluators. I am particularly interested in ensuring that research is relevant to people who spend 90% of their time implementing or financing programs for low-income communities.

So, what have I learned from years of doing research and consulting and being the in-house evaluation “expert”?

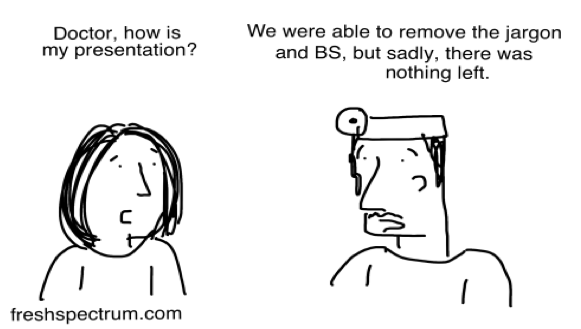

1.) Explain your work and findings in clear, plain language. Many smart people do not speak wonk. Terms such as “R-squared” and “statistical significance” are not accessible to people who have not spent their careers doing evaluation.

A recent evaluation of a component of BlueHub’s community-focused work produced terrific findings that we wanted to share with all staff. We did this successfully by creating a non-technical slide deck that used infographics to highlight learnings.

2.) Start with a learning agenda and right-size. Sit down with your team or the community and determine what they want to learn, why, and how they expect to use evaluation findings. Then, consider resources and right-size the work.

For the evaluation I just noted, we took several months to work with the program leadership to determine what they wanted to learn and why. We also assessed the state of current data about the program. That enabled us to scope out a design that used current data, saved cost, and met learning priorities.

3.) Invest time in helping constituents understand your assumptions and the generalizability of the work. Put limitations front and center; few people will read footnotes. Don’t apologize for your work, but make sure your audience understands what your findings do and do not prove.

For another BlueHub business component, we developed a social impact rating tool to quantify how projects we are considering financing align with organizational priorities. When we present findings from this tool, we celebrate what they can tell us and we highlight questions we cannot answer, usually because we implemented the tool before projects were final.

4.) Engage stakeholders in the work of evaluation. Research and evaluation are things we do together with stakeholders, whether colleagues or community members, not something we do to them.

On our projects, my colleagues’ deep understanding of their practice informs and enhances our work. We partner with staff to ensure that assessments speak to our CDFI’s value-add and to refine drafts and deliverables.

Rad Resource: I found helpful guidance about how to conduct research and applied learning in the CDFI context in the following document: https://www.urban.org/sites/default/files/publication/90246/cdfi_compliance_to_learning_0.pdf

The American Evaluation Association is celebrating Community Development TIG Week with our colleagues in the Community Development Topical Interest Group. The contributions all this week to aea365 come from our CD TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.