Hello, AEA365 community! Liz DiLuzio here, Lead Curator of the blog. This week is Individuals Week, which means we take a break from our themed weeks and spotlight the Hot Tips, Cool Tricks, Rad Resources and Lessons Learned from any evaluator interested in sharing. Would you like to contribute to a future Individuals Week? Email me at AEA365@eval.org with an idea or a draft and we will make it happen.

Hi, this is Holly Carmichael Djang and Rocío Bravo of the Monitoring, Evaluation, Research & Learning (MERL) Team at Tilting Futures. Tilting Futures equips young people worldwide to create meaningful impact on global issues. Our Immersive Learning Programs enhance students’ emotional and intellectual skills through collaboration with diverse communities and cultures worldwide. Tilters graduate into our lifelong network with a permanent perspective shift on the world and what they’re capable of achieving within it.

Tilting Futures has recently designed and launched a brand-new program, Take Action Lab, informed by research and over a decade of experience. Given that we are an organization dedicated to learning (ours and our students’), we knew it would be important to design a method to both capture and apply our learnings as the program was launching.

Rad Resource

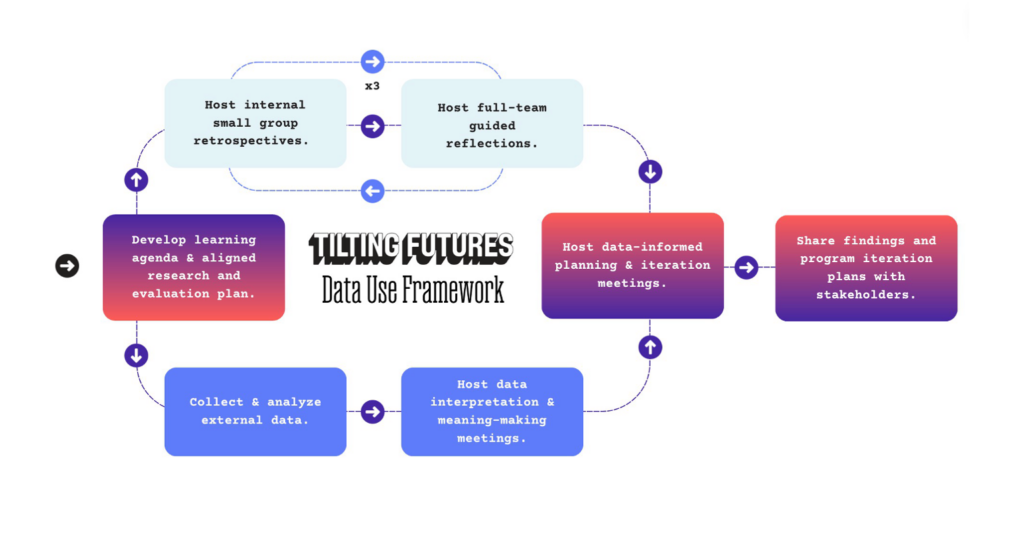

After a lot of online digging and a few conversations with organizations also interested in data application and use, we developed the following Data Use Framework. We’ve used this over the past three program terms and are excited to share it with others.

HOST DATA INTERPRETATION & MEANING-MAKING MEETINGS

After each data collection activity aligned to our learning agenda, the MERL team meets with key internal stakeholders to collectively interpret the findings. These meetings are intended to help the stakeholders understand the data, discuss context for the results, and co-develop recommendations and next steps.

HOST SMALL GROUP RETROSPECTIVES

Throughout each term, the MERL team holds three meetings with small, workstream-specific internal teams. These sessions provide a forum for team members to share challenges and observations related to program implementation. They also allow us to capture and document insights that may not be evident in the data we’re collecting from other stakeholders.

HOST GUIDED REFLECTIONS

Following each series of Small Group Retrospectives, the MERL team synthesizes and reports on cross-workstream observations taken from the Retrospectives and then hosts a Full Team Guided Reflection. These meetings are designed to help the team process what we’re learning across workstreams, highlight key observations, and discuss how to make our learnings actionable.

HOST DATA-INFORMED PLANNING AND ITERATION MEETINGS

At the end of each program term, the MERL team collates all learnings and observations from the term and hosts workstream-specific data planning meetings. In these meetings, all relevant learnings from the term are reviewed together, and workstream owners are supported in reflecting on these learnings as a whole. Workstream owners are asked to reflect on and document:

- Approved Program Changes: These are changes that are already underway.

- Proposed Program Changes: These are changes the workstream owners would like to make, based on the data, and that need input or approval from others.

Proposed changes are then entered into a Program Change Tracker, a shared document that helps the appropriate team members provide input and approval on proposed changes. It also helps the full team understand program changes, hold themselves accountable for making them, and preserve institutional knowledge of program iteration.

SHARE FINDINGS AND PROGRAM ITERATION PLANS WITH STAKEHOLDERS

Importantly, at the end of each term we share our findings and learnings with our key external stakeholders, including our students! We also keep them abreast of the changes that we’re making in order to highlight how their voices were used to inform our program design.

We’re very proud of how robust this process is, particularly given the size of our organization and our MERL team. We’d be happy to share some of our guiding documents and processes with others, and look forward to hearing about similar systems of practice at other organizations.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.