I’m Lee-Anne Molony and I’m a Principal Consultant at Clear Horizon. We’re an Australian consultancy that specializes in participatory monitoring and evaluation for environmental management and agricultural programs. This post describes a technique we developed, Collaborative Outcomes Reporting (previously known as Participatory Performance Story Reporting). The technique is currently used nationally across the sector and has been endorsed by the Australian government.

Cool Trick: The Collaborative Outcomes Reporting technique presents a framework for reporting on contribution to long-term outcomes using mixed methods and a participatory process.

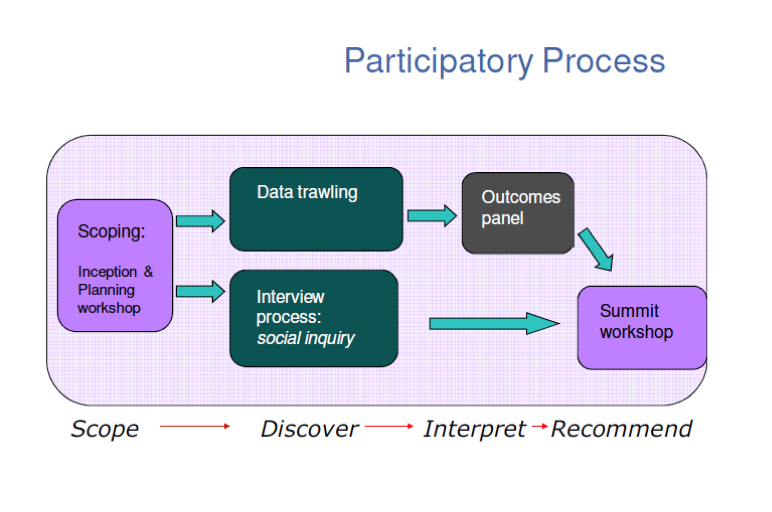

The process steps include clarifying the program logic, developing guiding questions for the social inquiry process and a data trawl. The approach combines contribution analysis and multiple lines and levels of evidence. It maps existing data against the theory of change, and then uses a combination of expert review and community consultation to check the credibility of the evidence about what impacts have occurred and the extent to which these can be credibly attributed to the intervention.

A suggested process in undertaking Collaborative Outcomes Reporting

Final conclusions about the extent to which a program has contributed to expected outcomes are made at an ‘outcomes panel’, and recommendations are developed at a large group workshop involving representatives of those with a stake in the program and/or its evaluation.

Collaborative Outcomes Reporting has now been used in a wide range of sectors, including overseas development, community health, and Indigenous education. The majority of the work has occurred in the environmental management sector, with the Australian government funding 14 pilot studies in 2007-8 and a further 10 (non-pilot) studies in 2009.

Many organizations have since gone on to adopt the participatory process outright, or specific steps within the process, for their own (internal) evaluations.

Lesson learned: Organizations often place a high value on the reports produced using this technique because they strike a good balance between depth of information and brevity and are easy for staff and stakeholders to understand.

The reports help build a credible case that a contribution has been made. They also provide a common language for discussing different programs and help teams focus on results.

Rad Resource: A discussion of the process can be found on our website and the manual for implementing the technique is available at the Australian Government Department of Environment website.

The American Evaluation Association is celebrating Environmental Program Evaluation Week with our colleagues in AEA’s Environmental Program Evaluation Topical Interest Group. The contributions all this week to aea365 come from our EPE TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.