Hi! My name is Antonio Olmos, Executive Director of the Aurora Research Institute (ARI) in Aurora, Colorado. ARI, a subsidiary of Aurora Mental Health and Recovery, provides evaluation services in Mental Health, Substance Abuse, Law Enforcement (Crisis Response Teams), and Public Health. We have extensive experience with federal and state grants.

When we conduct evaluations, we always develop or revise the theory of change and logic models to understand how programs achieve their goals. In doing so, we’ve found that mediation and moderation are essential in uncovering the mechanisms driving program success. However, we rarely see these concepts in other evaluations and wonder why.

What Are Mediation and Moderation?

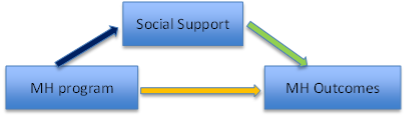

Mediation occurs when a variable (called a mediator) explains the relationship between treatment and outcome. For example, a program designed to improve mental health might lead to increased social support, which then improves outcomes. Social support is the mediator. A mediation model looks like this:

By identifying mediators, evaluators can better understand how and why a program works. If a program falls short of expectations, mediation analysis may reveal where the breakdown occurs.
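To make the idea concrete, here is a minimal sketch of a single-mediator analysis on simulated data, using only the Python standard library. Everything in it is an assumption for illustration: the variable names (`treatment`, `support`, `outcome`), the simulated effect sizes, and the choice of a simple percentile bootstrap for the indirect effect. It is not the procedure ARI uses, and a real evaluation would typically rely on a dedicated statistics package.

```python
import random

def mean(values):
    return sum(values) / len(values)

def cov(u, v):
    """Sample covariance of two equal-length lists."""
    mu, mv = mean(u), mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)

def slope(x, y):
    """OLS slope of y on a single predictor x (with intercept)."""
    return cov(x, y) / cov(x, x)

def two_predictor_slopes(x1, x2, y):
    """OLS slopes of y on x1 and x2 (with intercept), via the normal equations."""
    s11, s22, s12 = cov(x1, x1), cov(x2, x2), cov(x1, x2)
    s1y, s2y = cov(x1, y), cov(x2, y)
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det

def paths(treatment, mediator, outcome):
    """Return (a, b, c_prime): treatment->mediator, mediator->outcome, direct effect."""
    a = slope(treatment, mediator)
    c_prime, b = two_predictor_slopes(treatment, mediator, outcome)
    return a, b, c_prime

# Simulated (hypothetical) data: the program raises social support,
# and social support improves the outcome.
rng = random.Random(42)
n = 1000
treatment = [rng.randint(0, 1) for _ in range(n)]
support = [0.5 * t + rng.gauss(0, 1) for t in treatment]
outcome = [0.4 * m + 0.1 * t + rng.gauss(0, 1)
           for t, m in zip(treatment, support)]

a, b, c_prime = paths(treatment, support, outcome)
indirect = a * b                     # effect carried through the mediator
total = slope(treatment, outcome)    # total effect of treatment on outcome

# Approximate 95% percentile bootstrap interval for the indirect effect.
n_boot = 200
boot = []
for _ in range(n_boot):
    idx = [rng.randrange(n) for _ in range(n)]
    est = paths([treatment[i] for i in idx],
                [support[i] for i in idx],
                [outcome[i] for i in idx])
    boot.append(est[0] * est[1])
boot.sort()
ci_low = boot[n_boot * 25 // 1000]
ci_high = boot[n_boot * 975 // 1000 - 1]

print(f"a={a:.3f}  b={b:.3f}  direct={c_prime:.3f}")
print(f"indirect={indirect:.3f}  total={total:.3f}")
print(f"bootstrap 95% CI for indirect: [{ci_low:.3f}, {ci_high:.3f}]")
```

In a linear model like this one, the total effect equals the direct effect plus the indirect effect, so the printout shows how much of the program's impact runs through social support. A bootstrap interval that excludes zero is one common piece of evidence for mediation, though it does not by itself establish a causal mechanism.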

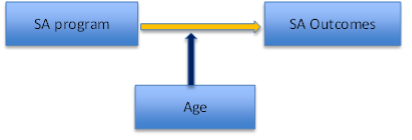

Moderation (often referred to as “interaction effects”), on the other hand, occurs when a variable (called a moderator) changes the strength or direction of the treatment-outcome relationship. For instance, a substance abuse program may work better for younger participants than older ones. Here, age is a moderator. A moderation model looks like this:

Understanding moderation helps evaluators see who benefits most from a program and under what circumstances.
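As a rough illustration, the sketch below simulates a program whose effect differs by age group and estimates the moderation (interaction) effect as the difference between the two subgroup treatment effects. The data, the field names (`treated`, `younger`, `outcome`), and the effect sizes are all hypothetical. Age is split into two groups only to keep the example short; with a continuous moderator, the usual approach is a regression that includes a treatment-by-moderator product term.

```python
import random

def mean(values):
    return sum(values) / len(values)

def treatment_effect(rows):
    """Difference in mean outcome between treated and untreated participants."""
    treated = [r["outcome"] for r in rows if r["treated"] == 1]
    control = [r["outcome"] for r in rows if r["treated"] == 0]
    return mean(treated) - mean(control)

# Simulated (hypothetical) data: the program helps everyone,
# but helps younger participants more.
rng = random.Random(7)
rows = []
for _ in range(8000):
    treated = rng.randint(0, 1)
    younger = rng.randint(0, 1)
    true_effect = 0.6 if younger else 0.2
    outcome = true_effect * treated + rng.gauss(0, 1)
    rows.append({"treated": treated, "younger": younger, "outcome": outcome})

effect_younger = treatment_effect([r for r in rows if r["younger"] == 1])
effect_older = treatment_effect([r for r in rows if r["younger"] == 0])

# With two binary variables, the interaction term of a saturated regression
# equals this difference between subgroup treatment effects.
interaction = effect_younger - effect_older

print(f"effect for younger participants: {effect_younger:.3f}")
print(f"effect for older participants:   {effect_older:.3f}")
print(f"moderation (interaction) effect: {interaction:.3f}")
```

An interaction estimate clearly different from zero suggests that age moderates the program's effect. In practice you would also want a standard error or confidence interval for that difference before drawing conclusions.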

Hot Tips

Using Mediation and Moderation in Evaluation

- Start with Your Logic Model: Use it to hypothesize potential mediators and moderators. Ask your program stakeholders: What mechanisms lead to change? Who benefits most?

- Collect the Right Data: Your data collection plan should include measures of mediators (e.g., behaviors, attitudes) and moderators (e.g., demographic characteristics). Ensure your design allows for testing these relationships.

- Use Simple Diagrams: Non-technical stakeholders often find these concepts hard to follow. Clear, simple mediation and moderation diagrams can help bridge that gap and make evaluation findings more accessible.

- Interpret with Caution: While mediation and moderation offer valuable insights, they don’t by themselves establish causality. A variable that statistically mediates or moderates an effect is not necessarily part of a causal relationship. Make sure claims are supported by rigorous testing.

Lessons Learned

At ARI, incorporating mediation and moderation in evaluations has deepened our understanding of program impacts. We can move beyond simple cause-effect models to explore how and why programs succeed or fail. The challenge often lies in communicating these advanced concepts to stakeholders, but with clear explanations grounded in real-world examples, we’ve found success.

Rad Resources

- Introduction to Mediation, Moderation, and Conditional Process Analysis by Andrew F. Hayes: A must-read for evaluators diving into these methods. Also check David MacKinnon’s and David Kenny’s websites.

- G*Power: A free tool for conducting power analyses to ensure your sample size can detect mediation and moderation effects.

By integrating mediation and moderation into your program evaluations, you can unlock more meaningful insights and better inform decision-making. So next time you’re developing or revising your logic model, don’t forget these two key principles!

The American Evaluation Association is hosting Quantitative Methods: Theory and Research Evaluation Week. The contributions all this week to AEA365 come from our Quant TIG members. Do you have questions, concerns, kudos, or content to extend this AEA365 contribution? Please add them in the comments section for this post on the AEA365 webpage so that we may enrich our community of practice. Would you like to submit an AEA365 Tip? Please send a note of interest to AEA365@eval.org. AEA365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.