Hi, I’m Elizabeth McGee, Founder and Senior Consultant at LEAP Consulting, helping nonprofits, foundations, and public institutions achieve impact through meaningful evaluations and strategic support.

Lessons Learned:

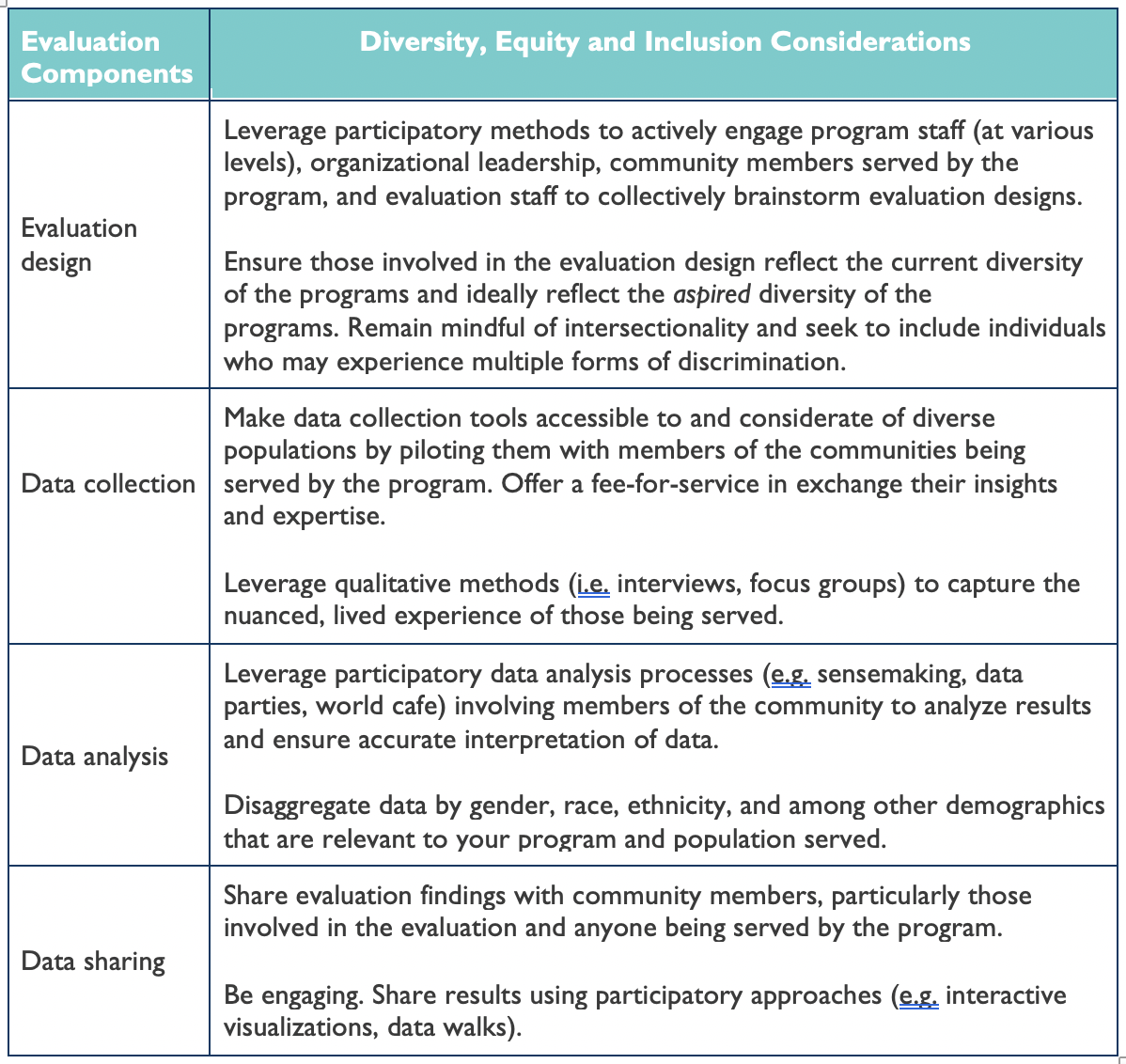

How we carry out our work as evaluators has a direct impact on the way programs and organizations see themselves, interpret their data, make recommendations and learn lessons. Building Diversity, Equity and Inclusion (DEI) considerations into all stages of the evaluation process is critical to ensuring DEI isn’t just a touted evaluative value, but an integral part of our practice.

The following is an overview of practical ways to embed DEI considerations into each stage of your evaluation approach.

Rad Resource:

Public Profit offers a great resource called Dabbling in the Data, a hands-on guide to participatory data analysis.

The American Evaluation Association is celebrating CP TIG Week with our colleagues in the Community Psychology Topical Interest Group. The contributions all week come from CP TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

Hi Elizabeth,

I found your blog post extremely interesting, especially since diversity, equity, and inclusion (DEI), more familiar to me as the acronym EDI, is a top priority across all fields and has great social relevance and impact. Full participation of equity-seeking groups can help achieve inclusive excellence among populations. Your post shed light on how evaluations can be meaningful and useful for program stakeholders, staff, participants, and the evaluator. The table in your post is self-explanatory and easy to follow, and it makes the connections between the different evaluation components clear. The evaluation components and DEI considerations are logically connected and fit together seamlessly. There also seems to be a running theme of keeping stakeholders in the loop at every step of the evaluation. When stakeholders know the program in detail, they can provide valuable feedback on improvements or changes that may be needed. Another point that stood out to me in your table is “Disaggregate data by gender, race, ethnicity and among other demographics that are relevant to your program and population served.” This highlights the many factors involved in any inclusive program and the aspects to consider in data analysis, making it more holistic and unbiased.

Finally, the “Dabbling in the Data” resource is an interesting read and a guide to using data properly and learning analysis techniques, with activities and guidelines for organizing and analyzing data.

Thanks for this educative and interesting blog post.

Hi Elizabeth, thank you so much for sharing the importance of incorporating diversity, equity and inclusion within all stages of the evaluation process. I am in the process of obtaining my master’s degree and am taking a course in which we are learning about everything that goes into an evaluation, as well as conducting our own evaluation of a non-profit program. This being my first time learning about evaluations, let alone conducting one, I found your article extremely helpful in showing how evaluators can make their evaluations meaningful and useful for program stakeholders, staff and the evaluator.

Your table on ways to embed Diversity, Equity and Inclusion (DEI) was easy to read and gave evaluators ways to make sure that DEI considerations are included in all the evaluation components. I wish I had read your post when starting my evaluation design, as the table you have provided can be used as a checklist and reminder for evaluators (and myself) to ensure our evaluation is equitable, inclusive, and diverse. A big theme I see in the table is the need to include stakeholders constantly and consistently in the evaluation in a meaningful way. When evaluators make meaningful connections with stakeholders and involve them in the creation of the evaluation, there is more buy-in. Furthermore, stakeholders can then be a part of analyzing the data if they understand the type of evaluation being conducted, which will lead, as you stated, to a more accurate interpretation of data.

Within my own evaluation, I have recently been looking at data analysis, and I really like your suggestion of involving members of the community in analyzing results. Would you say that, prior to inviting community members to help analyze data, you would have to hold training sessions on analysis? And would holding training sessions discourage community members from joining the analysis process?

Finally, I also really love the Dabbling in the Data resource and how they provide activities to introduce/enhance learning analysis techniques as well as the different activities for how to organize and analyze data. I will be looking at this resource when analyzing my own set of data.

Hi Elizabeth,

I was drawn to your blog post after searching “equity” in evaluation. I am a district principal with a school board just north of Toronto, where equity, diversity and inclusion (we use the acronym EDI) is one of four strategic priorities. Monitoring and evaluation of our programs is an essential component of my role, and I continue to pursue opportunities to deepen my understanding of EDI practices.

I appreciate the chart you shared, which offers specific ideas for embedding diversity, equity and inclusion within each stage of a program evaluation design. One particular idea piqued my curiosity: leveraging participatory data analysis processes. The Dabbling in the Data resource you shared then provided more detail. Specifically, I was drawn to the “Contribution” activity, which is “recommended for users working through data to understand how their work leads to change, both directly and indirectly.”

I am currently developing a program evaluation design for an initiative in my district focused on improving student performance in mathematics, and each of the suggested activities in this section – Easy as Pie, The Tip of the Iceberg and Force Field Analysis – could help our team make connections between the program’s elements and their impact on our broader district performance measures. Some of these measures include safe learning spaces, mentally healthy schools and graduation rates.

Thank you for your inspiring post and for sharing these “Rad” resources!

Kristen