Hi, I’m Barbara Klugman. My practice focuses on evaluation of social justice advocacy. One of the challenges I frequently bump into is how to evaluate training done or conferences run in support of advocacy. Clients may have done a post-event survey indicating that participants gained new knowledge or met new people, both of which were goals of the training or conference; but clients do not know whether this learning actually strengthened the quality of participants’ advocacy.

Lessons Learned: To address this, trainers are encouraged to gather data that distinguishes learning from action, preferably in the form of observable changes in behavior, relationships, actions, policies, or practices. To gain verifiable information, I ask participants for the details and nuances of how they applied what they learned, which moves them away from talking in generalities. For example, questions that include when, where, and who can nudge participants to describe events that could in principle be verified, rather than to make broad statements of use. For advocacy purposes, the goal is then to understand whether the participants’ actions produced the desired advocacy results. I like to use a two-part system. First, I ask a yes/no question: did the actions influence any actor? Second, if the answer is yes, I again probe for details that could, in theory, be verified. For example, “Can you please describe what the stakeholder did or said differently after you engaged them, and when and where this took place?”

Hot Tip: Don’t automatically dismiss observation as a data collection method. Many advocacy trainers think observation is too costly or impractical. However, many advocacy events are observable, and organizations can use a simple template to capture changes in behaviors, relationships, policies, or practices in those settings.

Cool Trick: Don’t get stuck in overly prescribed outcome frameworks. Because advocacy work is dynamic, the outcomes of trainings can be highly variable. Rather than looking only at changes in target competencies or at specific desired outcomes, an approach like Outcome Harvesting can capture more nuanced aspects of the influence of advocacy training.

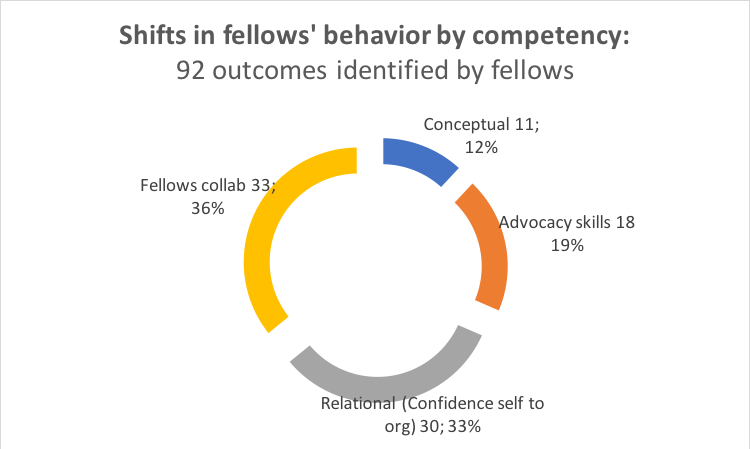

For example, as a developmental evaluator of the Atlantic Fellows for Health Equity based at Tekano (South Africa), I have captured outcomes from interviews, some of which I conducted and some of which were conducted by the internal evaluator. The harvest gave us the following information regarding fellows’ descriptions of shifts in their behavior, which we organized according to the three competencies the programme aims to improve, and we also categorized the actions fellows took to engage other fellows.

Lessons Learned: It is important for advocacy training evaluators to remember that the effectiveness of participants’ advocacy will be heavily influenced by whether the prevailing environment gives them the opportunity to use the skills they gained. As a result, evaluators should build a context analysis into their assessment of outcomes influenced by the training or conference.

Rad Resources:

- The Kirkpatrick Model, for the rationale for distinguishing reaction and learning from behavior change, and from the results that such behavior in turn influenced.

- For detail on how to categorize outcomes see Ricardo Wilson-Grau’s publication on Outcome Harvesting. http://www.managingforimpact.org/sites/default/files/resource/outome_harvesting_brief_final_2012-05-2-1.pdf

AEA365 is hosting the APC (Advocacy and Policy Change) TIG week.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.