I’m Giovanni Dazzo, co-chair of the Democracy & Governance TIG and an evaluator with the Department of State’s Bureau of Democracy, Human Rights and Labor (DRL). I’m going to share how we collaborated with our grantees to develop a set of common measures (around policy advocacy, training and service delivery outcomes) that would be meaningful to them, as program implementers, and to DRL, as the donor.

During an annual meeting with about 80 of our grantees, we wanted to learn what they were interested in measuring, so we hosted an interactive session using the graffiti-carousel strategy highlighted in King and Stevahn’s Interactive Evaluation Practice. First, we asked grantees to form groups based on program themes. Next, each group was handed a flipchart sheet listing one measure and had a few minutes to judge its value and utility. This was repeated until each group had posted thoughts on eight measures. In the end, this rapid feedback session generated hundreds of pieces of data.

Hot Tips:

- Add data layers. Groups were given different colored post-it notes, representing program themes. Through this color-coding, we were able to note the types of comments from each group.

- Involve grantees in qualitative coding. After the graffiti-carousel, grantees coded data by grouping post-its and making notes. This allowed us to better understand their priorities, before we coded data in the office.

- Create ‘digital flipcharts’. Each post-it note became one cell in Excel. These digital flipcharts were then coded by content (text) and program theme (color). Here’s a handy Excel macro to compute data by color.

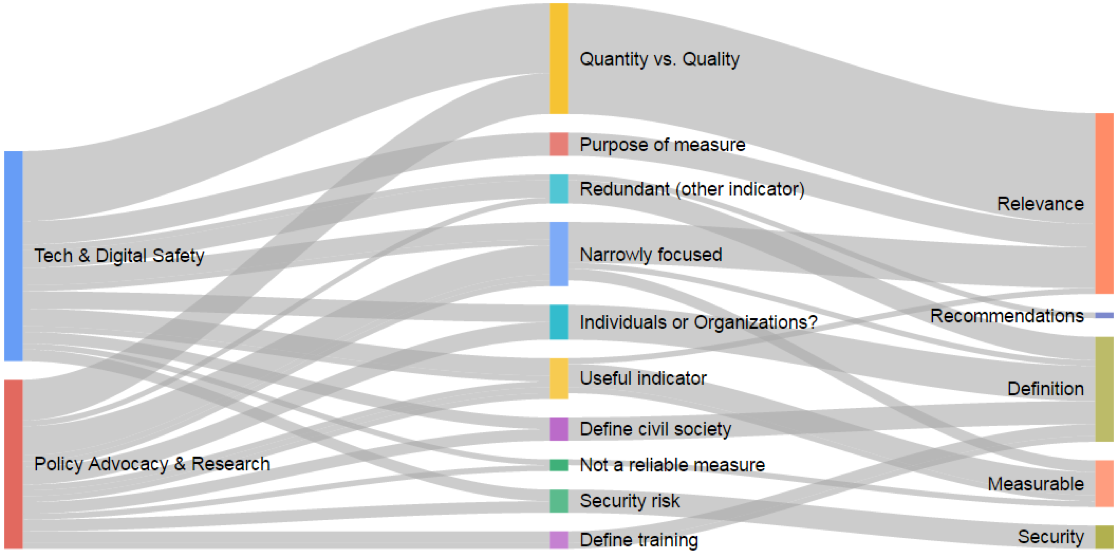

- Data visualization encourages dialogue. We created Sankey diagrams using Google Charts, and shared these during feedback sessions. The diagrams illustrated where comments originated (program theme / color) and where they led (issue with indicator / text).
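
If you would rather script the tally than count by hand, here is a minimal Python sketch of how color-and-text coded post-its could be turned into the rows a Sankey diagram needs. It is an illustration, not the process we used: the theme names, issue labels, and the `sankey_rows` helper are all made up for the example. It assumes each post-it has already been coded as a (program theme, issue) pair, and it outputs rows in the From / To / Weight layout that Google Charts' Sankey chart expects.

```python
from collections import Counter

# Hypothetical coded post-its: (program theme from note color, issue coded from note text).
# These labels are invented for illustration only.
notes = [
    ("Theme A", "Unclear wording"),
    ("Theme A", "Unclear wording"),
    ("Theme A", "Hard to measure"),
    ("Theme B", "Hard to measure"),
    ("Theme B", "Hard to measure"),
    ("Theme B", "Hard to measure"),
]


def sankey_rows(coded_notes):
    """Count identical (theme, issue) pairs and return [from, to, weight] rows."""
    counts = Counter(coded_notes)
    # Sort so the output order is stable from run to run.
    return [[theme, issue, n] for (theme, issue), n in sorted(counts.items())]


rows = sankey_rows(notes)
for row in rows:
    print(row)
```

Each row says how many comments flowed from one program theme to one issue, so the list can be pasted into a Sankey data table with very little cleanup.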

Lessons Learned:

- Ground evaluation in program principles. Democracy and human rights organizations value inclusion, dialogue and deliberation, and these criteria are the underpinnings of House and Howe’s work on deliberative democratic evaluation. We’ve found it helpful to ground our evaluation processes in the principles that shape DRL’s programs.

- Time for mutual learning. It’s been helpful to learn more about grantees’ evaluation expectations and to share our information needs as the donor. The process that followed our graffiti-carousel session took five months and included several feedback sessions. During this time, we assured grantees that these measures were just one tool, and we discussed other useful methods. While regular communication created buy-in, we’re also testing these measures over the next year to allow for sufficient feedback.

- And last… don’t forget the tape. Before packing your flipchart sheets, tape the post-it notes. You’ll keep more of your data that way.

The American Evaluation Association is celebrating Democracy & Governance TIG Week with our colleagues in the Democracy & Governance Topical Interest Group. The contributions all this week to aea365 come from our DG TIG members. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.