Hello from Florent and Margaret in Sydney! We are two seasoned evaluators from ARTD Consultants, an Australian public policy consultancy firm providing services in evaluation, research and strategy. As more Australian government services and programs are delivered through partnerships, evaluators need better methods for evaluating partnerships. Faced with the challenge of evaluating partnerships, we quickly realised that there are a number of methods out there: partnership assessment surveys of varying types, social network analysis, collaboration assessment, integration measures and more.

But which one should we choose? Having looked at a number of these, we felt that choosing just one would not let us see what was really happening at all levels of the partnership.

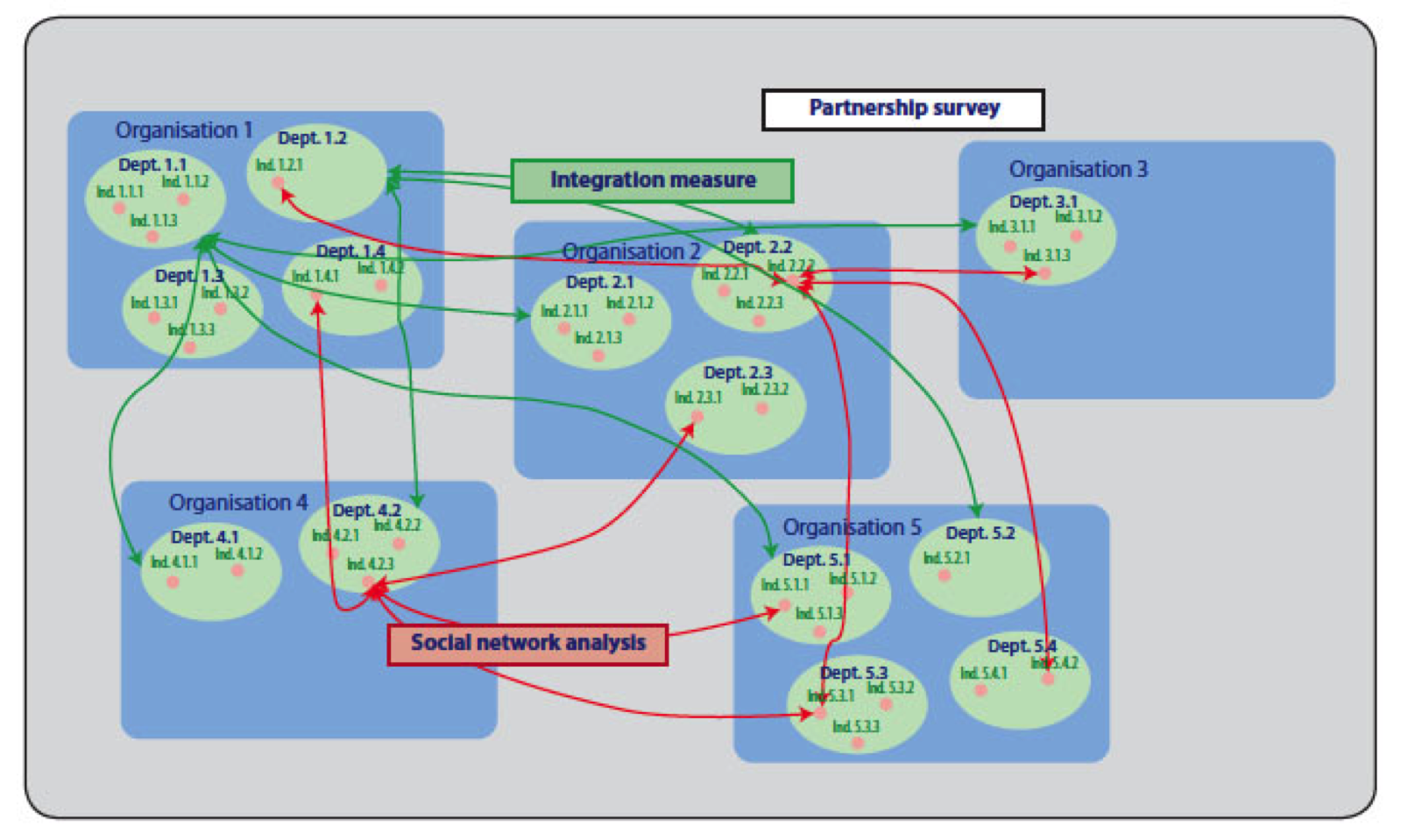

So, in our most recent partnership evaluation, we combined some of these methods to get a more complete picture of the partnership. The three we chose were: a partnership survey (adapted from the Nuffield Partnership Assessment Tool), an integration measure (based on the Human Service Integration Measure developed by Brown and colleagues in Canada) and Social Network Analysis (using UCINET). The diagram below represents our conceptual framework, with each method looking at the partnership at a different level: overall, between organisations and departments, and between individuals.

Lesson learned #1: A key benefit of combining partnership assessment methods is that it enables you to look at the partnership at different levels. Adding in-depth interviews or other qualitative methods to the mix will allow you to explore further and drill down into underlying mechanisms, perceptions of what works for whom, experiences of difficulties and suggestions for improvement.

Lesson learned #2: Partnerships are abstract, intangible evaluation objects, and evaluations of partnerships often lack data about what is happening on the ground. Adding methods to quantify and substantiate partnership activities and outcomes will make your evaluation more robust and the findings easier to explain to stakeholders.

Lesson learned #3: Combining methods sits within the good old mixed-methods tradition. Various metaphors are used to describe the benefits of integrated analysis in mixed-methods research (see Bazeley, 2010). In this case, the selected methods are combined ‘for completion’, ‘for enhancement’ and as ‘pointers to a more significant whole’.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.