Greetings! I’m Sara Vaca, independent consultant at EvalQuality.com and Creative Advisor of this blog. I started with this post (link) by looking at where and how evaluation can use a dash of creativity, and now I’m going to share my experience using creativity to better understand evaluation.

After my first AEA conference in Washington, D.C. (October 2013), all the words and concepts I had heard that week (mixed methods, rubrics, approaches, participation, values, dashboards, etc.) were still flying around in my head during my daily stroll. I kept wondering: there are so many possible choices (stance, paradigm, approach, methods) in designing an evaluation, and yet they are not clearly visible in evaluation reports…

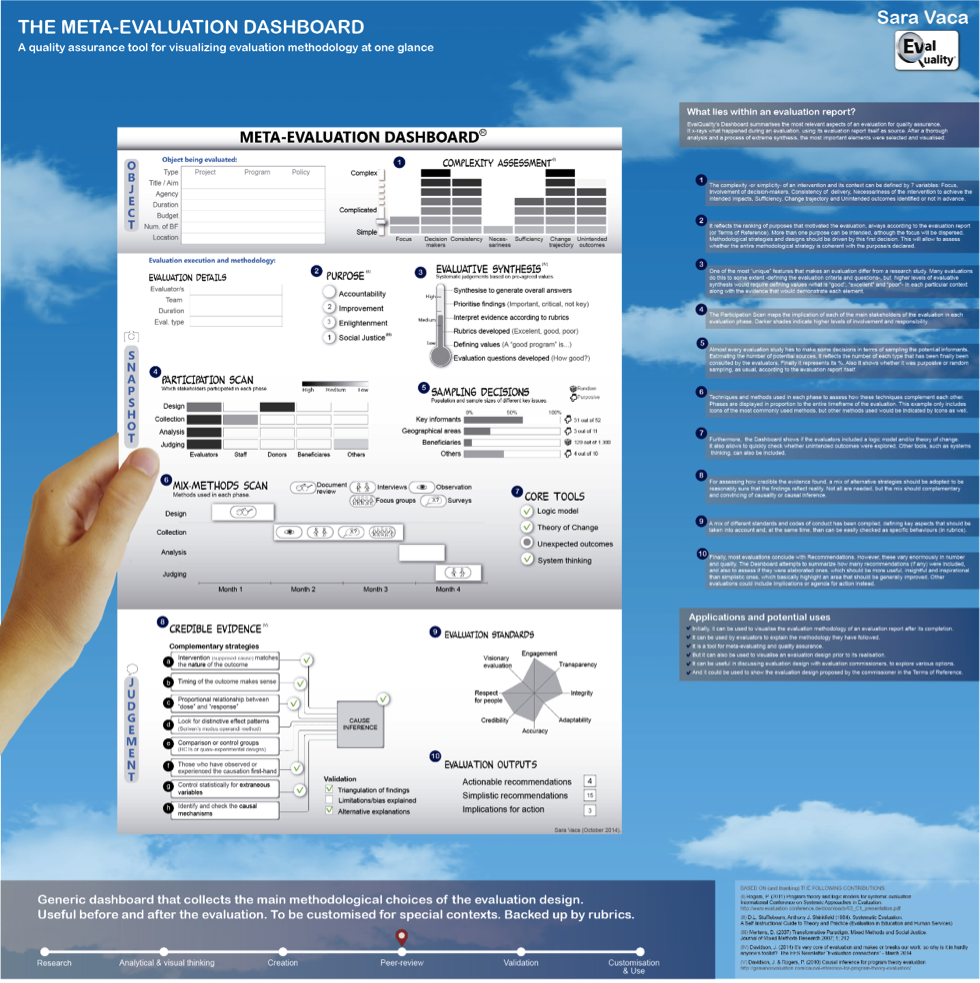

Suddenly, it all clicked and I thought: What if you could see many of an evaluator’s decisions on just one page? I know! I will create a “meta-evaluation” dashboard!

Some months later, after much reading and research and many sketches and drafts, I came up with this dashboard, which shows in a very visual way what I consider 10 major issues of an evaluation:

- Complexity

- Purpose ranking

- Evaluative synthesis thermometer

- Participation scan

- Sampling decisions

- Mixed-methods scan

- Core tools

- Credible evidence

- Evaluation standards, and

- Evaluation outputs.

First, the Dashboard can be used to visualize the methodology described in an evaluation report once the evaluation is complete. Evaluators can also use it to explain the methodology they followed.

It is also a tool for meta-evaluation and quality assurance. In addition, it can be used to visualize an evaluation design before the evaluation is carried out, which makes it useful for discussing design options with evaluation commissioners. It could also show the evaluation design that a commissioner proposes in the Terms of Reference. Finally, it could even be used to teach evaluation.

I presented it as a poster at both the European Evaluation Society conference and Evaluation 2014 (Dublin and Denver), and I would like to thank everyone for the comments and feedback I received, from the people who didn’t understand it at first to those who told me it was inspiring. Special thanks to Michael Scriven, Jennifer Greene, Patricia Rogers, Jane Davidson, Beverly Parsons, Ian Davies and many others who took the time to look at it and comment on it.

For more information: http://www.evalquality.com/the-meta-evaluative-dashboard/

For reactions and comments: Sara.vaca@EvalQuality.com

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.