Hi, everyone. We are Bethany Laursen, independent consultant with Laursen Evaluation & Design, LLC, and Assistant Dean in the Graduate School at Michigan State University; Nicole Motzer, Director of Research Development at Montana State University; and Kelly Anderson, Research Scientist at the National Institute of Standards & Technology.

Interdisciplinarity (ID) is all the rage in the research, technology, and development worlds, along with cousin modalities such as convergence research, transdisciplinarity, and boundary spanning. As an evaluator, have you ever been asked to assess the interdisciplinarity of one of these projects? Have you ever wondered what that would take? We have! And we got curious.

In 2022, we completed a systematic review of 142 studies of interdisciplinary research and education spanning 20 years of literature, which together describe over 1,000 different ways people have assessed ID. We’ve published five open-access Rad Resources to help fellow evaluators navigate the options, adapt an approach to their context, and conduct smart research on evaluation of ID.

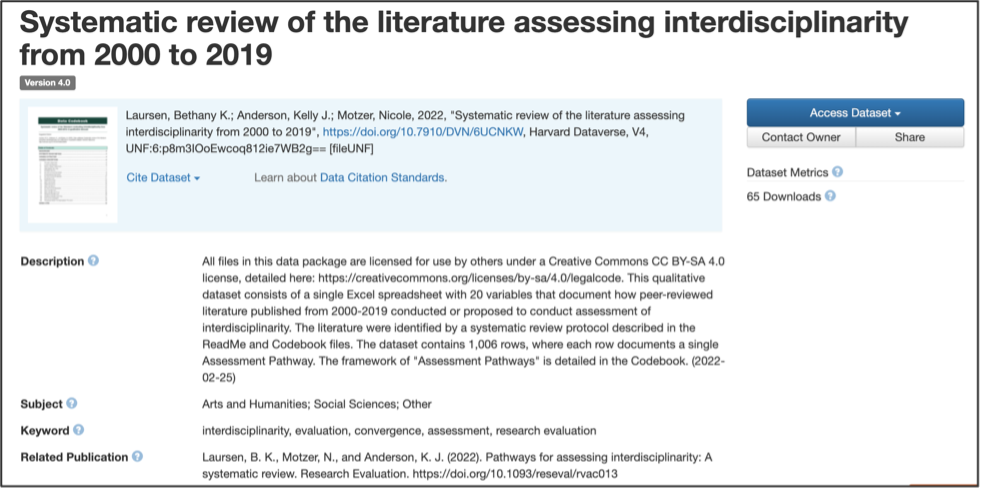

Rad Resource 1: The Dataset

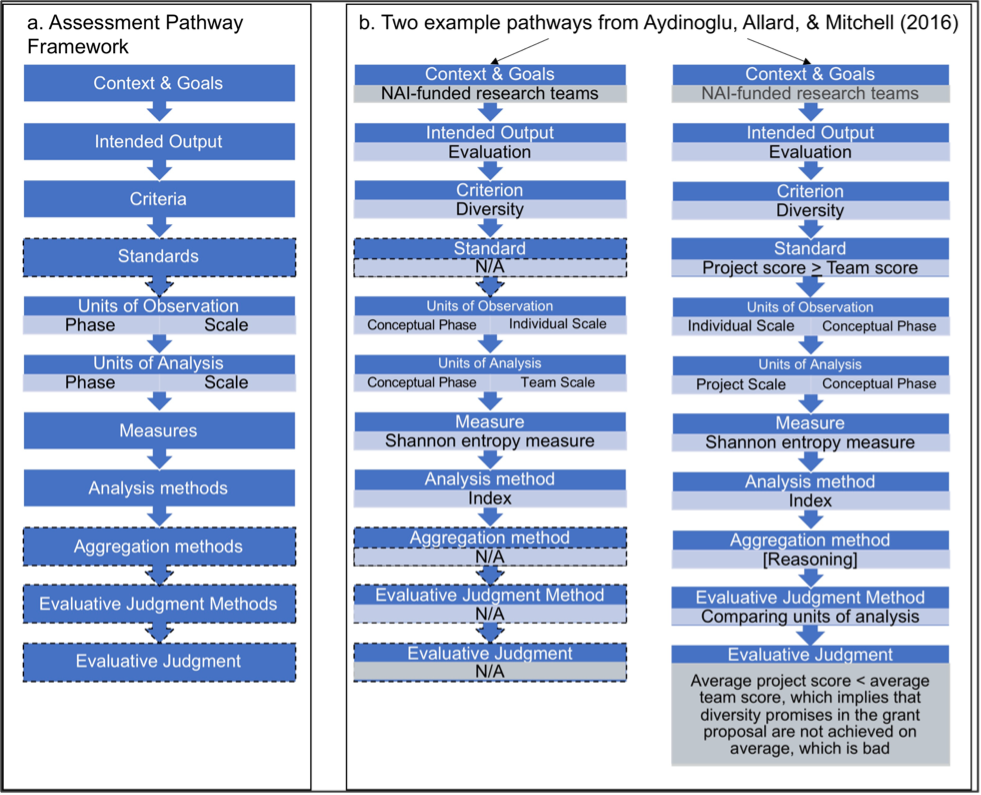

Our dataset gives structure to the ill-structured domain of interdisciplinary evaluation using a new framework we call the assessment pathway. The dataset contains 1,006 different pathways (evaluation options), representing a total of 19,114 data points, in a single, sortable spreadsheet. It also comes with a codebook that, among other things, functions as a thesaurus of criteria for ID.

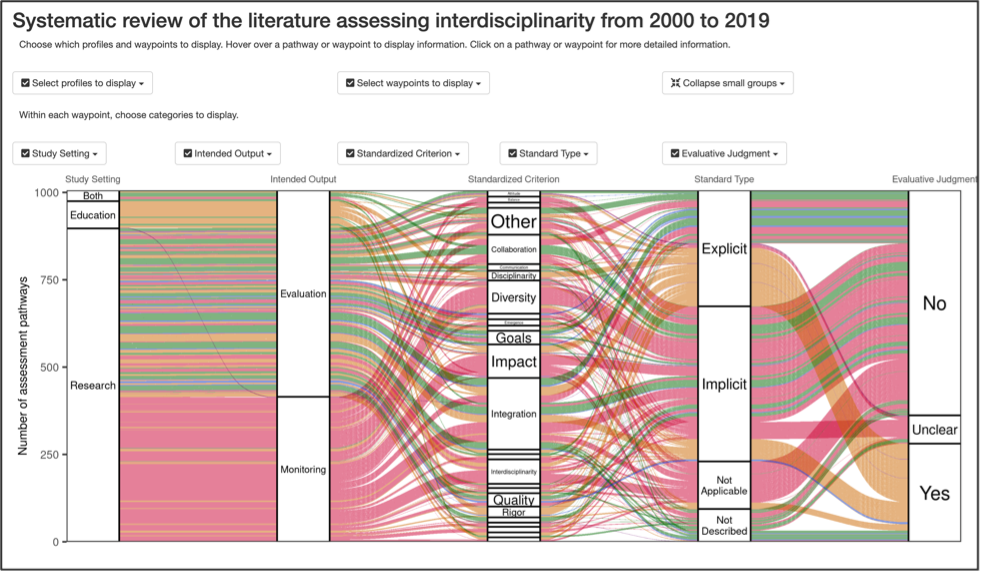

Rad Resource 2: The Sankey Dataviz

This online, interactive dataviz allows you to explore the dataset at your own pace by creating customized Sankey diagrams that depict each evaluation approach as a literal pathway.

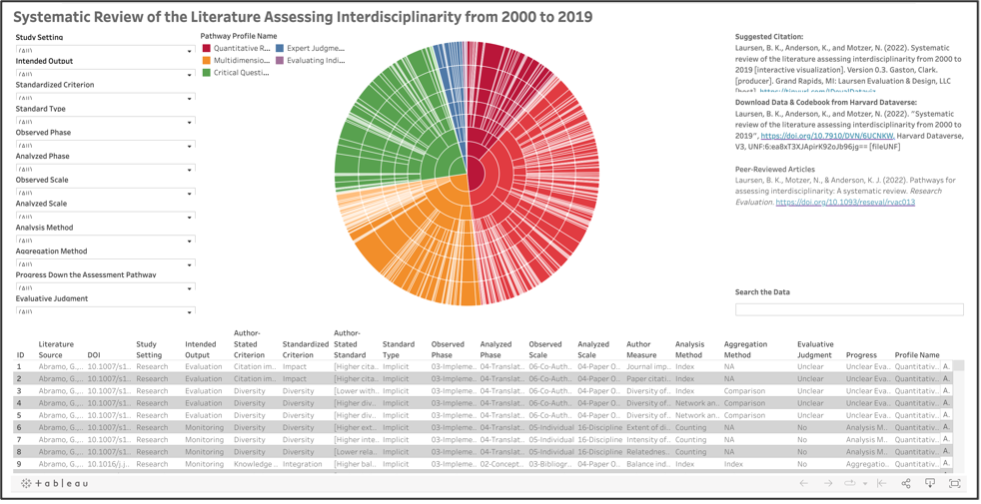

Rad Resource 3: The Sunburst Dataviz

This version of the dataviz uses a Sunburst chart, which condenses more information into a smaller space and offers another way to explore this immense dataset.

Rad Resource 4: The Review Article

Our first article examines patterns in the choices evaluators made at each point in a study’s assessment pathway(s). We show what’s hot and what’s not, what should be hotter, and generally how to stay cool with evaluative thinking and mixed methods in this sticky space.

Rad Resource 5: The Profiles Article

Our second article reports five main ways evaluators have assessed ID over the last two decades. We describe each approach and give tips on how each can be used—and improved—in different assessment situations. This article won the best paper award at the 2022 Science of Team Science international conference.

What other tips or resources can you share about how to evaluate interdisciplinary efforts? Are these five Rad Resources helpful in your work?

We thank our funders and supporters for this project. The work was partly supported by the National Socio-Environmental Synthesis Center (SESYNC) under funding received from the National Science Foundation [DBI-1639145]. The Michigan State University Center for Interdisciplinarity (C4I) funded Laursen as an Engaged Philosophy Intern at SESYNC in 2018 and hosted a writing retreat in 2019.

The American Evaluation Association is hosting Research, Technology and Development (RTD) TIG Week with our colleagues in the Research, Technology and Development Topical Interest Group. The contributions all this week to AEA365 come from our RTD TIG members. Do you have questions, concerns, kudos, or content to extend this AEA365 contribution? Please add them in the comments section for this post on the AEA365 webpage so that we may enrich our community of practice. Would you like to submit an AEA365 Tip? Please send a note of interest to AEA365@eval.org. AEA365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.