Hello, my name is Kamna Mantode. I work as a Senior Monitoring and Evaluation Specialist at Compassion International. Having earned a PhD in Public Administration and spent over 10 years supporting evaluation in the public and nonprofit space, I hold a high regard for cultivating “feedback” and “listening” practices in the development and evaluation workspace (Hirschman’s exit, voice, and loyalty model). A widely held belief in the sector is that feedback and listening are essential to promoting client engagement and achieving outcomes. Yet, as data shows, many NGOs are not integrating feedback insights into their process improvements (https://hbr.org/2019/02/why-customer-feedback-tools-are-vital-for-nonprofits).

Among the public and nonprofit organizations that do include feedback mechanisms, most mechanisms are project based and end with the term of the project. Over the years, this ad hoc system of feedback collection misses important institutional-level information that could be used to improve the organization.

(Re)new focus and value add

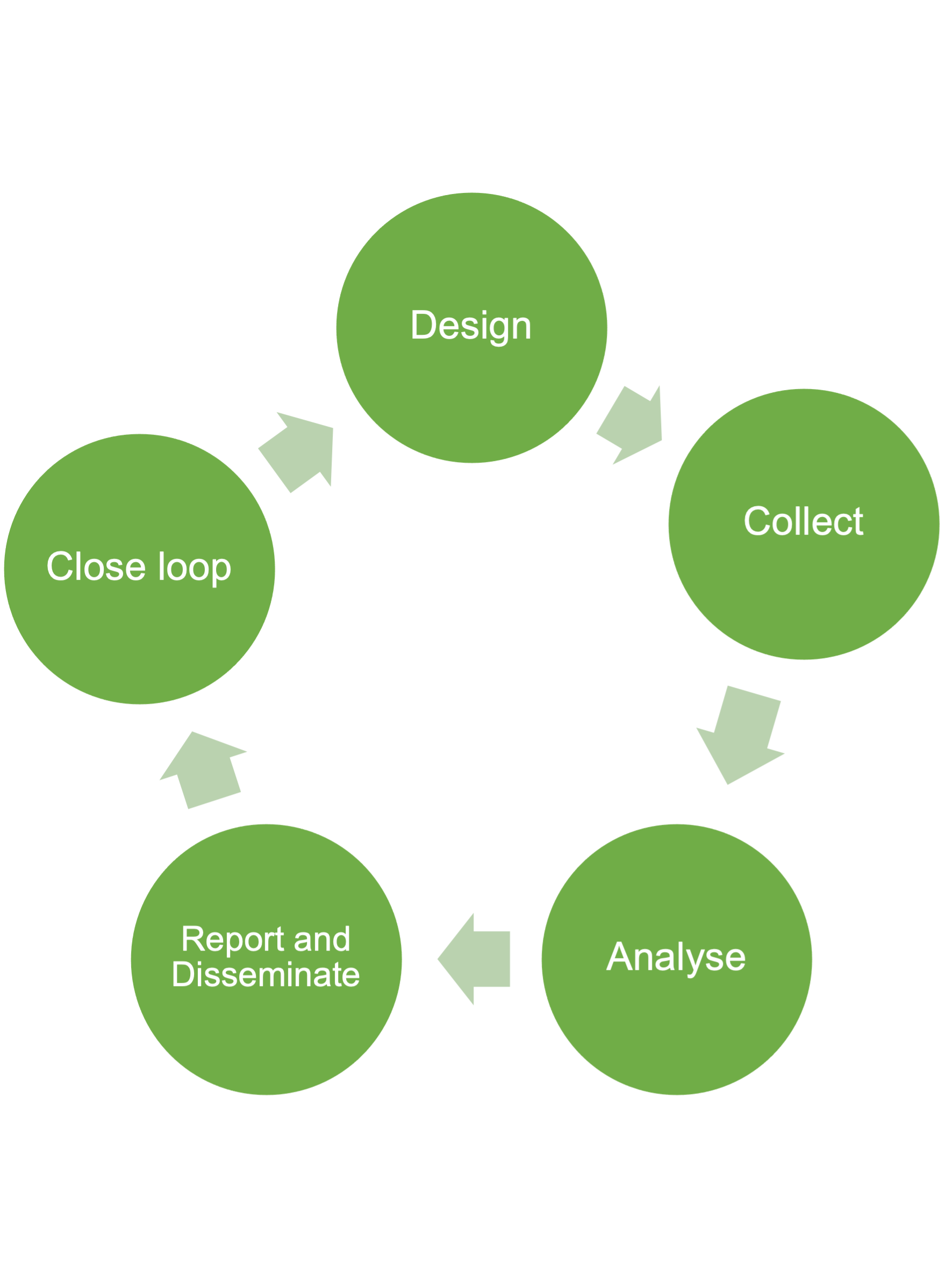

What if we did not think about feedback gathering as an add-on pushed by donors (upward accountability), but considered it a capability that is instituted and developed in the NGO? Feedback loops can be viewed as a core function that supports the organization, especially in organizations set up to address challenging social and economic situations. Here are some ways in which I think feedback loops can be used to create value add that appeals to a broader set of stakeholders and to build the momentum that feedback needs in an organization:

Hot Tips:

- Cast a wider, more frequent, and occasionally deeper net. Cast a wider net with questions that not only cover the service delivery experience but also tap into the factors that determine that experience. Design an array of questions that build an understanding of the cultural and institutional expectations and associated experiences that could affect the motivation and engagement of the client. Try to get away from the industry-standard one- or two-question approach, which by now is very familiar to clients. In short, don’t be predictable with your feedback surveys. Also combine approaches to data collection: frequent pulse surveys punctuated by deeper dives. I have found an article from Keystone Accountability about the Constituent Voice approach, with its pulse surveys and insight studies, to be a great resource for thinking differently about the design of feedback loops. Expanding the focus of feedback instruments to capture not just service feedback but also institutional-level variables will help generate actionable information for improvements.

- Democratization of feedback information. Most often, feedback data stays within management and within the process improvement space. It has helped me to wrestle with the question of who has the right to this information. Certainly those who commissioned the feedback data collection do, but so do those who provided the feedback. As part of my work, I have enjoyed building tools, platforms, and methods that give all stakeholders a chance to view and interact with feedback data. Viewing feedback data has been empowering for the respondents and has sparked discussions of how organizations and individuals interact on a day-to-day basis. It has promoted the view that attitudes and cultures can change, not only for the project but for the organization as well. All of the above are important considerations for engendering a culture of feedback gathering and demonstrating its value add.

Promoting the actionability of feedback data across the organization rests on demonstrating its value add. I think as practitioners we can be intentional about designing and democratizing feedback to boost its potential.

The American Evaluation Association is hosting North Carolina (NC) Evaluators Affiliate Week. The contributions all this week to aea365 come from NC Affiliate members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators. The views and opinions expressed on the AEA365 blog are solely those of the original authors and other contributors. These views and opinions do not necessarily represent those of the American Evaluation Association, and/or any/all contributors to this site.

Hi Sheila,

Thank you for sharing a very insightful and informative article by Kamna Mantode. My name is Kristin Lum-Tong and I am a Master’s of Education student at Queen’s University. I am also a full-time Kindergarten teacher.

I think it is genuinely interesting that you have found that organizations often do not build an adequate understanding of “cultural and institutional expectations… and experiences”. I have always thought that this is vital to truly understanding a population; in my case, such a population might be a classroom or a school.

You state that one- or two-question surveys often do not serve a valuable purpose. I wonder when these would still have value. I understand that longer surveys can at times be difficult to administer, as participants can be apprehensive about them or outright avoid spending time on them. I think a longer survey that establishes a better feedback loop certainly makes sense; what I am interested in learning more about is how to successfully implement these surveys in an ongoing manner. You then directed readers to the article by Keystone Accountability about pulse surveys and implementing the Constituent Voice approach. I think this approach is really interesting; it seems to help ensure that the organization and program both approach their relationship with participants in a way that is much more reactive, immediate, and equitable. This approach seems to be focused on the day-to-day interactions with participants, whereby the program and organization shape themselves through their interactions with participants. This seems to me to be highly applicable to the classroom setting, where the facilitators of programs (teachers) are at the ground level every day, interacting in a sort of feedback loop.

Your second point about the democratization of feedback struck a chord with me. In the classroom, feedback is provided in many ways. Students receive immediate feedback verbally and in written form on their classwork. Parents have daily interactions with teachers when they pick up their children, receive letters and marked work that their child brings home, and get periodic report cards. Furthermore, parent-teacher conferences are another form of feedback where the parents may provide their input. However, I think access to information could be improved further.

One medium that has been promoted relatively recently within the Vancouver School Board is an online platform called “myBlueprint”. This is an online student portfolio that can track students’ progress as they move through each grade (Vancouver School Board, 2019). I think taking this idea and building on it to fit your suggestions would be highly valuable in a classroom. For example, equal access to information, especially for parents, could easily be improved through this method.

In the course I am taking, we have been learning about the importance of collaboration and avoiding the misuse of evaluation. Patton (2013) expresses the need for collaboration and consistent use of data in order to prevent misuse.

I am curious if you might have concrete examples of longer-form surveys that demonstrate improved democratization of feedback?

I am looking forward to hearing about your insights!

Kristin

References

Patton, M. Q. (2013, June 7). Utilization-Focused Evaluation [Video file]. MyMandE. Retrieved from https://www.youtube.com/watch?v=jQP1FGhxloY

Vancouver School Board. (2019, October 31). myBlueprint: All About Me Resources. Retrieved from https://www.vsb.bc.ca/site/csl/myblueprint/Pages/All-About-Me-Resources.aspx