Hi, we are Gita Upreti, Assistant Professor at the University of Texas at El Paso, and Carl Liaupsin, Associate Professor at the University of Arizona in Tucson. Much of our work involves implementing broad academic and behavioral changes in educational systems. As such, we’ve had a front-row seat to observe the explosion of educational data confronting stakeholders. Parents, school staff, school and district level administrators, state departments of education, and federal agencies are all expected to create and consume data. This is a unique paradigm in evaluation.

In our work with schools, we noticed that similar measures of effectiveness could be used by various stakeholders for varying purposes. A construct that we call stakeholder utility has helped us work with clients to develop efficient measures that will be useful across a range of stakeholders. For example, students, teachers, administrators, school trainers and researchers may all have a stake in, and use, student achievement data but not all stakeholders will be impacted in the same way by those data. So, not only could the same data be used differently by each stakeholder, but based on the individual’s role and purpose for using the data, the level of utility for the data could also change.

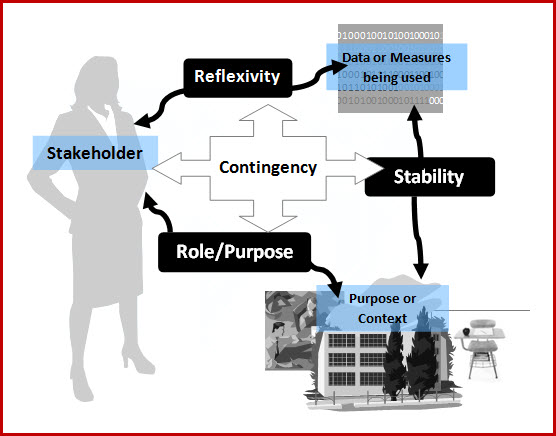

It may be possible to affect stakeholder utility and perhaps to maximize it for each stakeholder group by mapping, across four dimensions, how the stakeholder is connected to the data in question, the purpose for measurement, and the professional or personal rewards which might exist as a result of the use of those data. Here are some questions to ask in considering these dimensions:

| Dimension | Questions to ask |
| --- | --- |
| Role/Purpose | Who is the stakeholder and what will they be doing with the data? |
| Reflexivity | How much direct influence does the stakeholder exert over the data? Are they a generator as well as a consumer? Might this affect any human error factors? |
| Stability | How impervious is the measure to error? How stable is it over time and in varying contexts? How strongly does it represent what it is supposed to? |
| Contingency | Are there any behavioral/professional rewards in place for using those data? Are the data easy to communicate and understand? What are the sources of pleasure or pain associated with the use of those data for the stakeholder role/purpose? |

We are very interested in hearing from folks from other fields and disciplines for whom this model might be useful, and devising ways to measure and monitor the influence of these factors on how data are generated and used by a variety of stakeholders.

Upreti, G., Liaupsin, C., & Koonce, D. (2010). Stakeholder utility: Perspectives on School-wide Data for Measurement, Feedback, and Evaluation. Education and Treatment of Children, Volume 33, Number 4, November 2010, pp. 497-51

A tip of the nib to Holly Lewandowski: http://www.evaluationforchangeinc.com/

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.