Greetings! I’m Natalie Trisilla, and I lead the International Republican Institute’s Office of Monitoring, Evaluation, and Learning. We lead dozens of internal evaluations annually. These occur throughout the project lifecycle – from needs assessments and baseline evaluations to mid-point and final evaluations. We always strive to involve our program staff colleagues, who actually implement the projects we evaluate, throughout the evaluation process. For example, their firsthand expertise is key to developing a feasible, culturally sensitive methodology. Further, their role as project designers and implementers makes them ideally suited to use the results of the evaluation. Here are two additional ways that we involve program staff in data analysis and in developing recommendations and conclusions. These practices might be useful for you, too!

Hot Tips:

- Daily After-Action Review: If our program staff colleagues are also in the field for data collection, we have a quick, daily after-action review session. These are informal, often happening during dinner or in the taxi back to the hotel, but have proven useful to elicit rapid, yet systematic, reflection. We use three basic prompts:

- What was the most surprising or unexpected aspect of today?

- What was the most common topic or issue you think you heard/observed today?

- From your perspective, did any other notable things happen today?

This daily debrief gives us the opportunity to identify gaps or challenges with data collection tools or protocols early and adapt accordingly. It also gives program staff an important chance to practice critical thinking and analysis skills, which builds their overall evaluative thinking capacity.

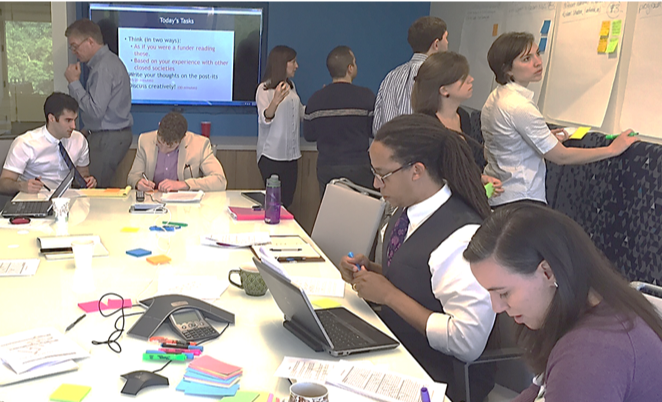

- Data Validation & Analysis Session: After initial data analysis and before we develop recommendations, we convene a 60-minute data validation session for interested program staff, including staff with relevant thematic experience or regional expertise who are not part of the project being evaluated. During this interactive session, we write key evaluation findings on flip chart paper and post them around a conference room. Next, staff walk around the room, read the findings, and note their questions, reflections, and reactions on the corresponding flip chart paper. We spend the remainder of the time exploring these reactions and reflections as a group. This analysis process is valuable to us as evaluators because our program staff colleagues’ insights place our data and resulting findings in the broader sociopolitical context, making them more specific and meaningful. The sessions also provide rich details and examples from other projects that help us craft more detailed, actionable recommendations. Program staff appreciate these sessions too, since they are exposed to learning across projects and feel more invested in evaluations, especially the recommendations.

The American Evaluation Association is celebrating Democracy & Governance TIG Week with our colleagues in the Democracy & Governance Topical Interest Group. The contributions all this week to aea365 come from our DG TIG members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

Hi Natalie,

My name is Janet, and I am currently taking a course on ‘Program Inquiry and Evaluation’ at Queen’s University as part of a master’s program. We have been learning about program evaluation approaches, methods, design, data collection and analysis, and promoting evaluation use.

I connected with your article about including stakeholders in the evaluation process. I understand how valuable it can be for evaluators to give stakeholders opportunities to be directly involved in the evaluation process: evaluators gain knowledge and insight from those who are directly involved in the program being evaluated. I can also see how stakeholder participation makes the evaluation findings more significant and meaningful to stakeholders and enhances their investment, which will likely lead to increased evaluation use.

The two suggestions you made about how to involve stakeholders in the evaluation process seem feasible and valuable. I particularly liked the second suggestion, where stakeholders have an opportunity to examine evaluation findings before conclusions and recommendations are drafted by writing down their questions, reflections, and reactions to the findings on chart paper. This approach would allow many voices to be heard in a short amount of time. In education, and in elementary schools in particular, there is often little time to question, reflect on, and react to programs, evaluations, initiatives, etc. Using this method of data validation would allow more thoughts and opinions to be shared and, in turn, examined by evaluators later if necessary.

Thanks for posting your suggestions about involving staff in data analysis. They have been valuable to me in the context of the program I have been designing an evaluation for as a project in my ‘Program Inquiry and Evaluation’ course.

Natalie,

First and foremost, thank you for this post as it leaves me with new insights into participatory evaluation and some food for thought. I like that two of your three basic prompts are open-ended, allowing for greater self-reflection and conversation, and I fully agree that without the opportunity to reflect, it is very difficult to use “evaluation as an intentional intervention in support of program outcomes,” (Kirkhart).

I am wondering if you have used this approach in educational institutions, where key stakeholders rarely have a strong skill set in data analysis and also lack the time necessary to implement your approach. While I love your approach of meeting daily for a quick debrief and then coming together for a one-hour data validation session, I am concerned about what Shulha describes as “formidable obstacles to genuine stakeholder participation” (Shulha). I think your idea of involving program implementers in the process has great merit; however, I wonder about its practicality for educators, who often feel their time is already heavily taxed. Any advice you could provide would be most appreciated.

Thank you again for the post, and I look forward to learning more from you.

Richard Prosser

Hi Natalie,

My name is Antara, and I’m currently a student in the Master of Education program at Queen’s University in Canada. We are nearing the end of our course, “Program Inquiry and Evaluation”, and have learned about the many interesting aspects and layers of complexity involved in successfully conducting a program evaluation. One of our final tasks is to read blogs of interest on this portal to add to our knowledge base and to start a conversation with an author of our choice to further exchange ideas.

What I loved most about your article, Natalie, was the ‘Hot Tips’ you provided. First, the “Daily After-Action Review” is a concept I had not encountered before, and I think it is brilliant. Its informal nature, along with your prompting questions, makes a lot of sense. This can be a critical component of the overall evaluation process because it can surface key nuggets of information from program staff very early on. This means that if you, as the evaluator, need to adjust or adapt the evaluative approach, you can do so based on immediate, relevant feedback. Second, the “Data Validation & Analysis Session” you proposed is another enlightening element of the overall process, in which you convene for an hour to discuss implications before establishing any recommendations. What strikes me about this tip is that you include program staff with relevant thematic experience or regional expertise who are not part of the project being evaluated, to test against your own understanding. That’s quite intelligent!

Carol H. Weiss wrote an article titled “Have We Learned Anything New About the Use of Evaluation?”, published in the American Journal of Evaluation. Here is an excerpt in which she raises valid concerns and makes a very good argument:

“People have been writing in the evaluation literature about the necessity for evaluators to take on a broader assignment and become consultants and advisors to organizations and programs. But what are our qualifications for assuming such roles? Our strengths are usually our technical skills, our ability to listen to people’s concerns and design a study that addresses them, and the ability to communicate. Are we the best source of new ideas? We are smart, and we could read up on the program area, look at evaluations and meta-analyses, and reflect on our own experience. We can go beyond the data from a specific study and extrapolate and generalize. But does that qualify as experts?”

Natalie, I believe you have done an excellent job with your tips, especially the latter, of addressing Weiss’ argument above by bringing in experts for their experience and knowledge at the appropriate points in the evaluation process. Taking into account stakeholder feedback, even from individuals not directly part of the project, before providing your program recommendations makes your work as an evaluator that much more credible and noteworthy. Thank you for your insights; I look forward to following your future blog posts and your response with great interest.

Antara

Dear Natalie,

Thanks for sharing your firsthand evaluation experience. Your practice of always involving the program staff who actually implement the projects you evaluate throughout the evaluation process is a great one, because stakeholders’ “engagement, self determination, and ownership appears as essential elements in evaluation contexts” (Shulha & Cousins, 1997). Further, staff involvement in the evaluation process “promotes capacity building, organization learning and stakeholder empowerment” (Shulha & Cousins, 1997).

I find your hot tip of a daily after-action review particularly relevant and beneficial. The rapid, informal daily after-action review is a great way for staff to consciously reflect on what is and is not working while the experience is still fresh. It is an effective, systematic support for data collection. I like the three questions you identified and find them practical and helpful in driving this after-action reflection routine. I believe establishing a habit of daily reflection is beneficial even on a personal level. Conscious reflection helps us stay focused and productive, but also balanced. It is important to give ourselves a chance each day to reflect on what we have just done, taking some time to just think, slow down, and be mindful.

1. What was the most surprising or unexpected aspect of today?

2. What was the most common topic or issue you think you heard/observed today?

3. From your perspective, did any other notable things happen today?

Thanks again! I look forward to more of your valuable perspectives on program evaluation!

Niya