Hi! This is Andrea Crews-Brown, Tom McKlin, and Brandi Villa with SageFox Consulting Group, a privately owned evaluation firm with offices in Amherst, MA, and Atlanta, GA, and Shelly Engelman with the School District of Philadelphia. Today we'd like to share the results of a recent survey analysis.

Lessons Learned: Retrospective vs. Traditional Surveying

Evaluators typically administer pre/post surveys to assess a program's impact on its participants. However, pre/post surveys are often plagued by several challenges:

- Before the program, participants have little knowledge of its content and thus leave many "pre" items blank.

- Participants complete the "pre" survey but do not submit a "post" survey, so their "pre" responses have no match and cannot be used for comparison.

- Participants' internal frames of reference change between the pre and post administrations of the survey because of the intervention itself. This is often called "response-shift bias." Howard and colleagues (1979) consistently found that the intervention directly changes the standard participants use to rate themselves, so self-reports from the pre-intervention and post-intervention administrations of the instrument are not on the same metric.

Retrospective surveys, administered once at the end of the program, ask participants to compare their attitudes before the program with their attitudes at the end. The retrospective survey addresses most of the challenges that plague traditional pre/post surveys:

- Since the survey occurs after the course, participants are more likely to understand the survey items and, therefore, provide more accurate and consistent responses.

- Participants can reflect on their growth over time, giving them a more accurate view of their progression.

- Participants take the survey in one sitting, which means that their "before" and "after" responses are more likely to be paired.

Lesson Learned: Response Differences

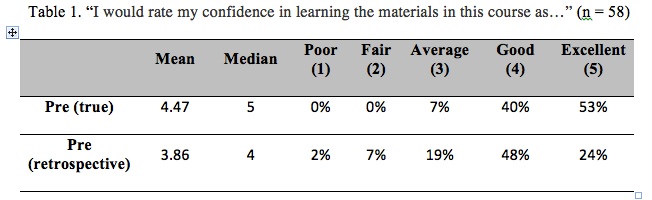

To analyze response-shift bias, we compared participants' "pre" responses on traditional pre/post items measuring confidence with their "pre" responses on identical items administered retrospectively on the post survey. When asked about their confidence at the beginning of the course, students reported a mean of 4.47; on the retrospective survey, they reported a mean of 3.86. We used a Wilcoxon signed-rank test to evaluate the difference between traditional pre and retrospective pre scores and found a statistically significant difference (p < .01): students expressed significantly less initial confidence in retrospect. This suggests that the course may have encouraged participants to recalibrate their perceptions of their own confidence.
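For readers who want to run the same kind of comparison, here is a minimal sketch of a two-sided Wilcoxon signed-rank test on paired ratings, written with only the Python standard library. It assumes the normal approximation (with a tie correction and zero differences dropped), which is rough for small samples. The function name `wilcoxon_signed_rank` and the ratings below are hypothetical illustrations, not the data or code from our study. For real analyses, a vetted implementation such as SciPy's `scipy.stats.wilcoxon` is a safer choice.

```python
import math
from statistics import NormalDist


def wilcoxon_signed_rank(traditional_pre, retrospective_pre):
    """Two-sided Wilcoxon signed-rank test using the normal approximation.

    Differences are retrospective minus traditional. Zero differences are
    dropped, tied absolute differences receive average ranks, and the
    variance includes the standard tie correction.
    """
    if len(traditional_pre) != len(retrospective_pre):
        raise ValueError("responses must be paired (equal length)")
    diffs = [r - t for t, r in zip(traditional_pre, retrospective_pre) if r != t]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank absolute differences (1-based), averaging ranks within ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0  # accumulates sum of (t^3 - t) over tie groups
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    # Sums of ranks for positive and negative differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)

    # Normal approximation to the null distribution of W+.
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return w_plus, w_minus, z, p


# Hypothetical confidence ratings for 12 students (illustrative only).
traditional_pre = [5, 4, 5, 4, 5, 3, 5, 4, 4, 5, 4, 5]
retrospective_pre = [4, 3, 4, 4, 3, 3, 4, 3, 4, 4, 3, 4]

w_plus, w_minus, z, p = wilcoxon_signed_rank(traditional_pre, retrospective_pre)
print(f"W+ = {w_plus}, W- = {w_minus}, z = {z:.2f}, p = {p:.4f}")
```

In this made-up example every nonzero difference is negative (lower retrospective "pre" ratings), so all of the rank sum falls in W-, mirroring the direction of the shift described above. A signed-rank test fits here because the two ratings come from the same students and ordinal survey scales do not justify assuming normally distributed differences.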

Rad Resource: Howard has written several great articles on response-shift bias!

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

The idea of retrospective surveys is appealing and seems worth more attention. One concern is that we cannot always be confident that the response shift in the retrospective condition is toward a more accurate estimate. There is a reasonable case that retrospectively reporting change after the intervention (perhaps by lowering the "pre" rating) could be a halo effect, expressing satisfaction with some other aspect of the intervention (such as entertainment or social interaction). Participants are likely to recognize when a pre-post item targets a change the intervention was meant to produce.