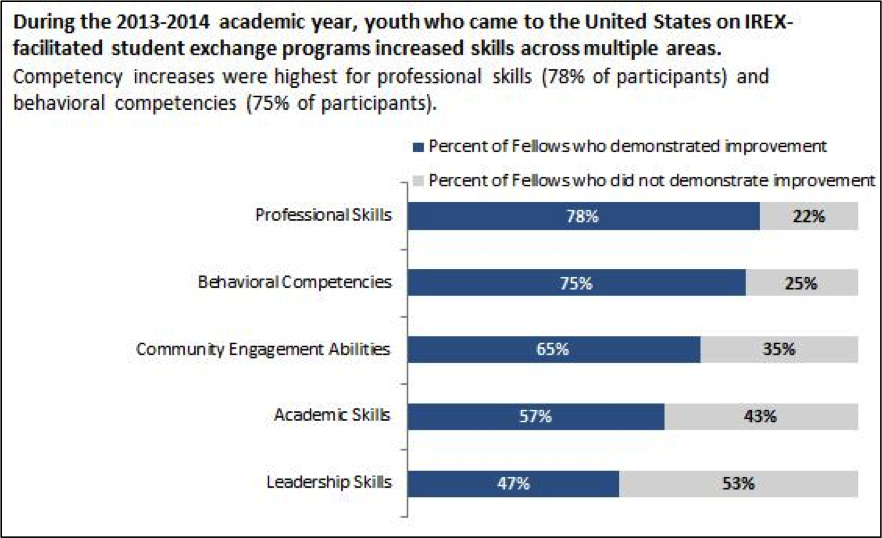

Hi! My name is Celeste Brubaker and I am a Monitoring and Evaluation Coordinator at IREX. IREX is a U.S.-based nonprofit organization working to improve lives through international education, professional training, and technical assistance. In our education programs division, we have a portfolio of seven student programs (in which international young leaders complete intensive U.S.-based learning experiences), each similar but also unique. To understand the outcomes of the programs as a whole, we created one standardized monitoring and evaluation (M&E) framework. From start to finish, the process took about half a year. M&E staff led the design and solicited feedback from program managers at each stage of the process. At this point, the first round of data has been collected. Some of our results are visualized in the graph at the bottom of this post. Here are some hot tips and lessons learned that we picked up along the way.

Hot Tip: Clearly define the purpose of standardization. At IREX, our aim was to create a framework for gathering data that would allow us to report on our portfolio of student programs as a whole and also to streamline data collection and information management. We wanted to achieve these goals while still accounting for the unique aspects of each program. Understanding these goals and parameters guided our decision to create a common framework with room for a small number of customized components.

Hot Tip: Start by identifying similarities and differences in expected results. To do this, we literally cut apart each of our existing results frameworks. We then grouped similar results, stratified by type of result: output, outcome, objective, or goal. The product of this activity helped us visualize overlaps across our multiple evaluation systems and gave us a base from which to draft an initial standardized results framework. Check out the activity in the picture to the right.
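If your results frameworks already live in a spreadsheet or database, the same cut-and-group exercise can be roughed out in a few lines of code. The sketch below is only an illustration, not the process we used at IREX: the program names and result statements are hypothetical, and it assumes someone has already coded similar results to a common label, which is the part that takes human judgment.

```python
from collections import defaultdict

# Hypothetical results frameworks: program -> list of (result type, result label).
# Program names and result labels are invented for illustration only.
frameworks = {
    "Program A": [("output", "participants complete coursework"),
                  ("outcome", "increased leadership skills"),
                  ("goal", "stronger communities")],
    "Program B": [("output", "participants complete coursework"),
                  ("outcome", "increased leadership skills"),
                  ("outcome", "improved english proficiency")],
    "Program C": [("outcome", "increased leadership skills"),
                  ("goal", "stronger communities")],
}

def group_results(frameworks):
    """Map each (result type, label) pair to the sorted list of programs that include it."""
    groups = defaultdict(set)
    for program, results in frameworks.items():
        for result_type, label in results:
            groups[(result_type, label)].add(program)
    return {key: sorted(programs) for key, programs in groups.items()}

def split_shared_and_unique(groups):
    """Separate results found in more than one program from results found in only one."""
    shared = {key: progs for key, progs in groups.items() if len(progs) > 1}
    unique = {key: progs for key, progs in groups.items() if len(progs) == 1}
    return shared, unique

groups = group_results(frameworks)
shared, unique = split_shared_and_unique(groups)

# Shared results are candidates for the common framework;
# unique results are candidates for customized components.
for (result_type, label), programs in sorted(shared.items()):
    print(f"[shared] {result_type}: {label} ({', '.join(programs)})")
for (result_type, label), programs in sorted(unique.items()):
    print(f"[unique] {result_type}: {label} ({', '.join(programs)})")
```

Results that appear in several programs point toward the standardized core, while results that appear in only one program point toward the customized components described in the first tip.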

Lesson Learned: It’s an iterative process. There will be lots of rewrites, and that’s a good thing! During the process, we learned that soliciting feedback in multiple settings worked best. Meeting with the program managers as a group was useful because dynamic discussion often led to ideas and points that would not necessarily have come out of individual input. At the same time, one-on-one meetings with managers provided a useful space for individual reflection.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.