Hello! We are Monica Hargraves and Miranda Fang, from the Cornell Office for Research on Evaluation. We presented together at Eval2012 and would like to share some practical tips on literature searches in the context of evaluation.

2016 Update: Monica Hargraves is now Associate Director for Evaluation Partnerships at the Cornell Office for Research on Evaluation; Miranda Fang is now Manager, Development Strategy and Operations at Teach For America – Los Angeles.

Program managers often face an expectation worthy of Hercules: to provide strong, research-quality evidence that their program is effective in producing valuable outcomes. This is daunting, particularly if the valued outcomes only emerge over a long time horizon, the program is new or small, or the appropriate evaluation is far beyond the capacity of the program. The question is: what can bridge the gap between what's feasible for the program and what's needed in terms of evidence?

Hot Tip: Strategic literature searches can help. And visual program logic models provide an ideal framework for organizing the search process.

Quoting our colleagues Jennifer Urban and William Trochim in their 2009 American Journal of Evaluation (AJE) paper on the Golden Spike:

“The golden spike is literally a place that can be drawn on the visual causal map … where the evaluation results and the research evidence meet.”

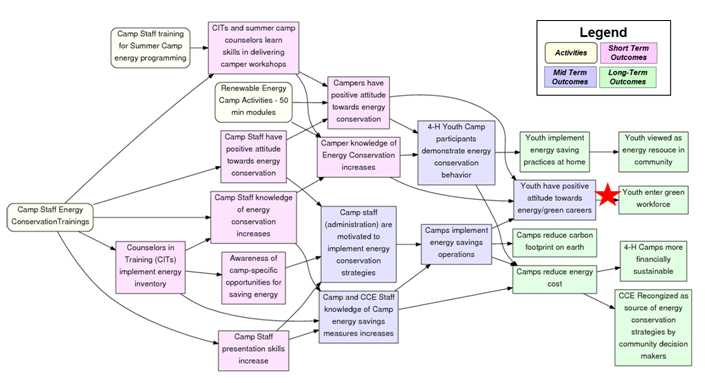

We use pathway models, which build on a columnar logic model. A pathway model tells the logical story of the program by specifying the connections between each activity and the short-term outcome(s) it contributes to, then between those outcomes and the subsequent short- or mid-term outcome(s) they lead to, and so on. What emerges is a visual program theory with links all the way through to the program's anticipated long-term outcomes.

The visual model organizes the key elements of the program theory and presents them succinctly. It helps an evaluator zero in on the particular outcomes and causal links for which credible evidence must be built from sources beyond the scope of the current evaluation.

Here's an example from a Cornell Cooperative Extension program on energy conservation in a youth summer camp. Suppose the program needs to report to a key funder whose interest is youth careers in the environmental sector. If the program evaluation demonstrates that the program is successful in building a positive attitude towards green energy careers, then a literature search can focus on evidence for the link (where the red star is) between that mid-term outcome and the long-term outcome of an increase in youth entering the green workforce.
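For readers who keep their pathway models in software, the idea of locating the golden spike can be sketched in a few lines of Python. This is a minimal, hypothetical sketch, not a tool from our office: it assumes the model is stored as a directed acyclic graph mapping each node to the outcomes it leads to. The helper names `paths_to` and `links_needing_literature`, and the activity and short-term outcome labels, are invented for illustration; only the mid-term and long-term outcomes paraphrase the camp example. Given the outcomes an evaluation has demonstrated, it lists the links on the way to a long-term outcome that the evaluation's own data do not cover, i.e. the links a literature search would need to address.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical node labels. MID and LONG paraphrase the camp example;
# the activities and short-term outcomes are invented placeholders.
MID = "Mid-term: positive attitude towards green energy careers"
LONG = "Long-term: more youth entering the green workforce"

# A pathway model as a directed acyclic graph: each node maps to the
# outcomes it is expected to contribute to.
PATHWAY: Dict[str, List[str]] = {
    "Activity: camp energy audit": ["Short-term: knowledge of energy use"],
    "Activity: talks by green-sector workers": ["Short-term: awareness of green careers"],
    "Short-term: knowledge of energy use": [MID],
    "Short-term: awareness of green careers": [MID],
    MID: [LONG],
}

Edge = Tuple[str, str]


def paths_to(model: Dict[str, List[str]], start: str, target: str) -> List[List[str]]:
    """Enumerate every path from start to target (assumes no cycles)."""
    if start == target:
        return [[target]]
    paths: List[List[str]] = []
    for nxt in model.get(start, []):
        for tail in paths_to(model, nxt, target):
            paths.append([start] + tail)
    return paths


def links_needing_literature(
    model: Dict[str, List[str]], evaluated: Set[str], target: str
) -> Set[Edge]:
    """Links on any path from an evaluated outcome to the target that the
    evaluation does not itself cover: candidates for a literature search."""
    gaps: Set[Edge] = set()
    for outcome in evaluated:
        for path in paths_to(model, outcome, target):
            for src, dst in zip(path, path[1:]):
                # Simplifying assumption: a link whose two ends were both
                # measured is already supported by the evaluation's data.
                if not (src in evaluated and dst in evaluated):
                    gaps.add((src, dst))
    return gaps


if __name__ == "__main__":
    for src, dst in sorted(links_needing_literature(PATHWAY, {MID}, LONG)):
        print(f"Search the literature for: {src} -> {dst}")
```

With only the mid-term attitude outcome marked as evaluated, the sketch returns the single mid-term to long-term link, the one marked by the red star. In a larger model with several branches it would return every uncovered link, which is where prioritizing the search becomes important.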

The American Evaluation Association is celebrating Best of aea365, an occasional series. The contributions for Best of aea365 are reposts of great blog articles from our earlier years. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org . aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.