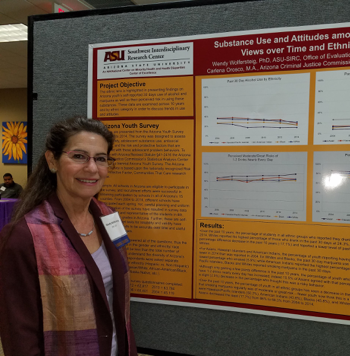

I’m Wendy Wolfersteig, Director of the Office of Evaluation and Partner Contracts for the Southwest Interdisciplinary Research Center (SIRC), Research Associate Professor at Arizona State University, and President of the Arizona Evaluation Network. My work focuses on evaluating effective prevention programs, so I often discuss evidence-based practice (EBP) and how to use it in community and government settings. I have explained EBP so many times and in so many ways, and lately it has become a hot topic.

It has taken years to bring the term “evidence-based” into the vocabulary of Arizona state and local government officials. Over the past 8-10 years, the push for accountability from federal, private business, and foundation sources has slowly but surely led officials to use words like evidence-based, or at least evidence-informed, when selecting programs to fund.

Yet, the fate of evidence-based decision-making was not clear as the year came to an end. When is evidence – evidence? What is the evidence that it is a fact? How are science and evidence to be considered in practice and policy making?

Even the use of EBP terminology was being questioned, with reports that government staff had been encouraged not to use certain words, including “science-based.” Further, the National Registry of Evidence-Based Programs & Practices (NREPP), a database of prevention and treatment programs with evidence-based ratings, had its funding ended prematurely.

I gain hope from my graduate students when we discuss evidence-based practice – in practice. We talk about the research: when data are facts, when programs account for participants’ cultural and other differences, and how to make these judgments. These conversations focus us on what research and evidence can and cannot determine, and on how each of us makes personal and professional decisions every day. We are left to ponder what the outcome will be when the NREPP website states that “H.H.S. will continue to use the best scientific evidence available to improve the health of all Americans.”

Hot Tips:

- Relate and avoid jargon. Put the reasoning for evidence-based evaluation and practice into the terms your client or potential client uses.

- Talk about desired outcomes and how the assessments, practices, programs, strategies, and activities selected would affect what happens.

- Ask questions before giving answers: Why do they want a specific strategy? How do they know whether it would work? Are they willing to keep doing “what we’ve always done” without some evaluation or data showing that they are spending money and time in the best interest of their clients? Do they need data to show success? Who decides?

Rad Resources:

I learned a lot about professional efforts to enhance evidence-based decision-making by participating in the EvalAction 2017 visit to my local Congressperson’s office during the AEA Conference. Here are a few resources that came to my attention.

- Report of the Commission on Evidence-Based Policymaking, The Promise of Evidence-Based Policymaking, 2017.

- Evaluation Roadmap for a More Effective Government (2013), and the AEA letters in support of legislation to use scientific methods and practices.

- Keep the discussion on evidence-based decision-making going at the Arizona Evaluation Network’s 2018 conference, From Learning to Practice: Using Evaluation to Address Social Equity, taking place this April in Tucson, AZ.

The American Evaluation Association is celebrating Arizona Evaluation Network (AZENet) Affiliate Week. The contributions all this week to aea365 come from our AZENet members. Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

Hello there,

My name is Umair Majid. I am currently completing a course called Program Inquiry and Evaluation at Queen’s University. I recently read your post about evidence-based practice – in practice. I want to discuss some of the topics and points you raised in your post.

The first point you raised was about the difficulty of describing and integrating evidence-based or evidence-informed practice. I want to step back for a moment and ask how you differentiate between these two orientations toward evidence. In particular:

• What are the differences between evidence-based and evidence-informed practice?

• What are the distinctions between these orientations towards the role of evidence in program evaluations?

• How may these distinctions influence program evaluation activities?

I am also interested in clarifying the effects of adopting an evidence-based versus an evidence-informed practice orientation on program evaluation activities.

The second point relates to the difficulty of adopting an evidence-based practice orientation. I recently surveyed some of the issues raised by the new political leadership in the United States. In essence, I understand that the stance of the political leadership is to deemphasize the importance and role of evidence-based decisions for various ideological and philosophical reasons. I am interested in discussing:

• What should be the role of political ideologies in evidence-based practices and academia?

• What is the actual role of political ideologies in practice?

• How does this move by various political parties to deemphasize evidence-based decision-making influence program design and evaluation?

• What are the short- and long-term outcomes of building social programs that are not guided by evidence-based practice?

I sincerely thank you for the time you are taking to read and respond to my questions. I hope I can gain insight into some of these questions from your perspective.

Sincerely,

Umair Majid

Hi Umair,

You certainly ask good questions. I will try to be as thoughtful in my responses as you were in your discussion.

From my fieldwork, I know there is a gap between what practitioners and researchers view as evidence. I use the term evidence-based much as it is described in the academic literature, or as it is used in grant requirements that list evidence-based or proven-effective programs: programs whose research outcomes have been published, most likely in an academic journal. I use the term evidence-informed for programs or practices that may or may not have a theoretical or conceptual framework and that use some strategies or activities that may be evidence-based. The program as a whole might have had an evaluation or pre-post surveys showing positive results, but it would not yet meet a higher level of standards. An evidence-informed program has some basis in evidence, just not the rigor required to be officially termed evidence-based.

Program evaluation can have a role in both situations. With an evidence-based program, evaluation may serve to ensure that the intervention, as applied, maintains its original effectiveness. With an evidence-informed program, an evaluation of whether objectives and outcomes were met (but without comparison or control groups) may be the only research that has been conducted. Either situation may call for an evaluation, but the activities are likely to be more prescribed for an evidence-based program and more formative for an evidence-informed approach.

As to your excellent discussion questions, I think these are exactly the topics that will be discussed at the AEA Conference in Cleveland around the theme Speaking Truth to Power. Should political ideologies have any role in evidence-based practice? Maybe yes, maybe no, but the truth is that politics does become involved, both in whether evidence is necessary at all and in how much evidence we need to be confident our dollars are worth spending. Thus politics and political ideologies do play a real role in setting priorities. Politics affects decisions about what types of programs to fund; for example, teen pregnancy prevention had its five-year funding cut back to three years. A de-emphasis on evidence-based decision-making places less value on evaluation and will probably lead to less funding for program evaluation in service grants, and possibly in basic science research; time will tell.

I prefer outcomes guided by evidence and a data-driven approach to decision-making, but I realize that not everyone agrees with me. So when I talk with my clients, some of whom are in government positions, I make the case for rigor and evidence, knowing that dollars, politics, time, staffing, and a host of other factors will guide any final decision about the types of evaluations I may ultimately conduct. Further, as a member of academia and a state university employee, my role is to educate and inform, not to influence.

Wendy